About this book

To cite this book, please use the following.

Frazier, Tyler James. (2021). Data Ekistics: Data Science for Human Communities and their Settlements. Williamsburg, Virginia: William & Mary / Data Science Series IA. https://tyler-frazier.github.io/dsbook/

For more about the author please see this webpage.

Preparations

description: >- Create a GitHub site that will use to populate with results from your geospatial data science investigation on your selected LMIC and administrative subdivision(s).

Getting started with GitHub

Through the course of this semester you will use GitHub as a repository to save and share your work. GitHub uses a fairly simple programming language called markdown which you will use to present content you have created. Upon completing your different GitHub sites, I will ask you to post a link to your website on the appropriate slack channel #data1X0_ prior to the given deadline.

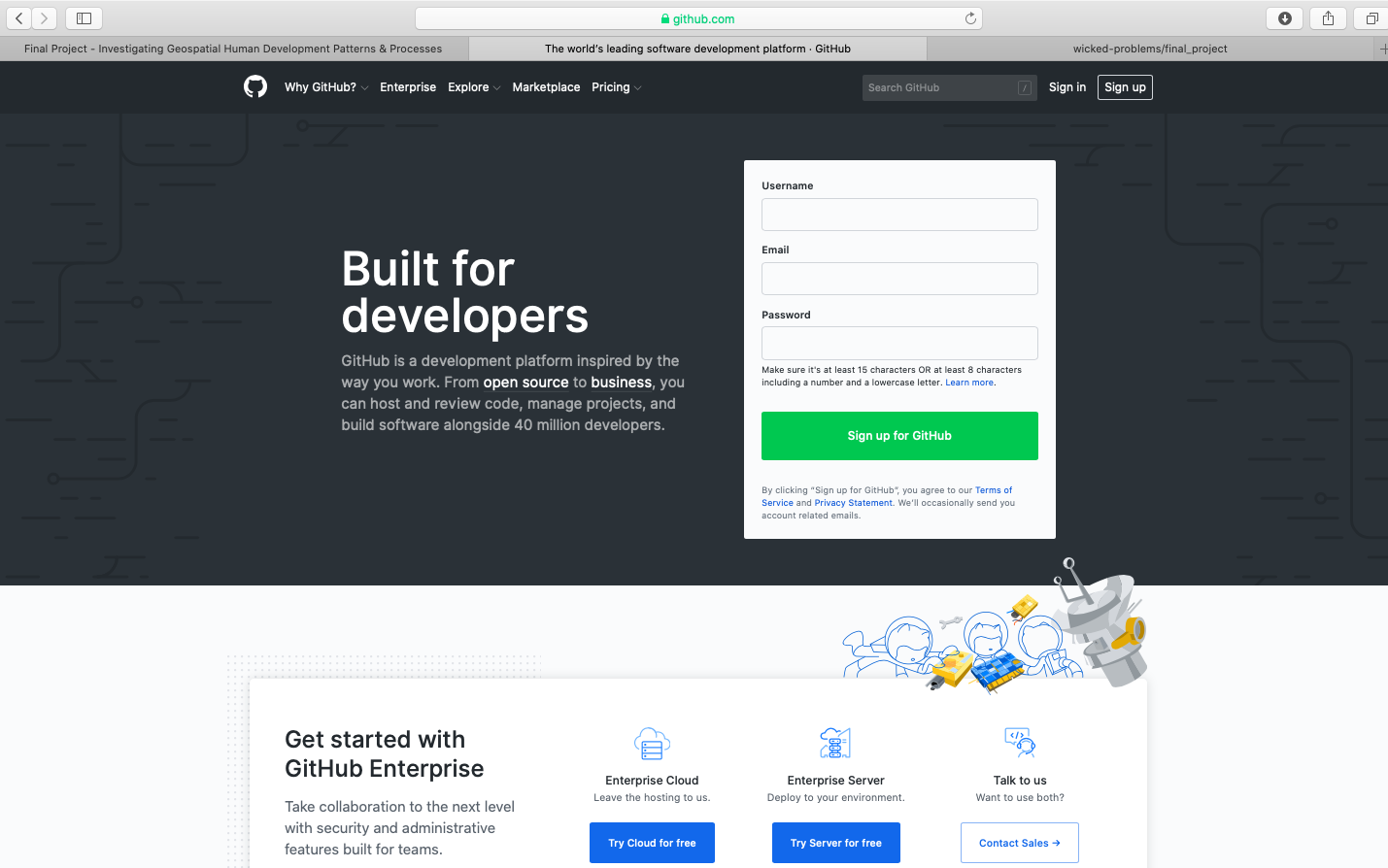

To begin go to https://github.com and create a new account. You should see a website that is very similar to the following image.

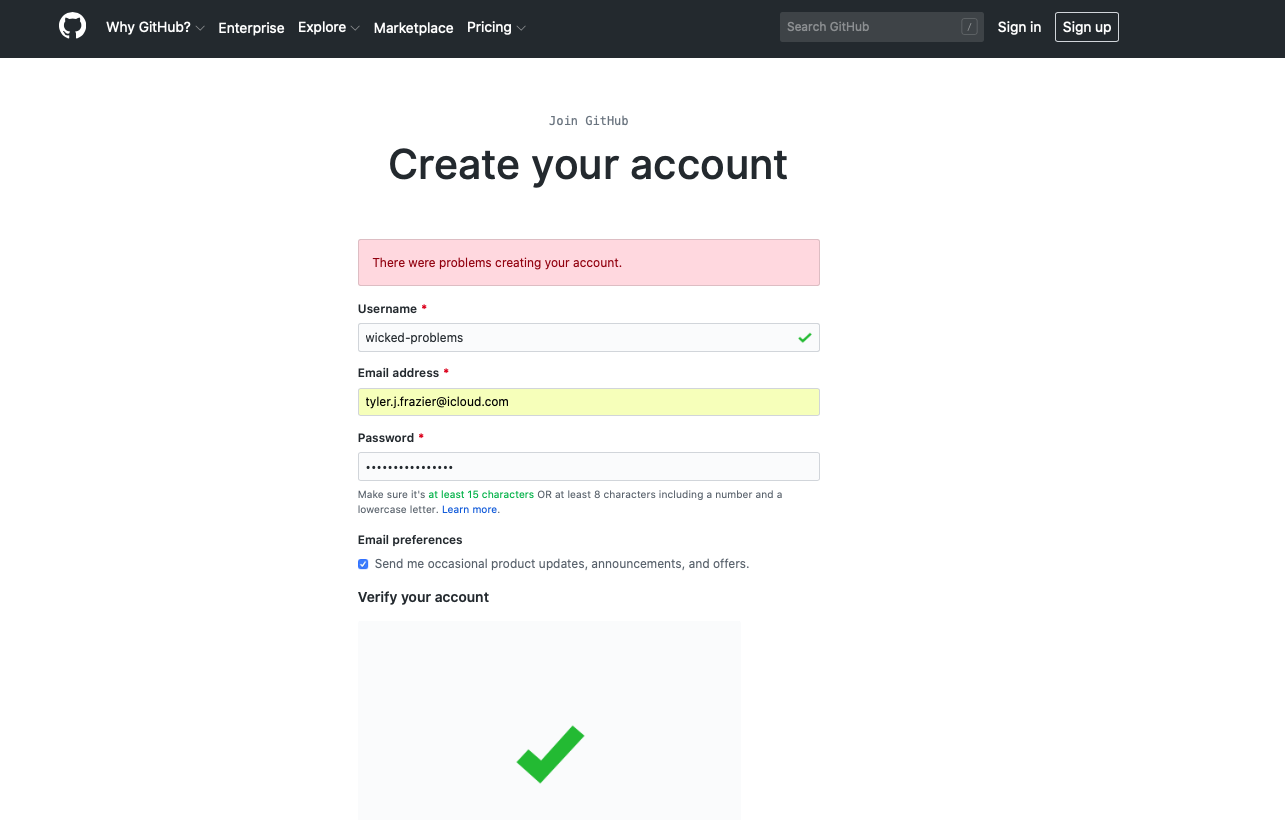

After clicking on the sign up link, create your account by designating your username, e-mail address and password. In order to simulate the process of signing up, I am using the username wicked-problems. My real GitHub account is https://github.com/tyler-frazier, and you are welcome to follow me, although it is not necessary for this final project.

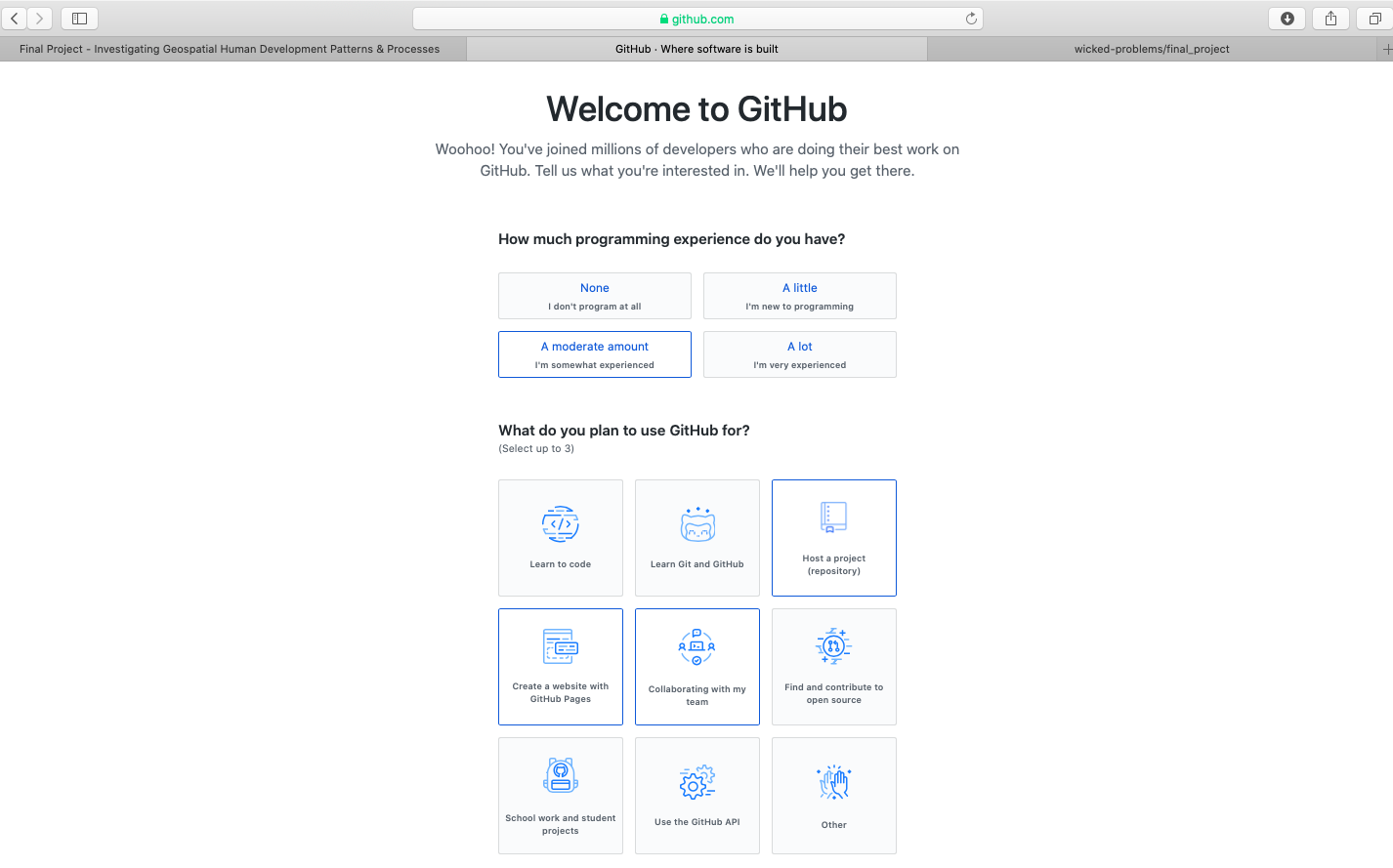

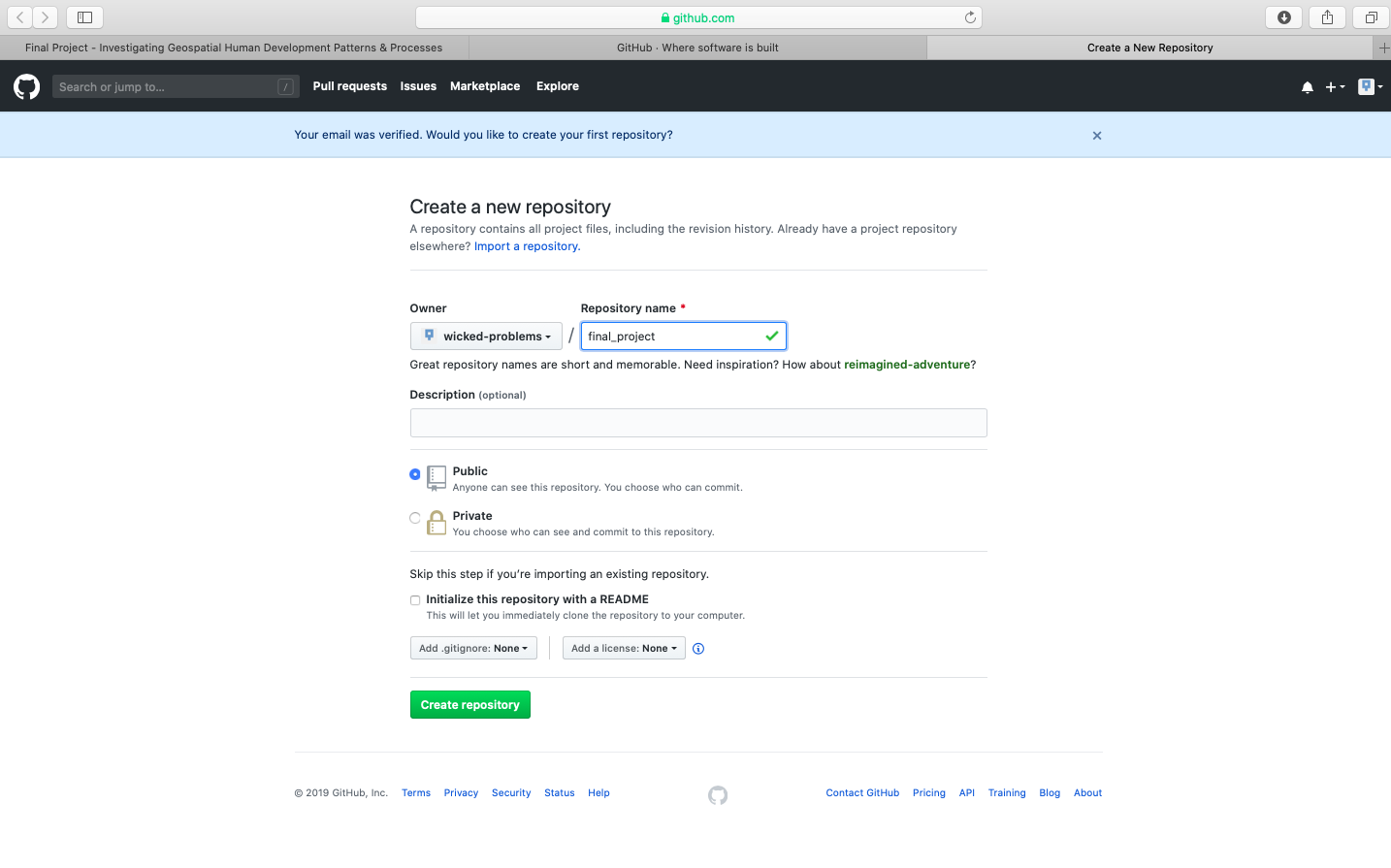

After creating your account, you should receive an e-mail asking to verify your account. Go ahead and verify, so GitHub can permit you to create a new repository. Once you verify your e-mail address, GitHub will likely ask if you want to create a new repository. If somehow you are not automatically asked to create a new repository, it is also possible by selecting the + pull down arrow in the top right corner of the page.

You will also notice that there is a guide made available for new users (the green, read the guide tab). This is really good guide to read, in order to learn how to use GitHub as a version control system. Although you will be using only a small amount of GitHub's full potential for this final project, I highly recommend making a mental note of the guide and returning to the 10 minute read when you have some time. If you are planning to major or minor in data science, computer science, or any discipline that has a signficiant compuational component, it will be very likely that at some point in the future you will need to use a version control system (such as GitHub) for repository control, sharing, collaboration, conflict resolution etc...https://guides.github.com/activities/hello-world/

Create your first repository. In the following example I have named my repository workshop.

After creating your repository, go to the main page for your repository. You should see a quick setup script under the code tab. Click on create a new file under the quick set-up at the top of the page in order to populate your newly created repository with a file named README.md. The .md extension after the filename is the extension for a markdown file. Markdown is a simple, plain text, formatting, syntax language which has as its main design goal, as-is readability. It is a relatively simple language that will enable you to program webpage content fairly easily.

This should bring you to a new page where you are able to create a new file. In order for your GitHub Pages site to function properly, you will need a README.md file in the root folder of your repository. Below the field for the file name is the markdown file body, where you will type your script. Add a first level header to your README.md file by adding one # and following it with your title.

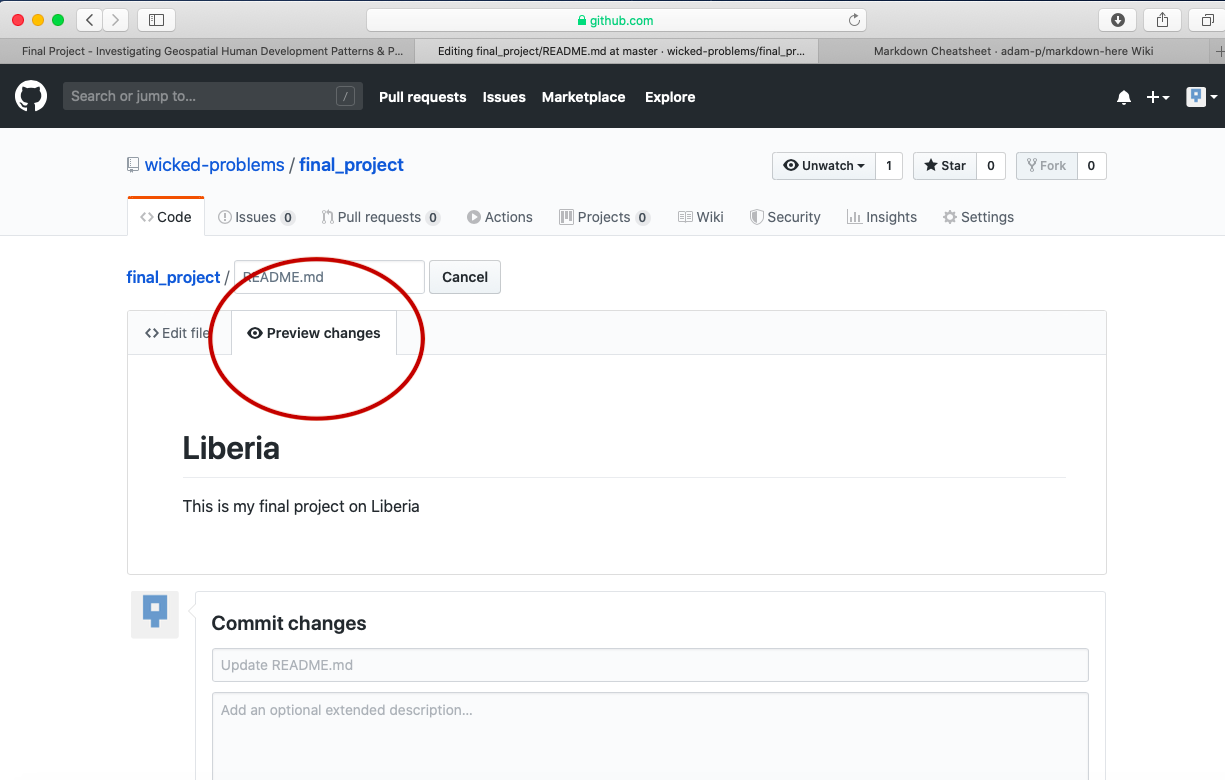

You can also preview the output from your markdown file, by clicking on the preview changes tab.

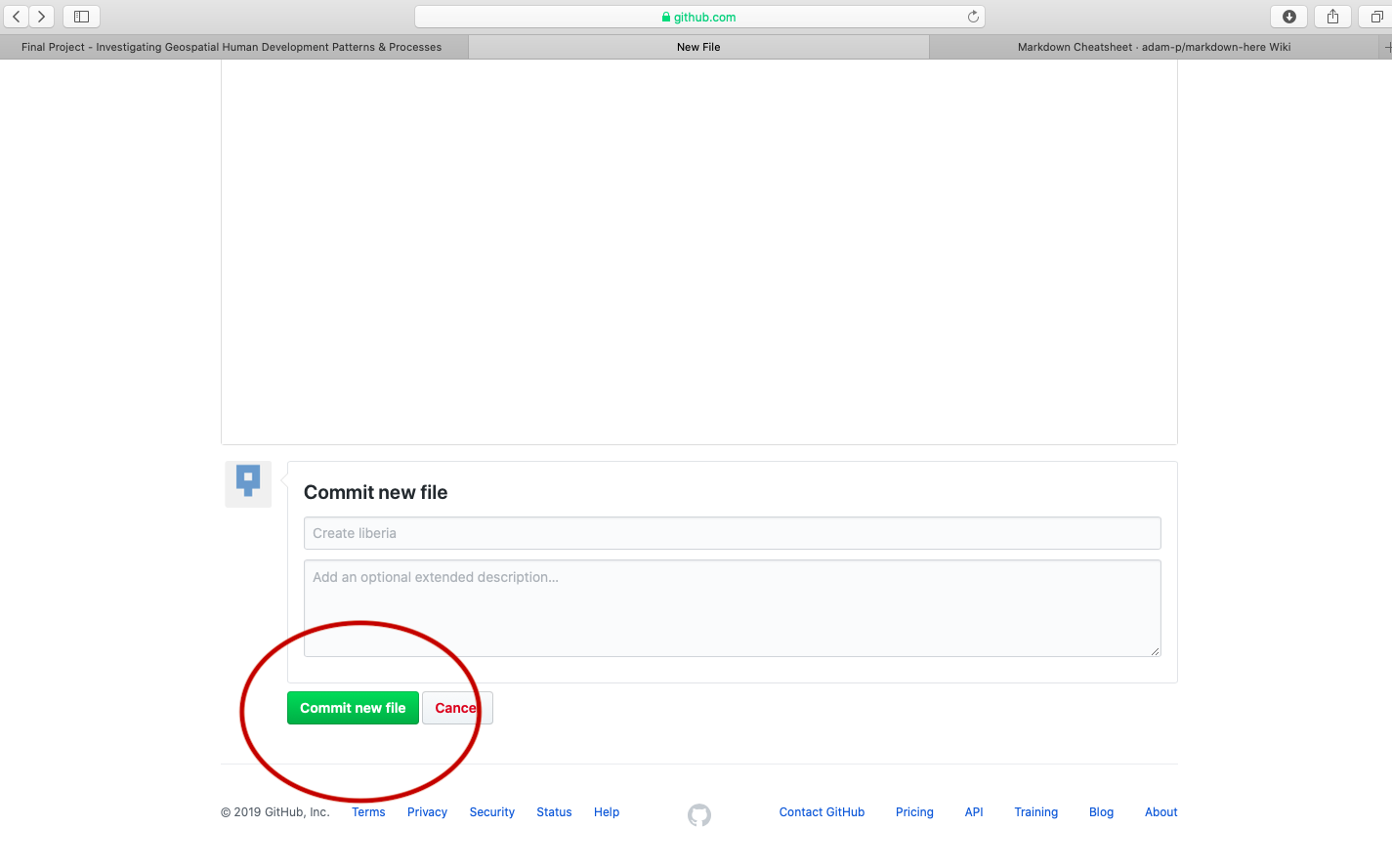

After typing the simple markdown script, scroll to the bottom of the page and click on the green commit button, to commit your file to your repository. You will need to press this green button, each time you edit the content within a file or add new files to your repository. By making a new commit to your repository, you are essentially updating all of the changes you had previously made. While in the case of your final project, there is essentially only one person executing changes per repository, potentially a version control system has the power of resolving conflicts amongst multiple persons all committing changes to the same file simultaneously. That is the power of a version control system, such as GitHub.

To begin getting an understanding of how to use markdown, have a look at the following cheatsheet. The two main areas to note are how to use headers and then a bit further down in the cheatsheet, how to produce tables.

{% embed url="https://github.com/adam-p/markdown-here/wiki/Markdown-Cheatsheet" %}

For the final project, you will only be using headers, paragraph text and inserting images. After having a look at the markdown cheatsheet, return to the main page of your repository, which should appear similar to the following image. After navigating to that location, click on the settings tab in the top right hand corner.

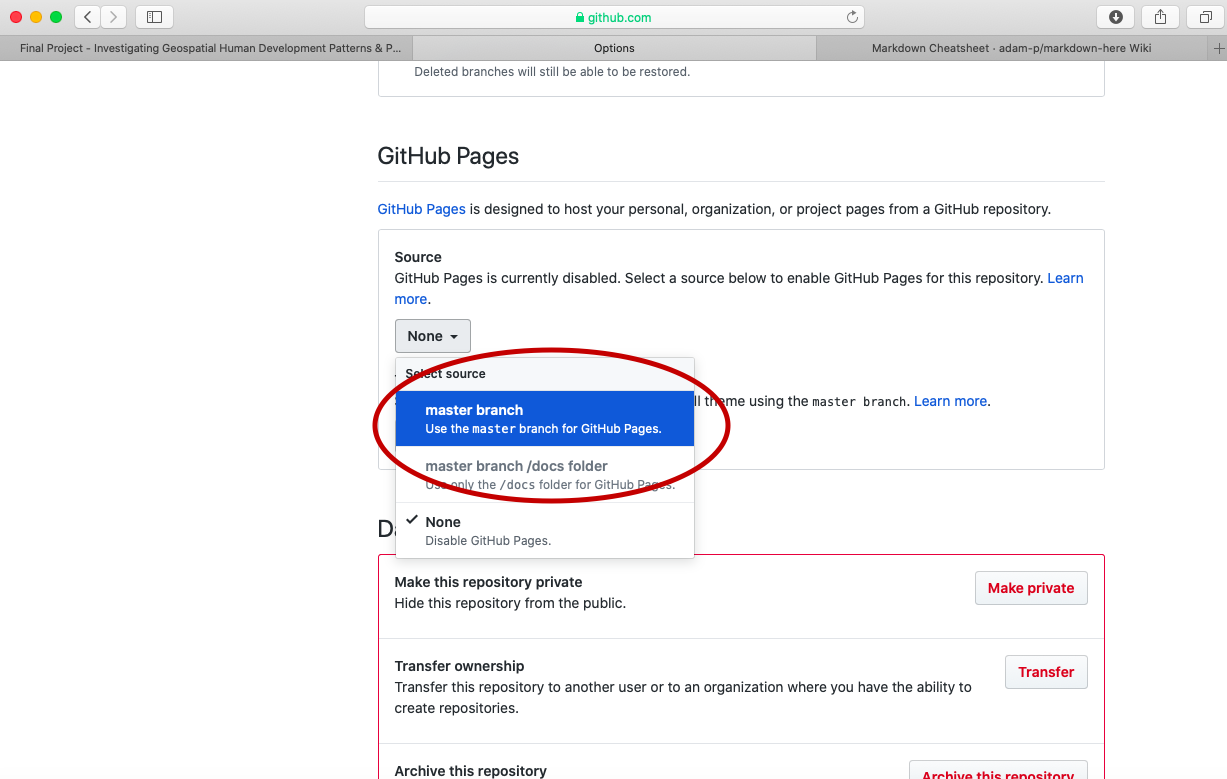

Scroll down to the GitHub Pages section under settings and change the page source from none to master_branch.

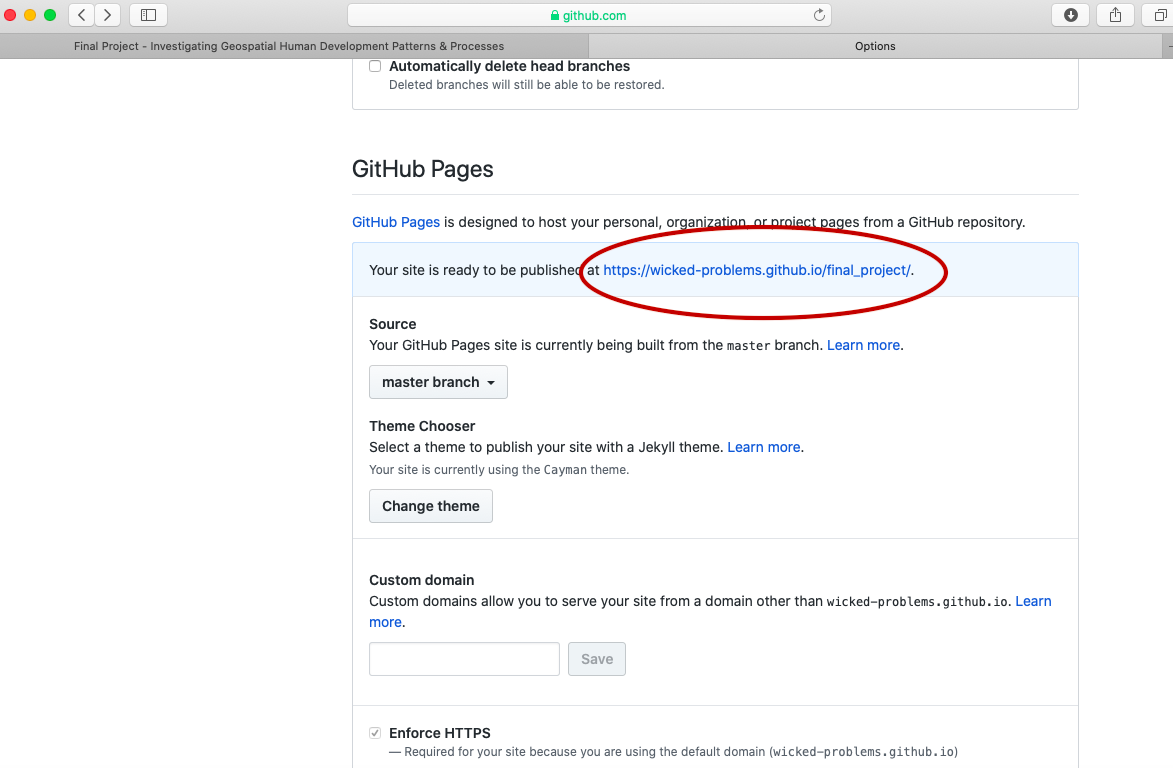

After setting the branch where your GitHub Pages files will reside, also select the theme tab and choose one of the available themes. I chose the theme Caymen for my page, but you are welcome to select any of the available themes for your final project. After, selecting your theme and returning to the GitHub Pages section, you should notice a web address appear where your site has been published. It might take a few moments for your webpage to appear, but not more than 10 or 15 seconds. Usually it updates and publishes almost immediately.

After clicking on the link, the newly created webpage that you will use to publish the results from your investigation should appear. To start making changes to your website, go back to the main page of your repository and select the upload files tab.

This should bring you to a page that will enable you to upload the images you have produced from each of the previous projects. The interface should appear similar to the following image. You can simply drag and drop images directly through the GitHub upload files interface.

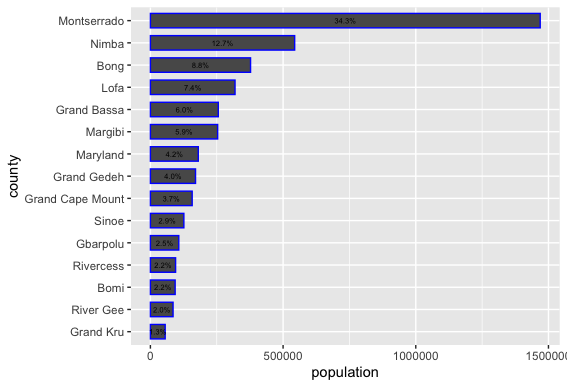

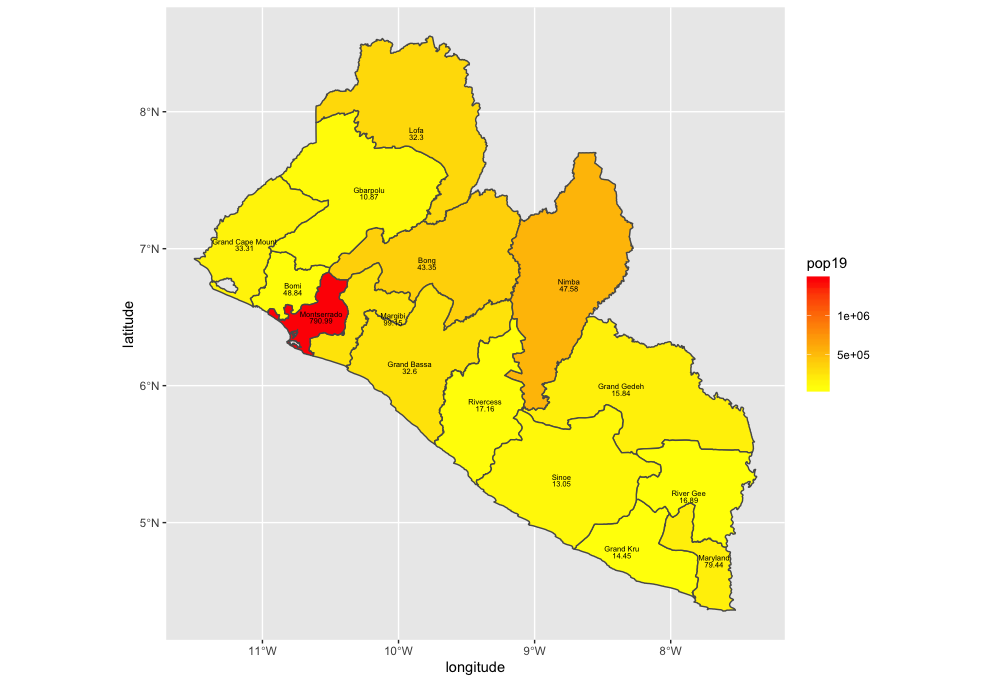

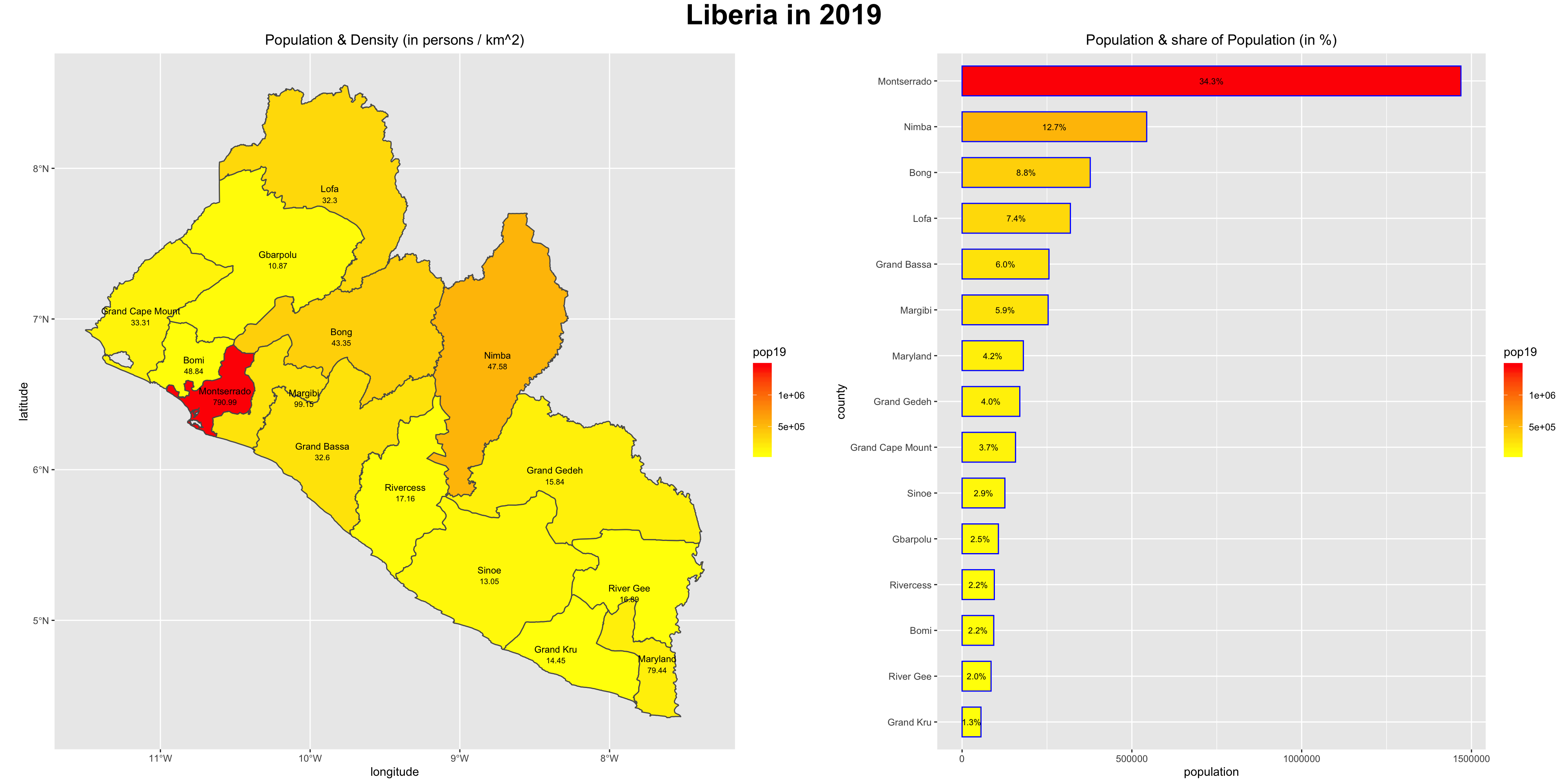

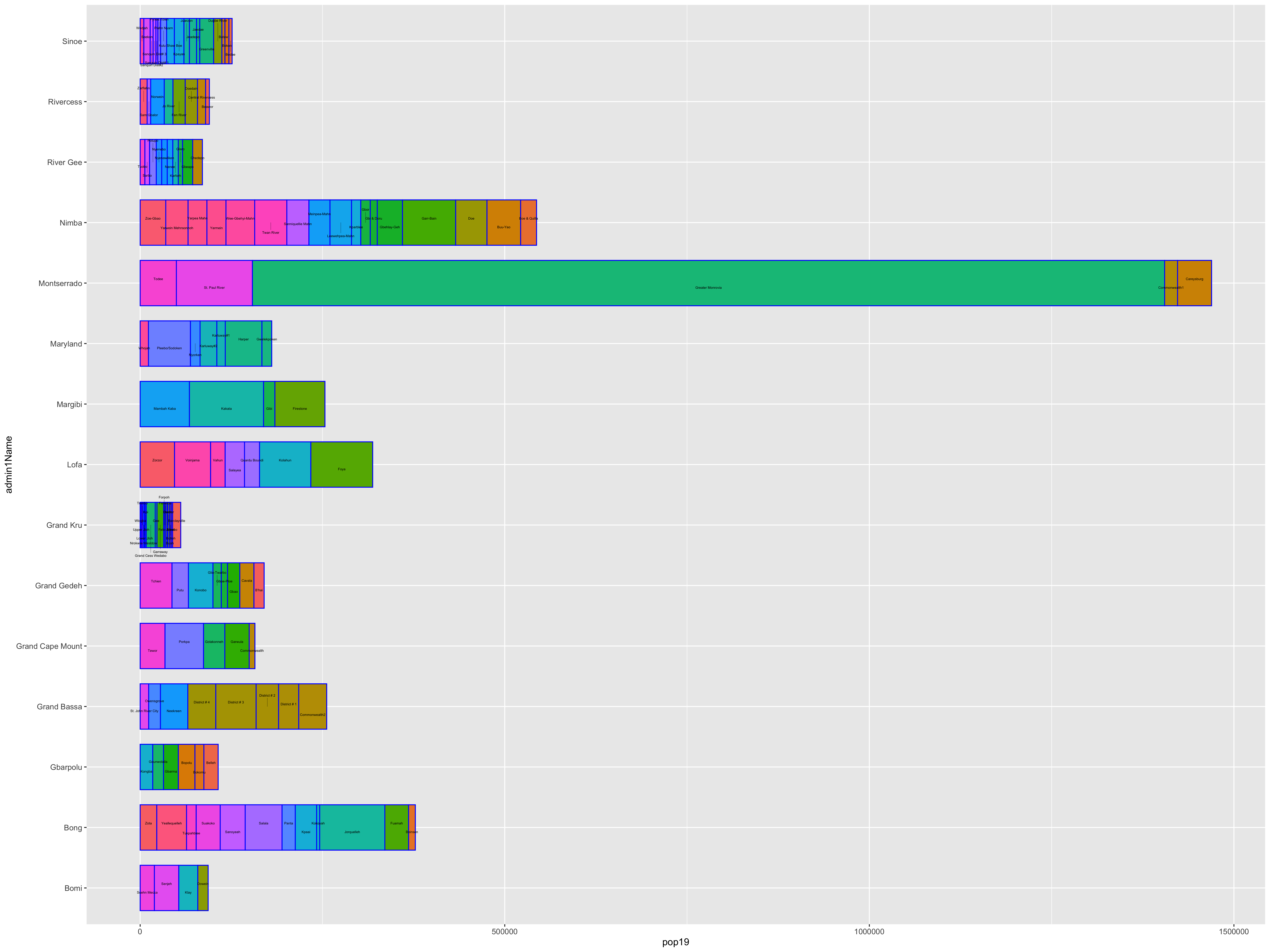

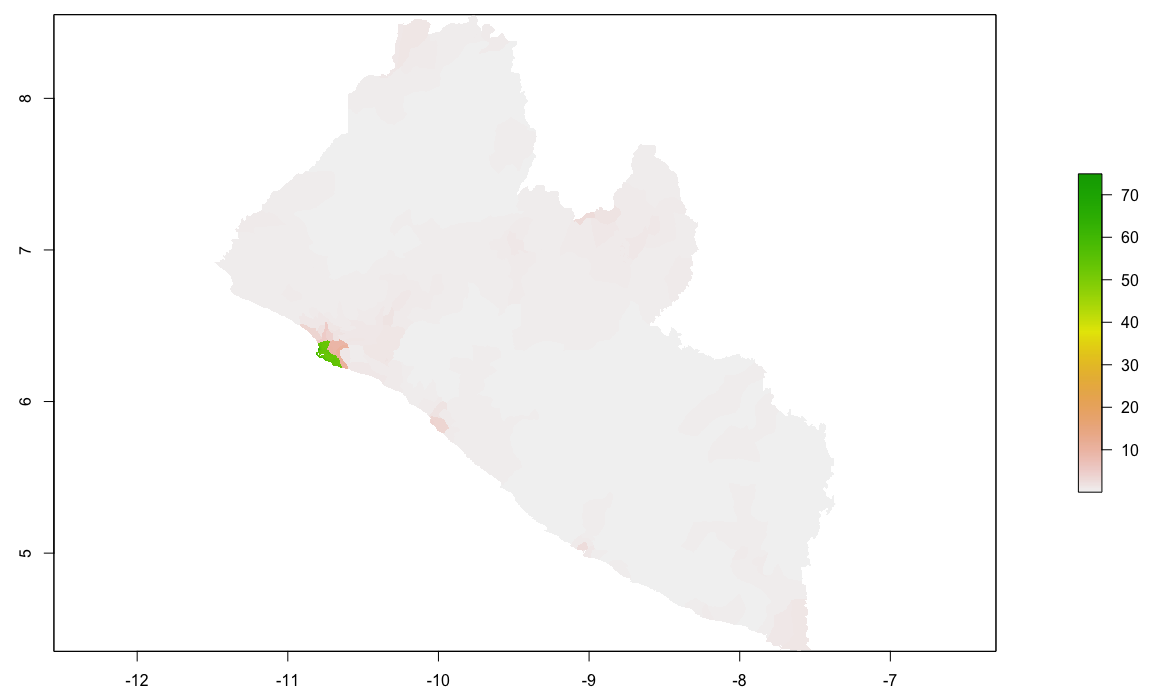

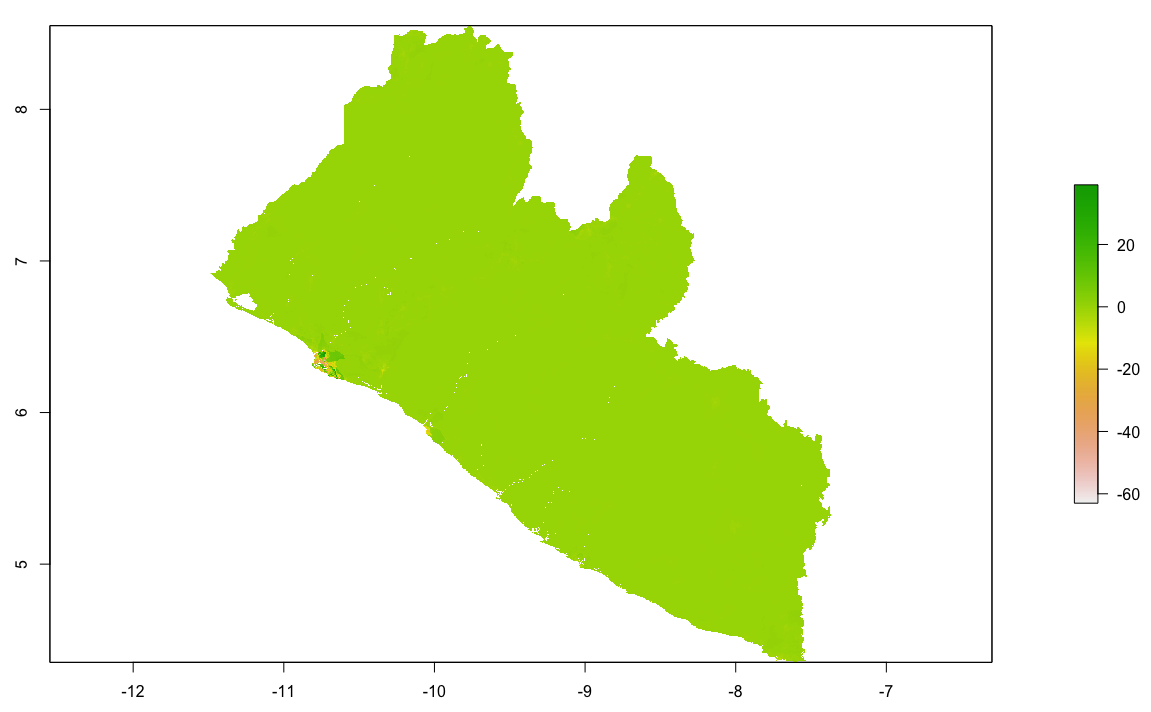

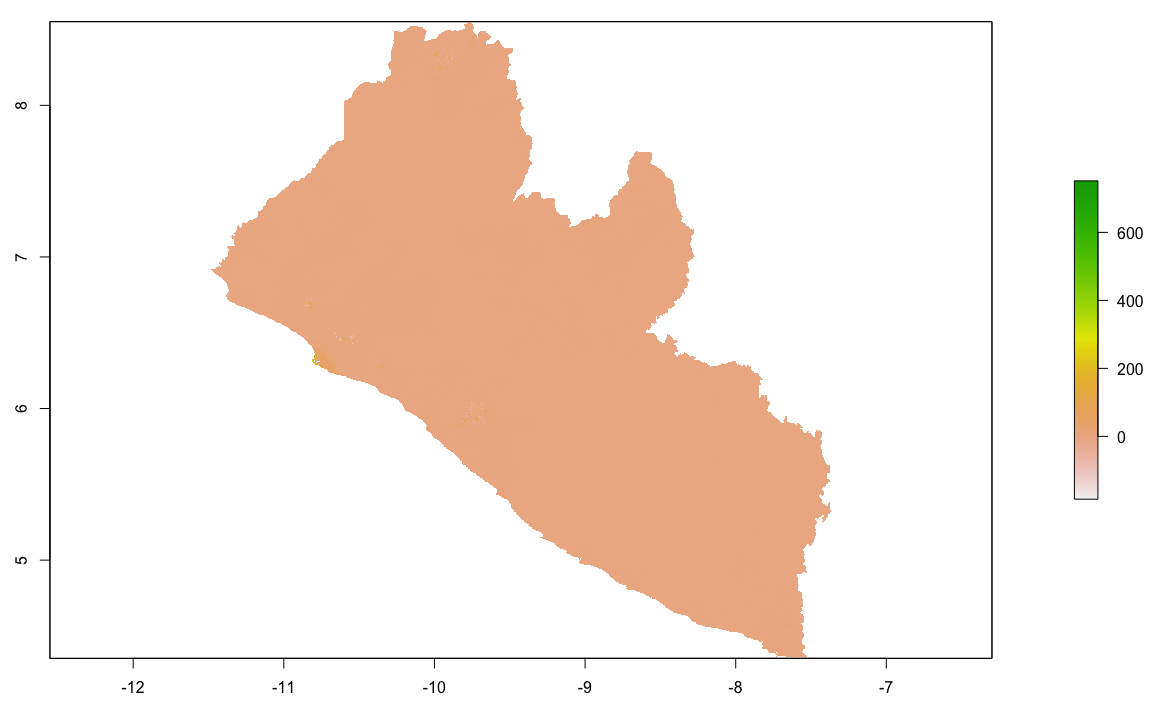

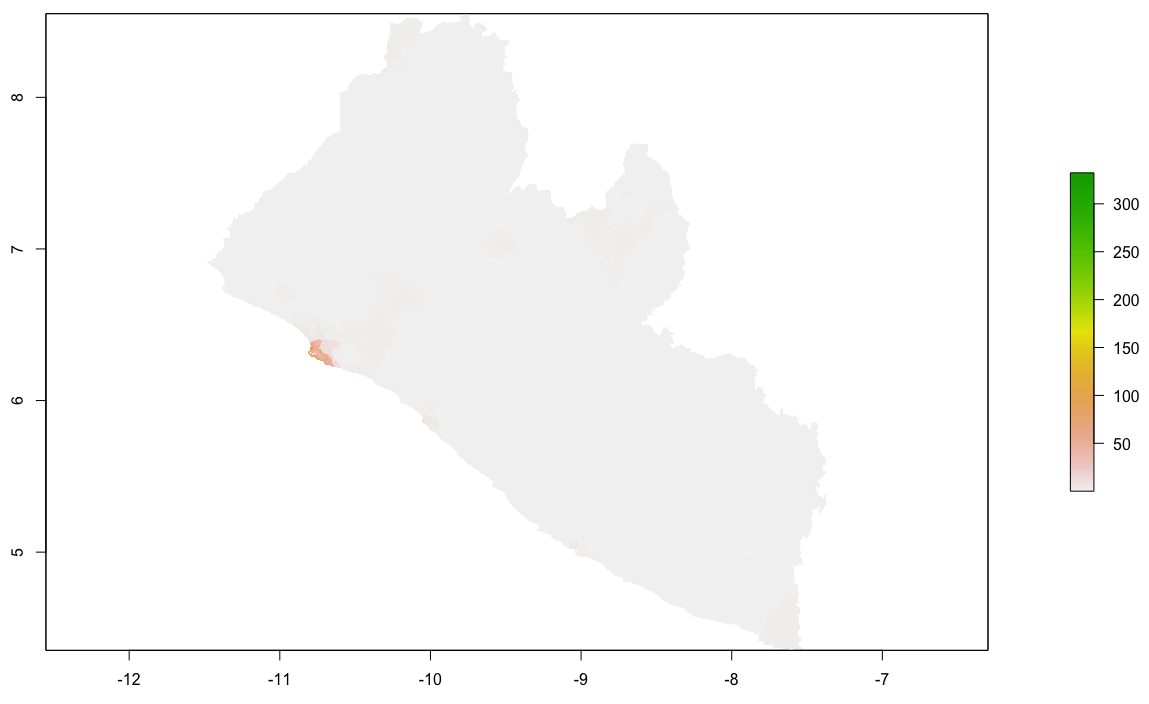

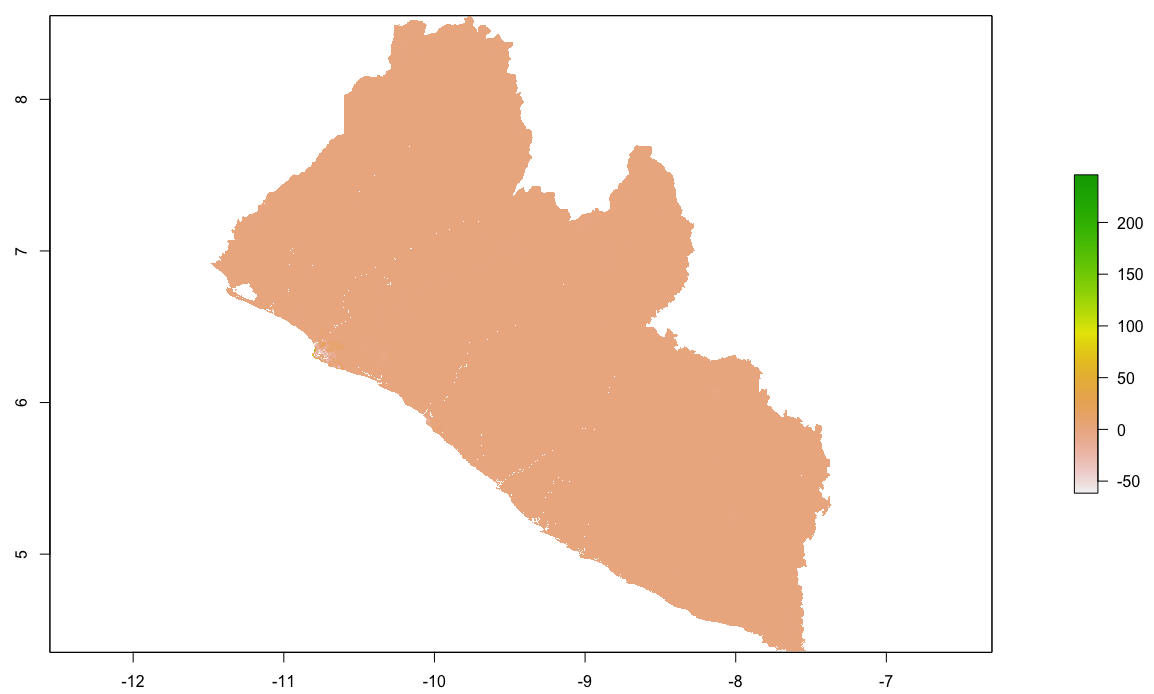

I will begin by dragging and dropping a few of the plots produced that describe the administrative subdivisions of Liberia as well as the spatial distribution of it's population.

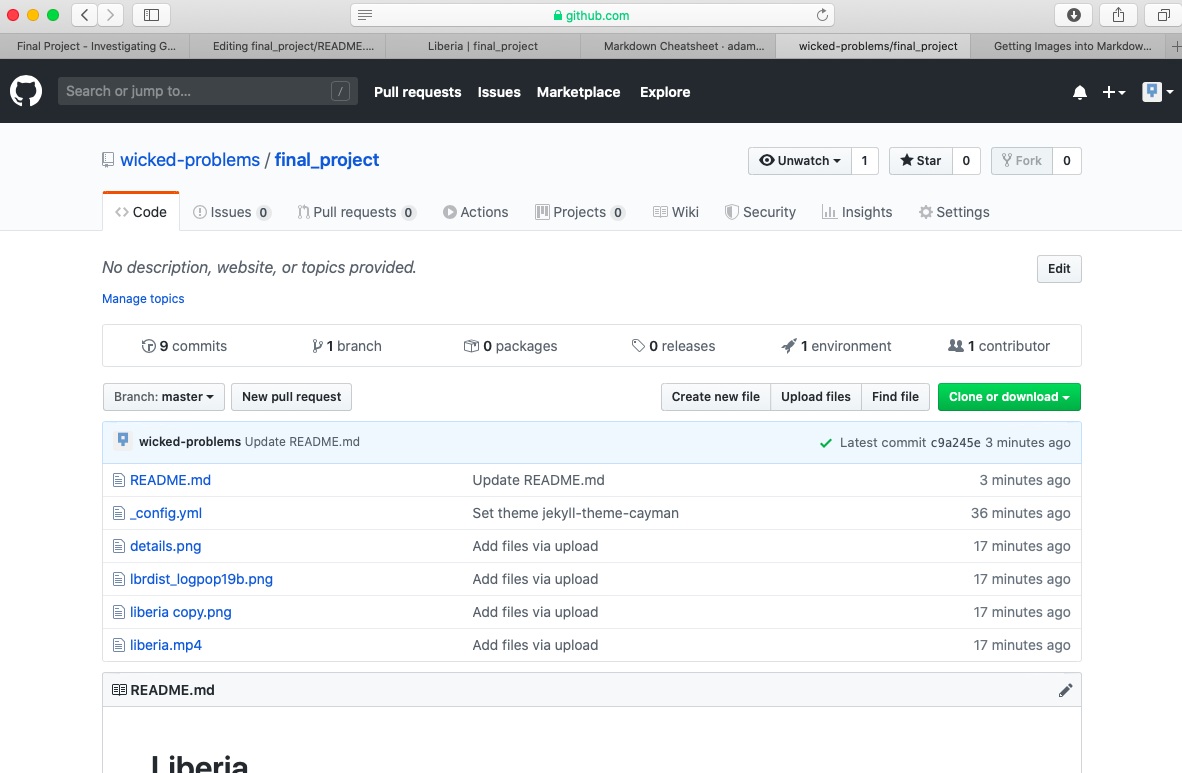

After dropping the files into my repository, the basic file structure appears as follows.

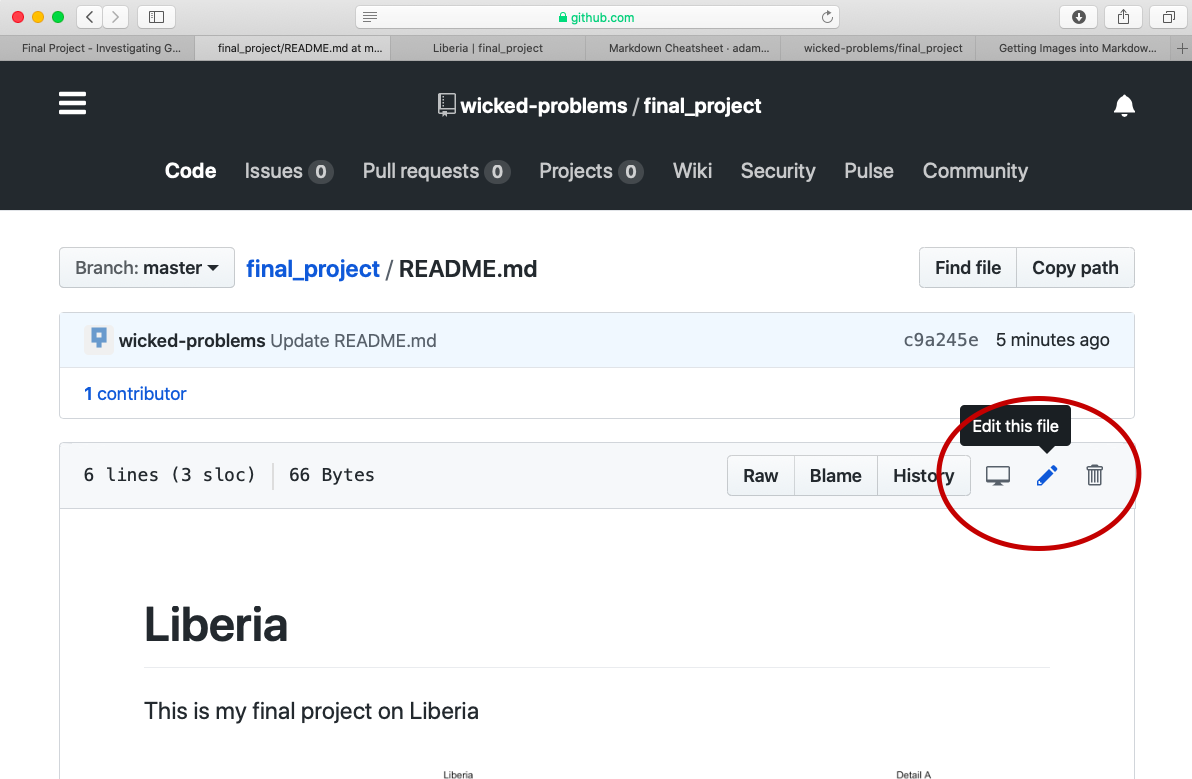

To add the image details.png to your README.md file, first select the README.md file, and then select the pen image in the upper right hand side of the screen to begin editing the content you already saved to your markdown file.

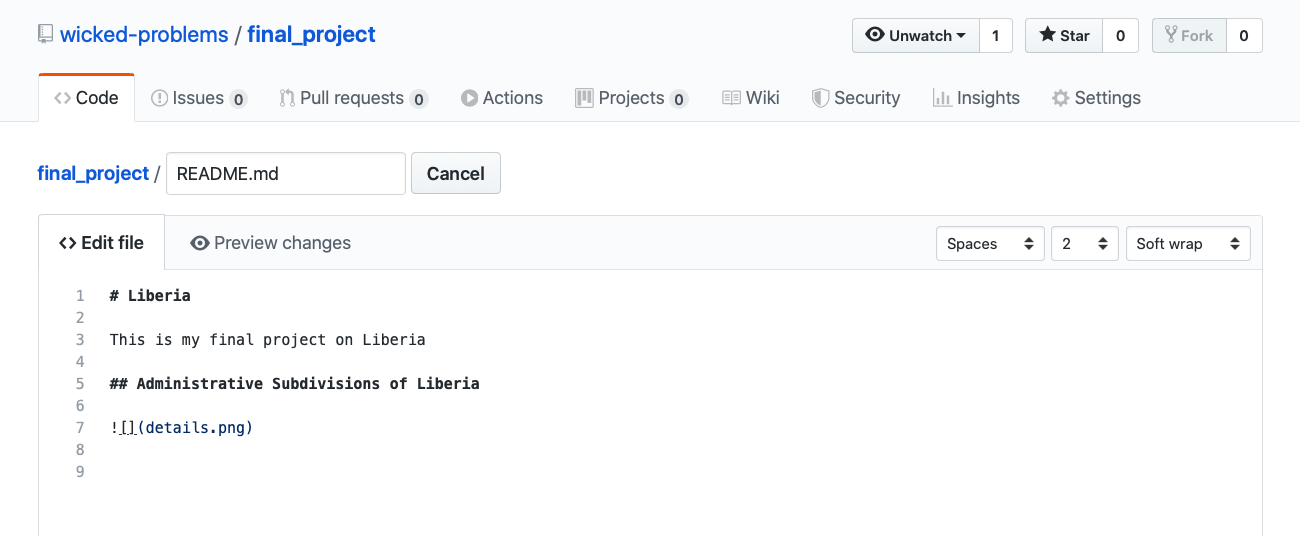

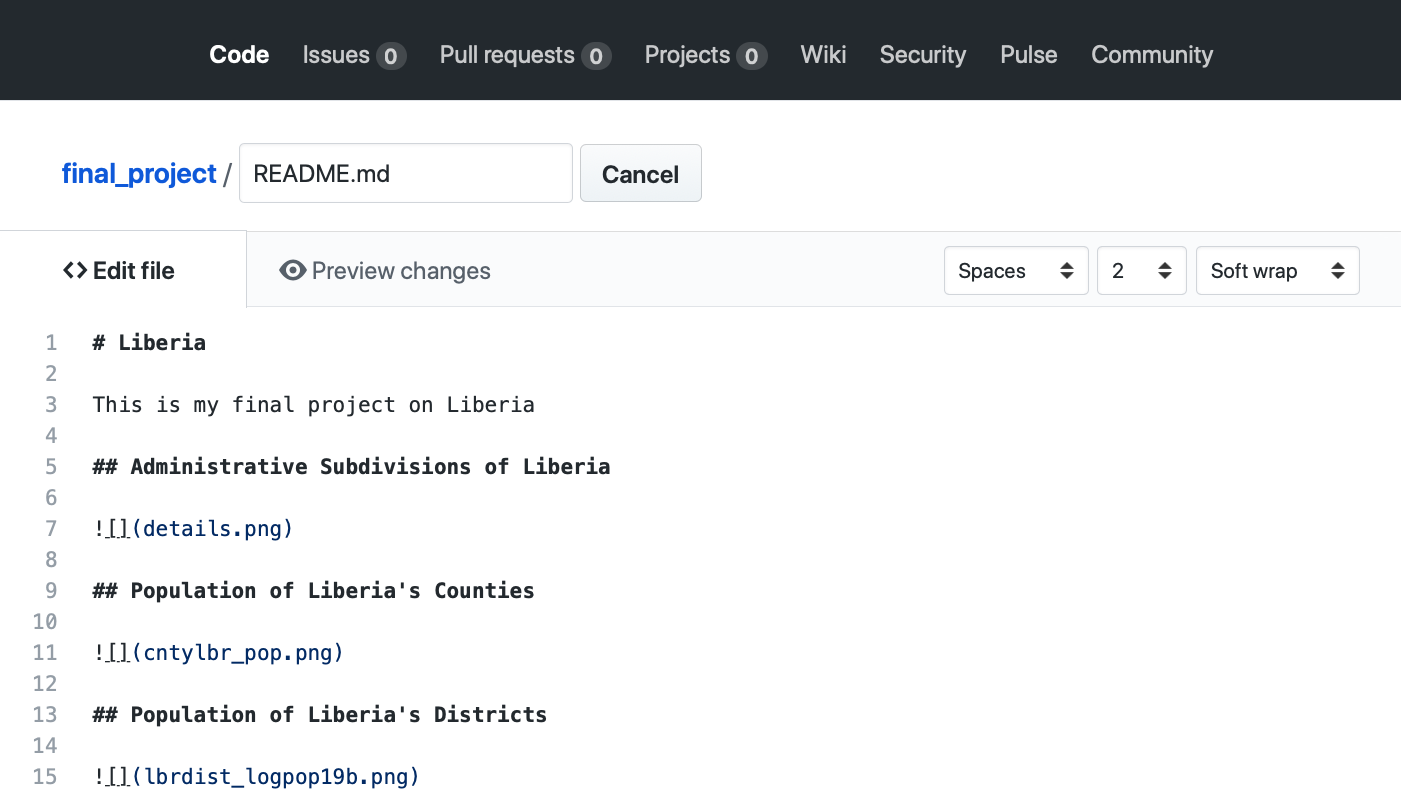

After opening up the markdown file editor, add a second level header by preceding the text with two ## and then add your image by adding a ![] in advance of the file name details.png , which is contained within () . Don't forget to scroll down to the bottom of the page and click on the commit button to make sure the changes you have made to the file are properly committed to the repository. If you do not commit your changes to your repository, your file will not have been saved nor will your webpage updates be published.

After committing the changes and waiting a few moments, your changes will appear to the published webpage.

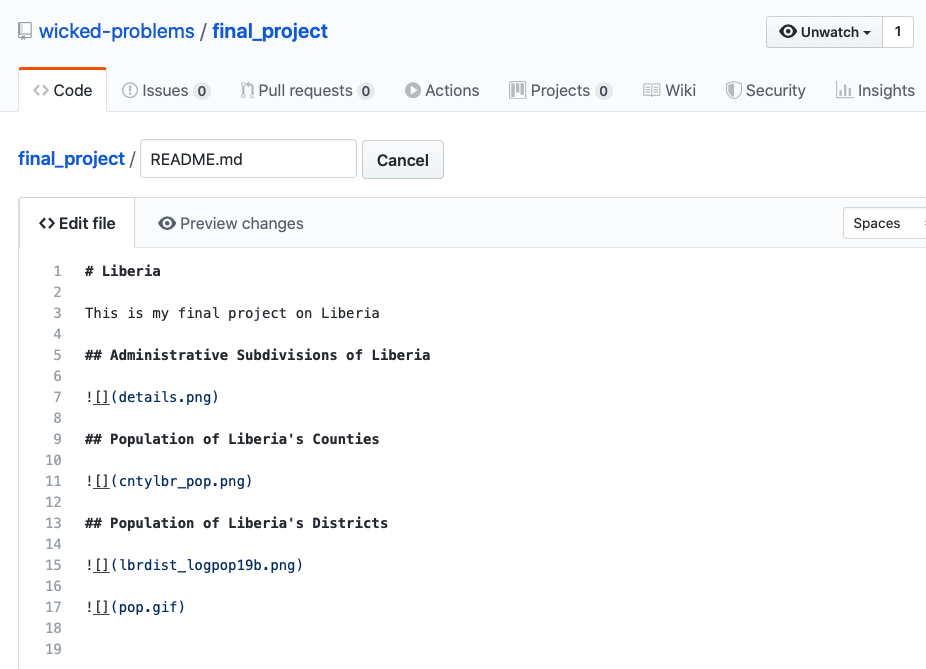

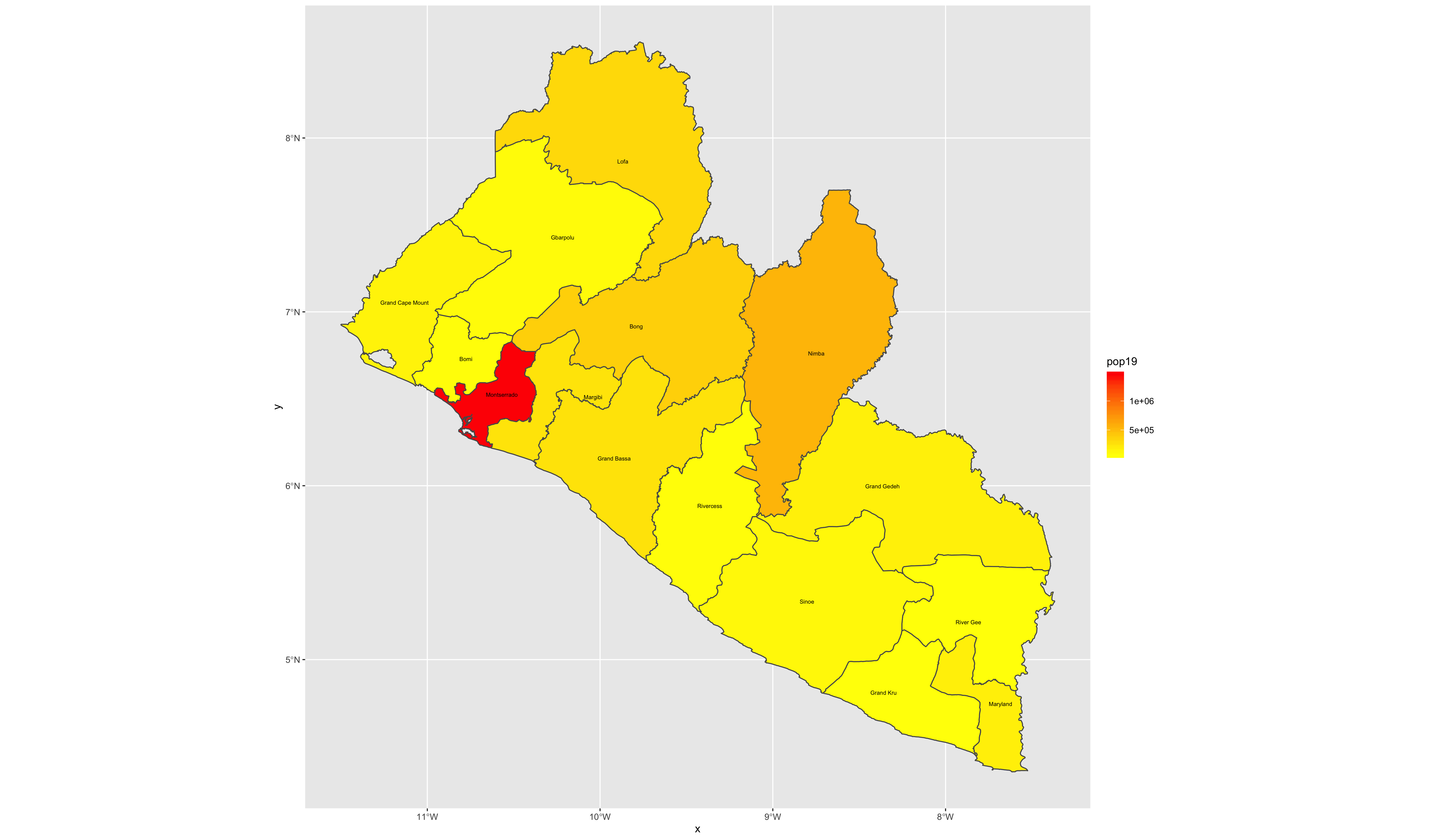

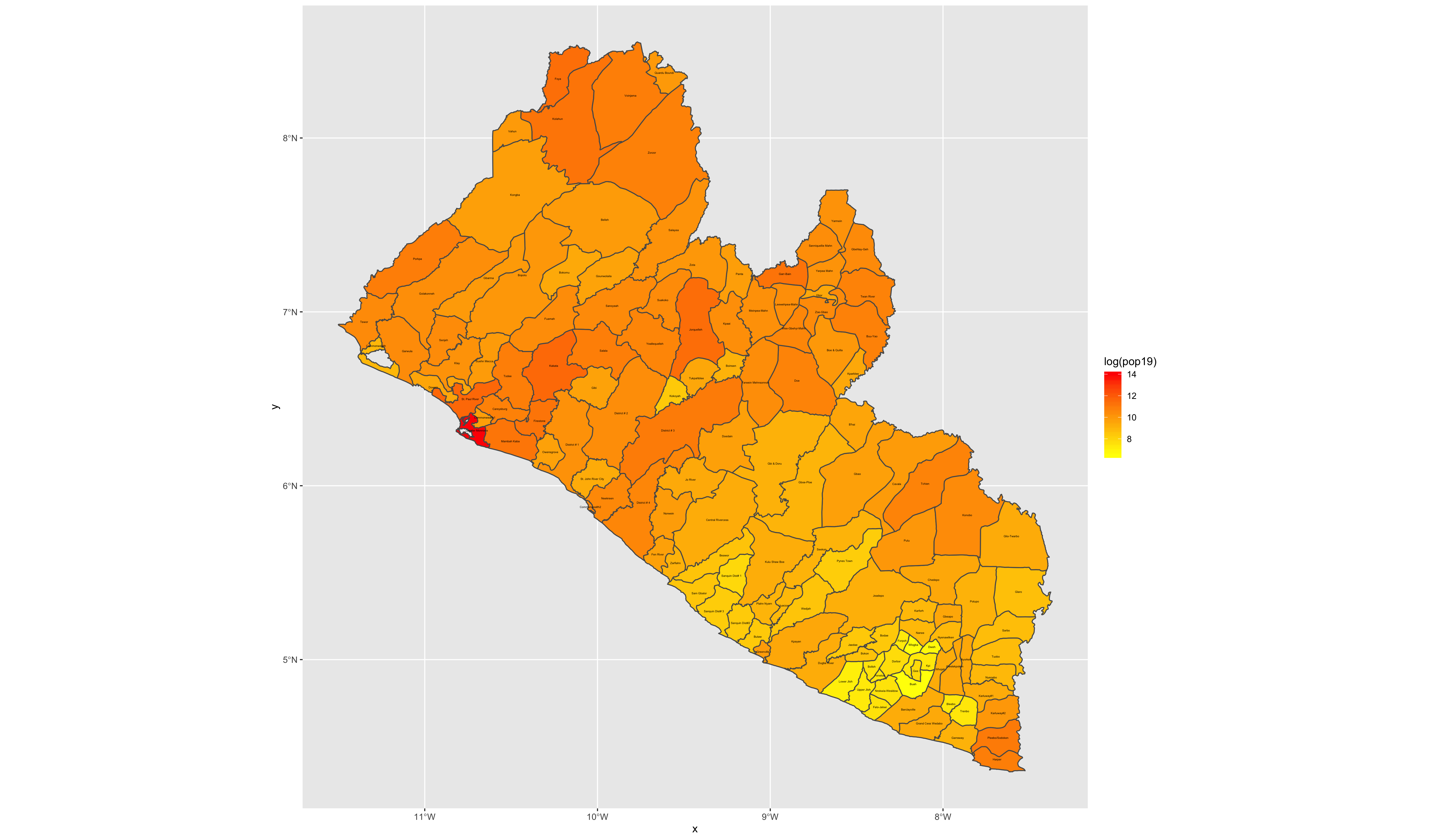

After adding your map that describes the political subdivisions of your LMIC, also add your description of population as spatially distributed at different adm levels.

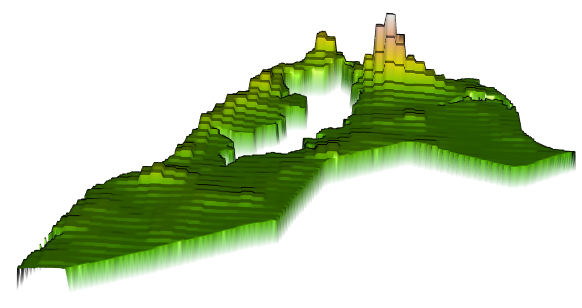

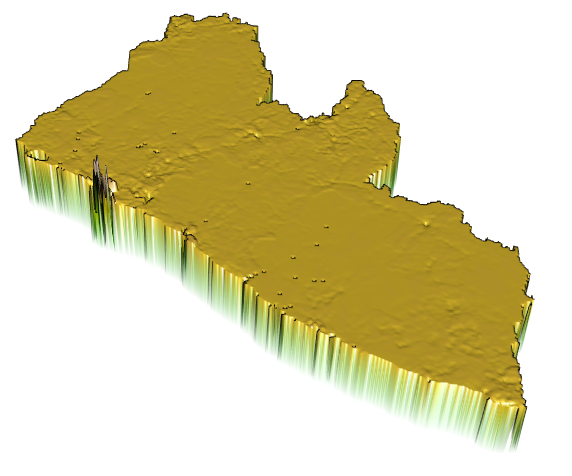

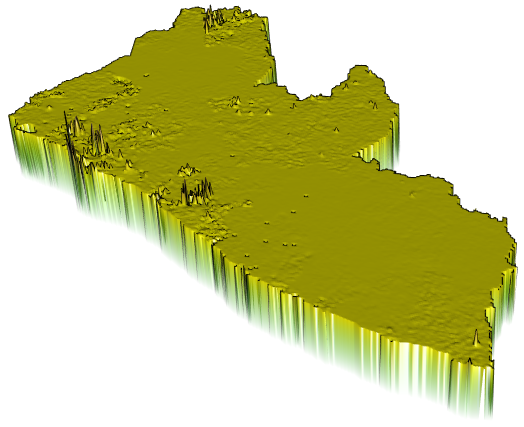

If you created an animated video, such as a .mp4 file that rotates and describes population in three dimensions, you will need to convert that file to a .gif in order to include it in your project webpage. This is fairly easily accomplished by using an online conversion tool. I simply entered "online conversion of mp4 to gif mac" into google, and the search engine returned several possible options, such as https://ezgif.com/video-to-gif, among many others. After converting your .mp4 to a .gif , upload the file to your repository and include it as an image in your markdown file, just like the other images.

Which will produce the following image as part of your webpage.

{% embed url="https://wicked-problems.github.io/final_project/" %}

Now continue to populate your newly created GitHub Pages site, using markdown and your README.md file. There are a multitude of different structural approaches you could take to creating your webpage, such as creating different markdown files and providing links to each, but in the most basic form, you can simply use the README.md file to produce your final project.

images/# Installing R and R Studio on your Computer

What is R?

R is a free, open source, programming environment for statistical computing and graphics that compiles and runs on Linux, Windows and Mac OSX. It is a very capable programming environment for accomplishing everything from an introduction to data science to some of the most poweful, advanced, and state of the art computational and statistical methods. R is capable of working with big data, high dimension data, spatial data, temporal data, as well as data at pretty much any scale imaginable, from the cosmos to the quark and everywhere in between.

The statistical programming language R is often called an interpreted programming language, which is different from machine or native programming languages such a C or Java. An interpreted programming language is distinguished from machine languages because commands and arguments are interpreted prior to being executed by the programming engine. Python is another, closely related interpreted programming language that is also popular amongst data scientists. Although the use of an interpreter compromises speed, interpreted languages have a distinct advantage in their capacity to be more readily accessible and understandable. For example, commands such as plot, read.csv(), or cbind() can be fairly easily understood as the commands for plotting an object, importing a .csv file or binding together columns of data. This accessibility has led to the strength of an open source community that is constantly developing new functions for use within the R programming framework and well as supporting their use by the larger community. One of the major advantages of an open source approach to programming is the community that supports and contributes to R continued development.

In addition to being an open source programming framework, learning to use R also fullfills one of the fundamental principals of the scientific method, reproducibility. A reproducible programming environment functions by always keeping source data in its original state external from the R framework. Data is then imported to the work session and all changes occur within the framework through the code as it is sequentially executed. In this manner, any code one writes is perfectly reproducible not only an unlimited number of times you choose to run it as well as by any other person who has access to your code (unless you are incorporating probabilities type methods in your code). Compare this reproducible workflow concept to software that employs a graphic user interface (GUI), where commands are executed by selecting a pull down menu and following a series of preset options associated with each command. Excel, Pages, Arc or QGIS are examples of software that use a GUI as their primary means of user interaction. Most programming environments keep the code separate from the interpreter or compiler and is much more easily reproducible.

While R is easier to learn than more difficult programming languages such as C or Java, increasing its ease of use can be greatly advanced by using an integrated developer environment (IDE). One of the most popular IDEs for R is called RStudio. RStudio is dependent upon R in order to function, and literally sends commands and receives results to/from the interpreter. RStudio has a number of different features that facilitate programming, project management, graphics production, reviewing data and a whole slew of other useful functions. First you will want to install R and the associated tools, then follow by installing RStudio on your computer.

Installing R

Before installing R on your operating system, it is a good idea to briefly assess the state of your computer and its constituent hardware as well as the state of your operating system. Prior to installing a new software environment, such as R, I always recommend the following.

- Do your best to equip your personal computer with the latest release of your operating system

- Make sure you have installed all essential updates for your operating system

- Restart your computer

- Make sure that all non essential processes have not automatically opened at login, such as e-mail, messaging systems, internet browsers or any other software

After you have updated your computer and done your best to preserve all computational power for the installation process, go the R Project for Statistical Computing website.

Find the download link and click on it. If this is the first time you have downloaded R, then it is likely that you will also need to select a CRAN mirror, from which you will download your file. Choose one of the mirrors from within the USA, preferable a server that is relatively close to your current location. I typically select, Duke, Carnegie Mellon or Oak Ridge National Laboratory. A more comprehensive install of R on a Mac OS X will include the following steps.

- Click on the

R.pkgfile to download the latest release. Following the steps and install R on your computer. - Click on the XQuartz link and download the latest release of

XQuartz.dmg. It is recommended to update your XQuartz system each time you install or update R. - Click on the tools link and download the latest

clang.pkgandgfortran.pkg. Install both.

Following are two video tutorials that will also assist you to install R on your personal computer. The first one is for installing R on a Mac, while the second video will guide you through the process on Windows.

Video tutorial of how to install R on a Mac

Video tutorial of how to install R on Windows

Installing RStudio

RStudio is an integrated developer environment that provides an optional front end, graphic user interface (GUI) that "sits on top" of the R statistical framework. In simple terms, RStudio will make your programming experience much easier, and is typically a good way for beginners to start off with a programmer langauge such as R. RStudio assists with coding, executing commands, saving plots and a number of other different functions. While the two are closely aligned in design and function, it is important to recognizing that RStudio is a separate program, which depends on R having first been installed.

To install RStudio go to the following webpage and download the appropriate installer for your operating system.

Local Installation of Python and PyCharm

Downloading Python and Pycharm installation files

In order to install python, you can simply go to the python website and find the correct version, download and install it. The latest version of python is 3.9.1, although if you are planning to use the tensorflow library of functions (to employ applied machine learning methods for example), at this time, the tensorflow library is only functional up to python version 3.8.7. For our purposes let's just stick with Python 3.8.7, although I will also provide the main link below.

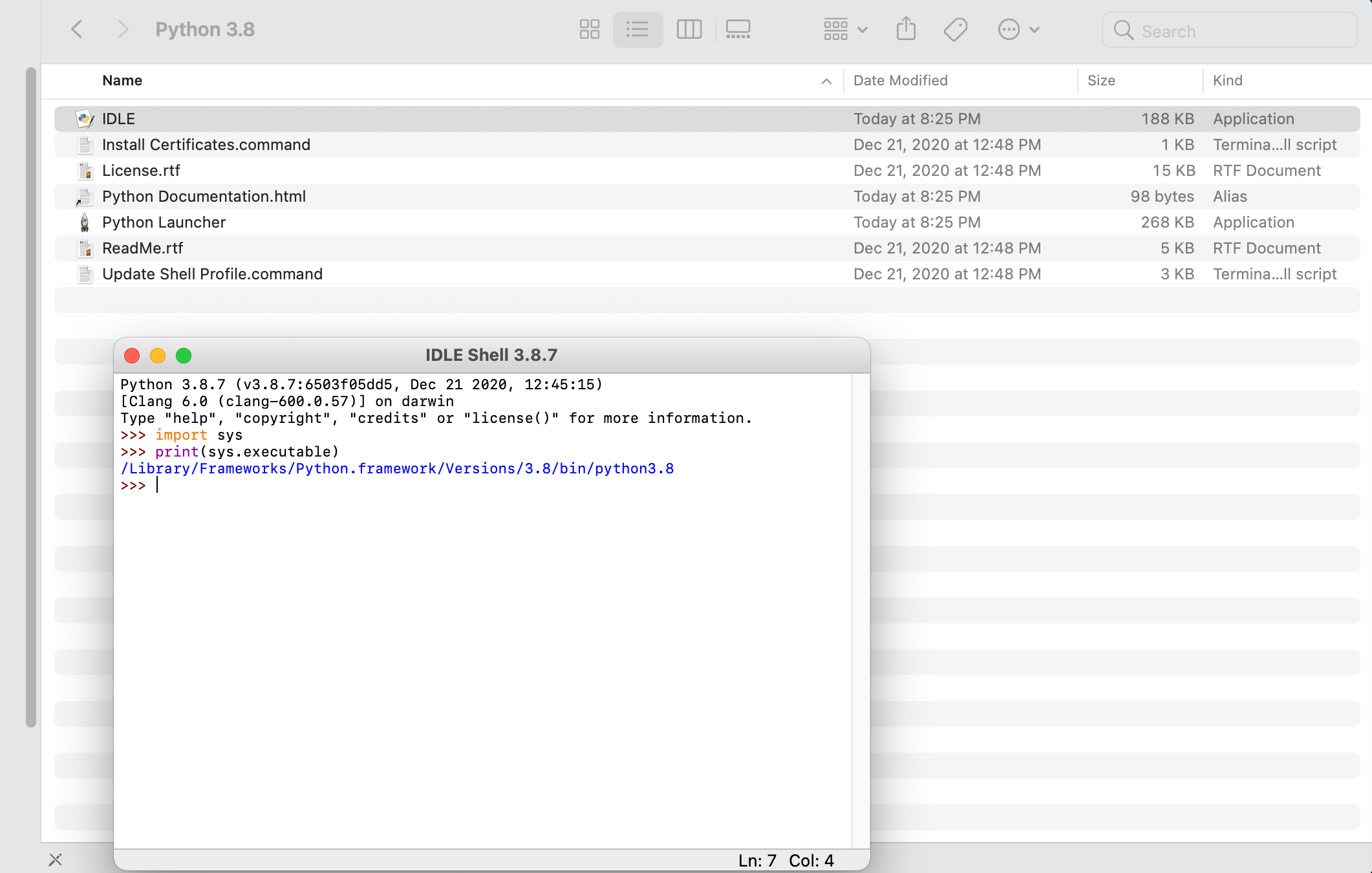

Once you have Python installed, go ahead and open the application and run it. On a Mac, you should find a Python 3.8 folder within your applications folder. If you run the IDLE app, a shell window running python 3.8.7 should open. Later you may need to know the path to your python 3.8.7 executable file, so go ahead and enter the following commands and copy the output.

import sys

print(sys.executable)

The interpreter should return the path to the executable file being used to run this version of python. Make a note of that path for later use.

Next proceed to install the Jetbrains IDE (integrated developer environment) that has been created for use with python called PyCharm. We are going to select the professional version, and then register with JetBrains as educational users, which should offer us nearly the full functionality of the IDE.

Once PyCharm is installed you should find it in your applications folder (Mac) or search for it in your Windows search box (bottom left-hand corner). In order to gain full functionality of PyCharm, you will want to register your product as a student license, which you should be able to accomplish from the "Welcome to PyCharm" screen. Before entering a new license for your PyCharm software you will need to create a JetBrains account. You can do so at the following website.

JetBrains Products for Learning

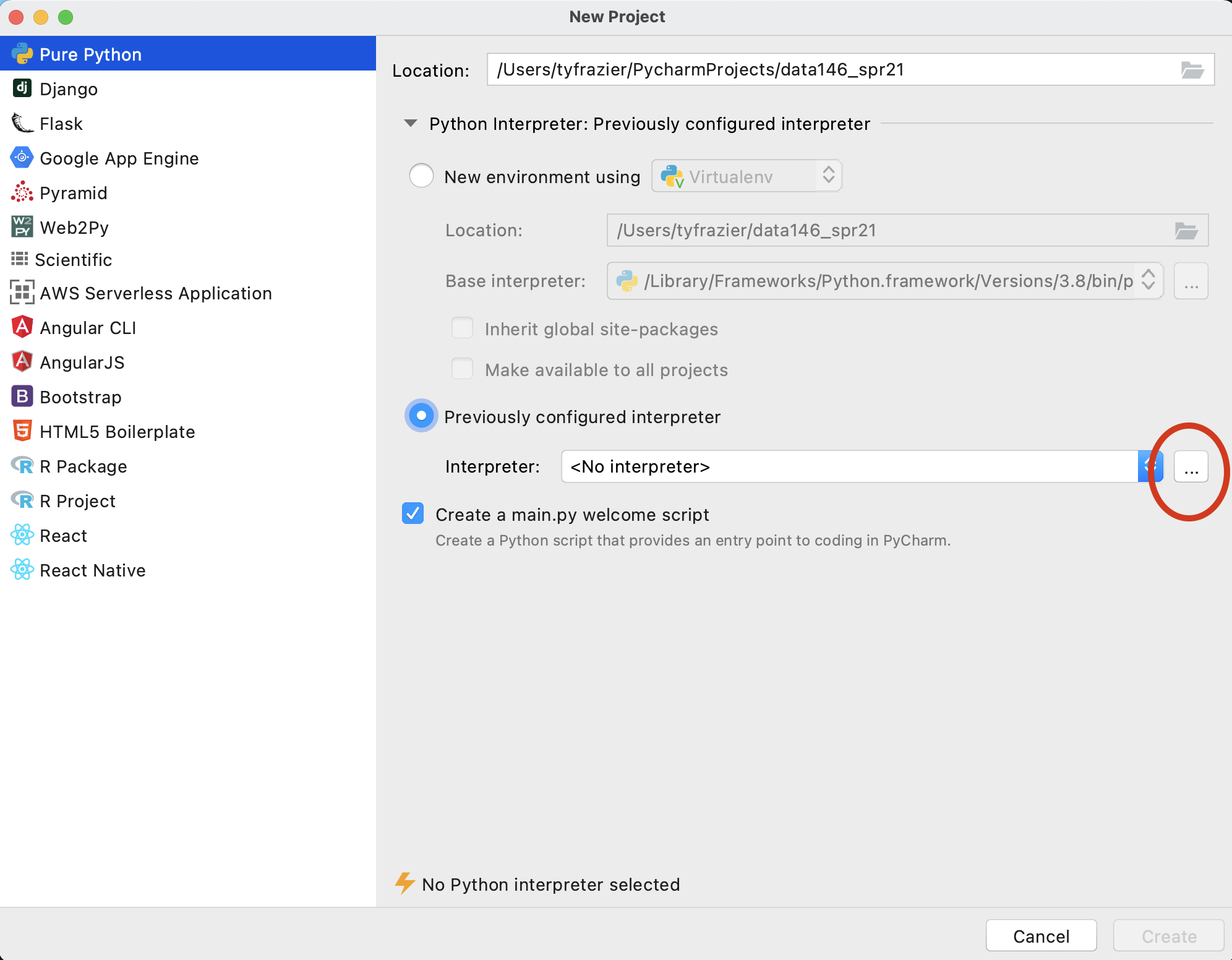

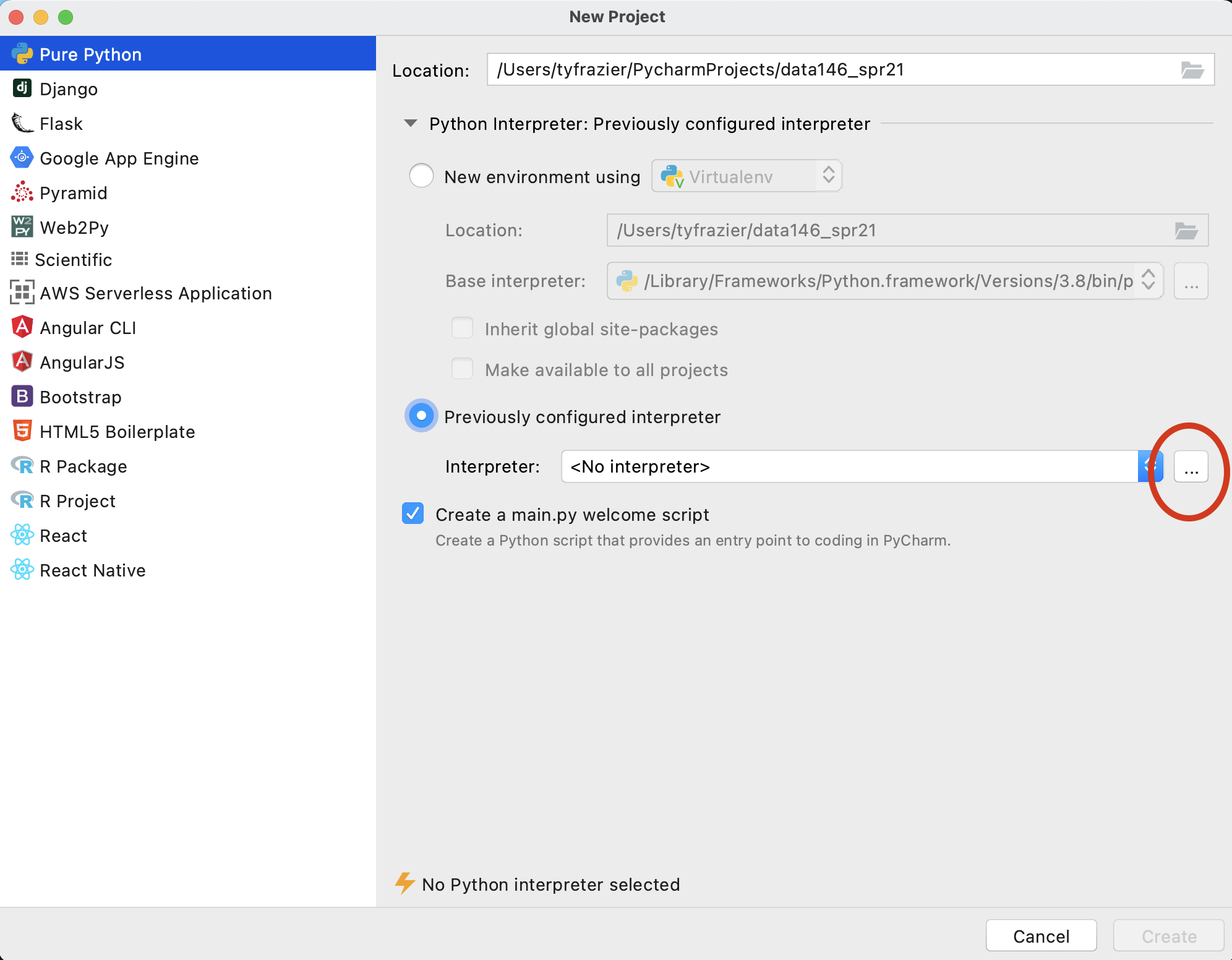

Once you have registered your product, from the Welcome to PyCharm screen, select New Project. You should see a window that gives you the option to name your new project and select an interpreter. Since you just installed python 3.8.7 we should find that interpreter and add it to your newly created project. To do this select "previously configured interpreter" and select the ... off to the right.

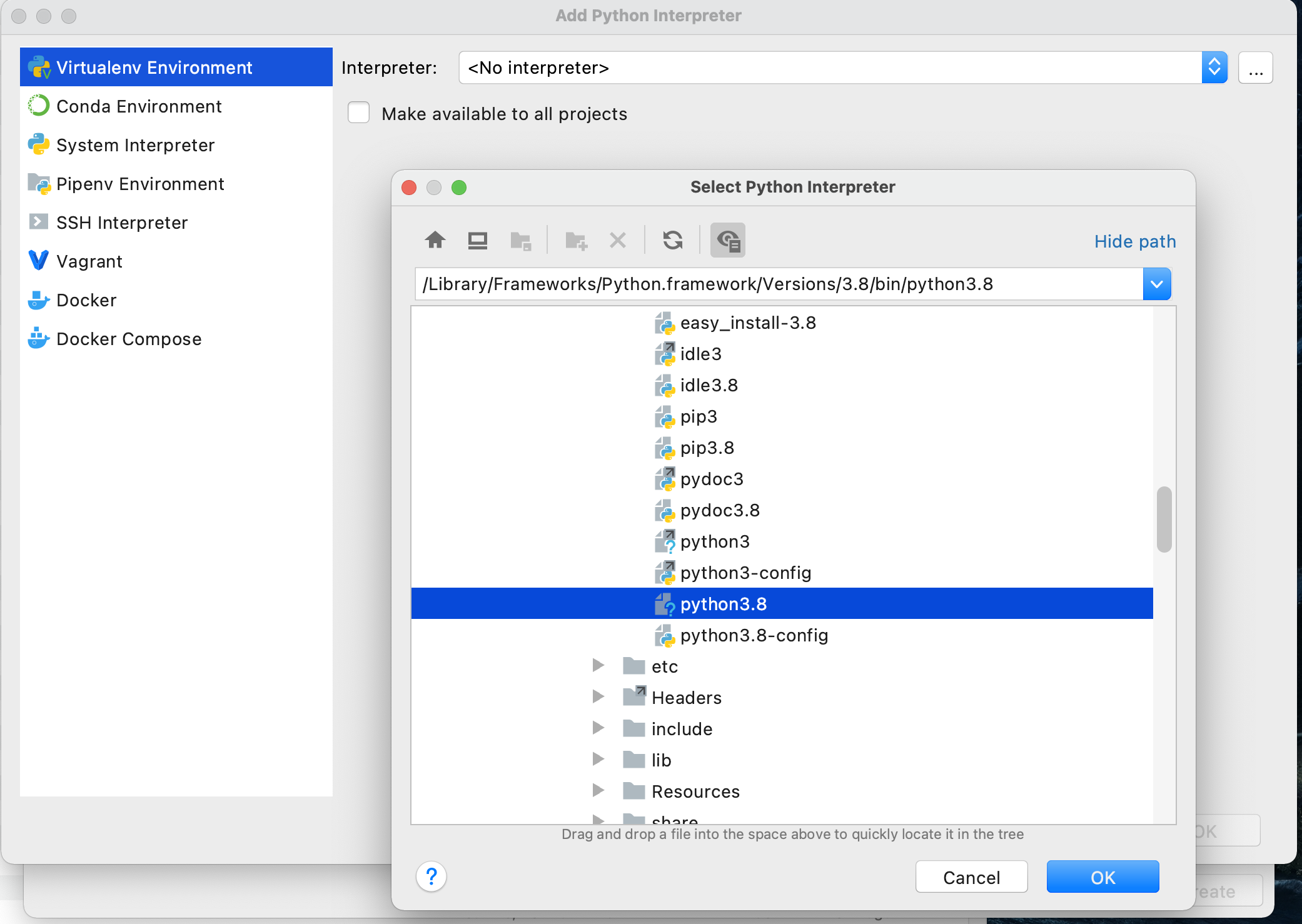

On this screen, again click the ... off to the right of the pull down to select your "Interpreter" in the "Virtualenv Environment" pane. Recall the path you previously recorded from when you initially installed python 3.8.7. Find this file using the "Select Python Interpreter" window and then select "OK".

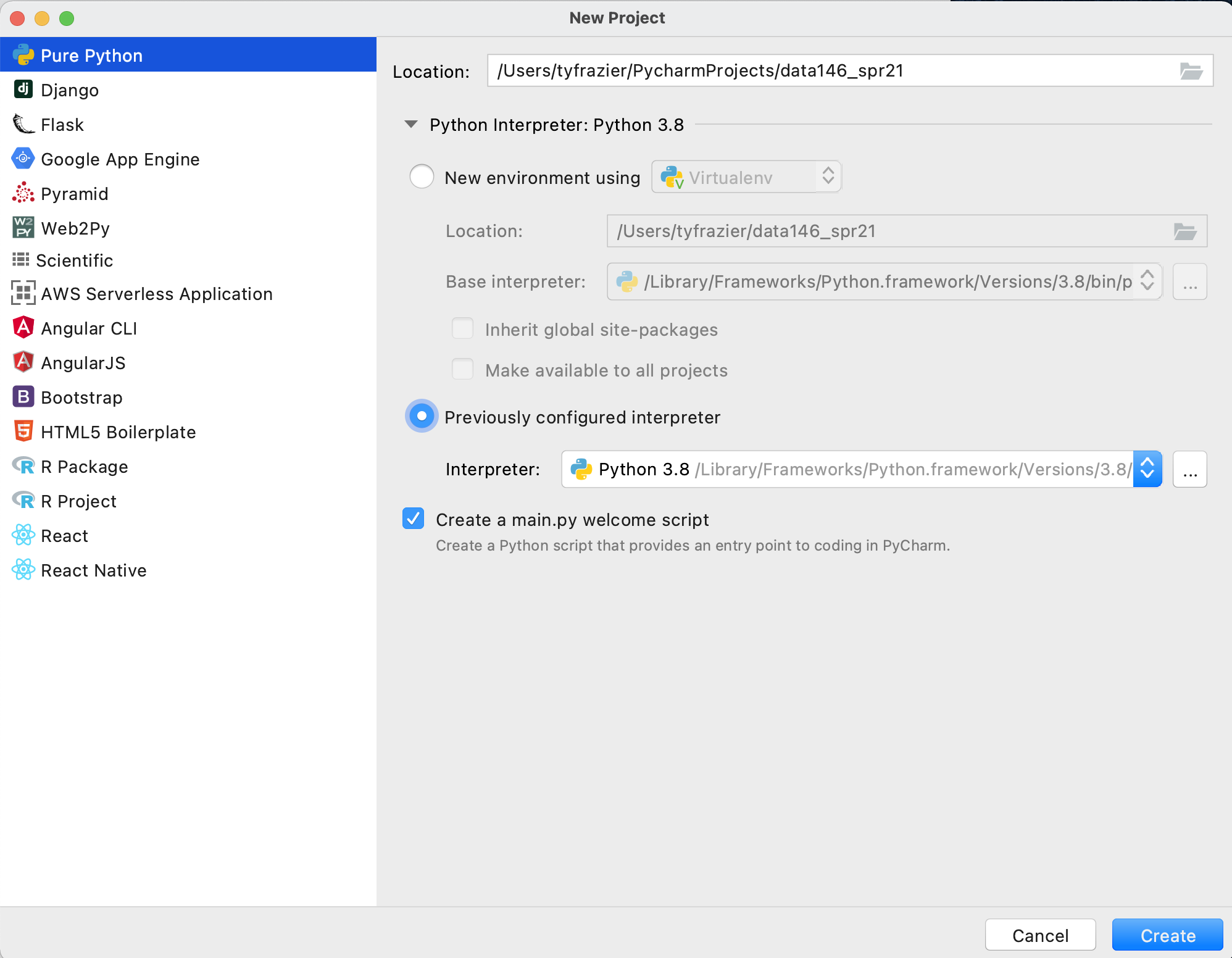

Upon completing the previous steps, you should return to the "New Project" window. Go ahead and create your new python project.

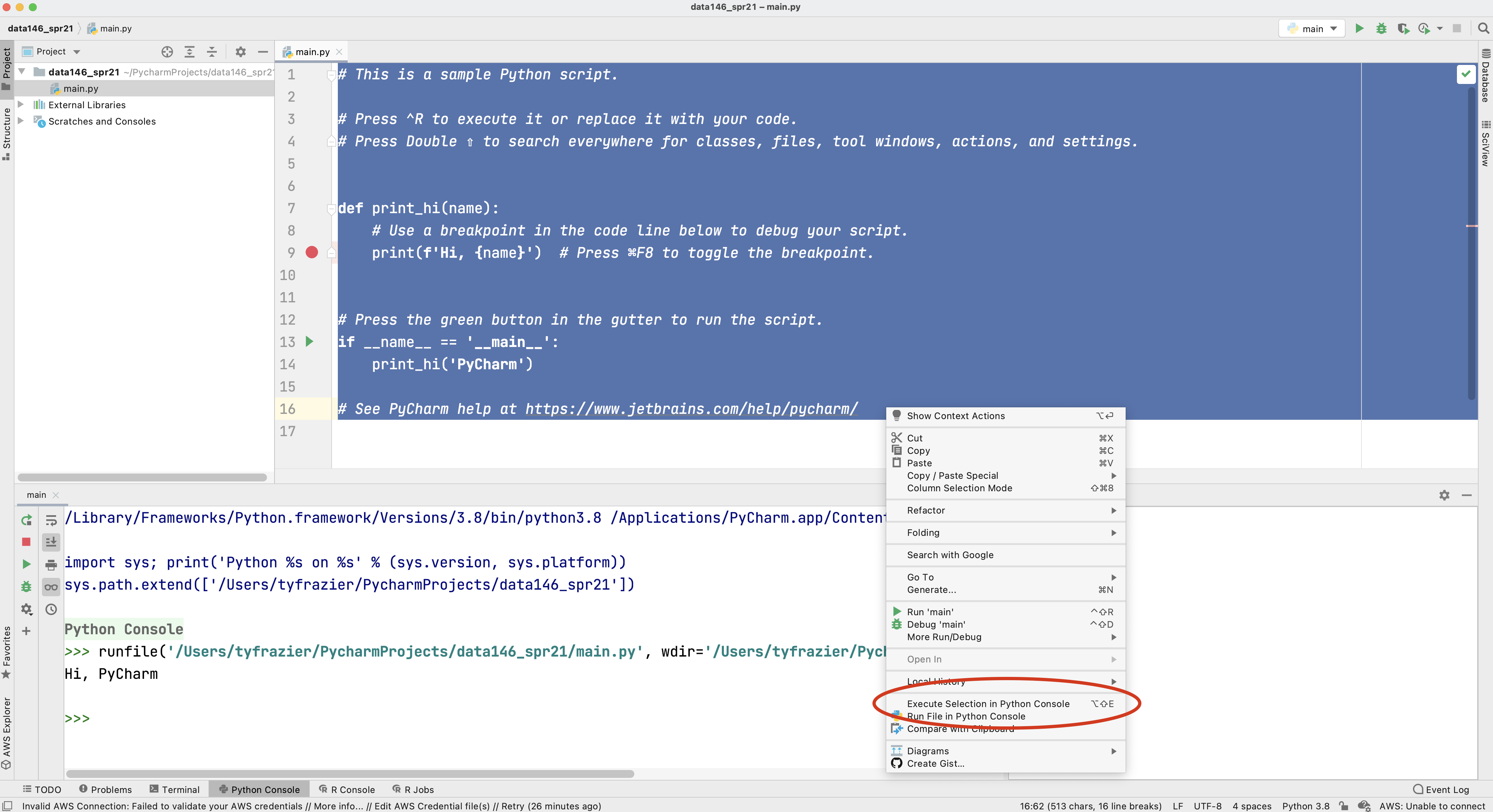

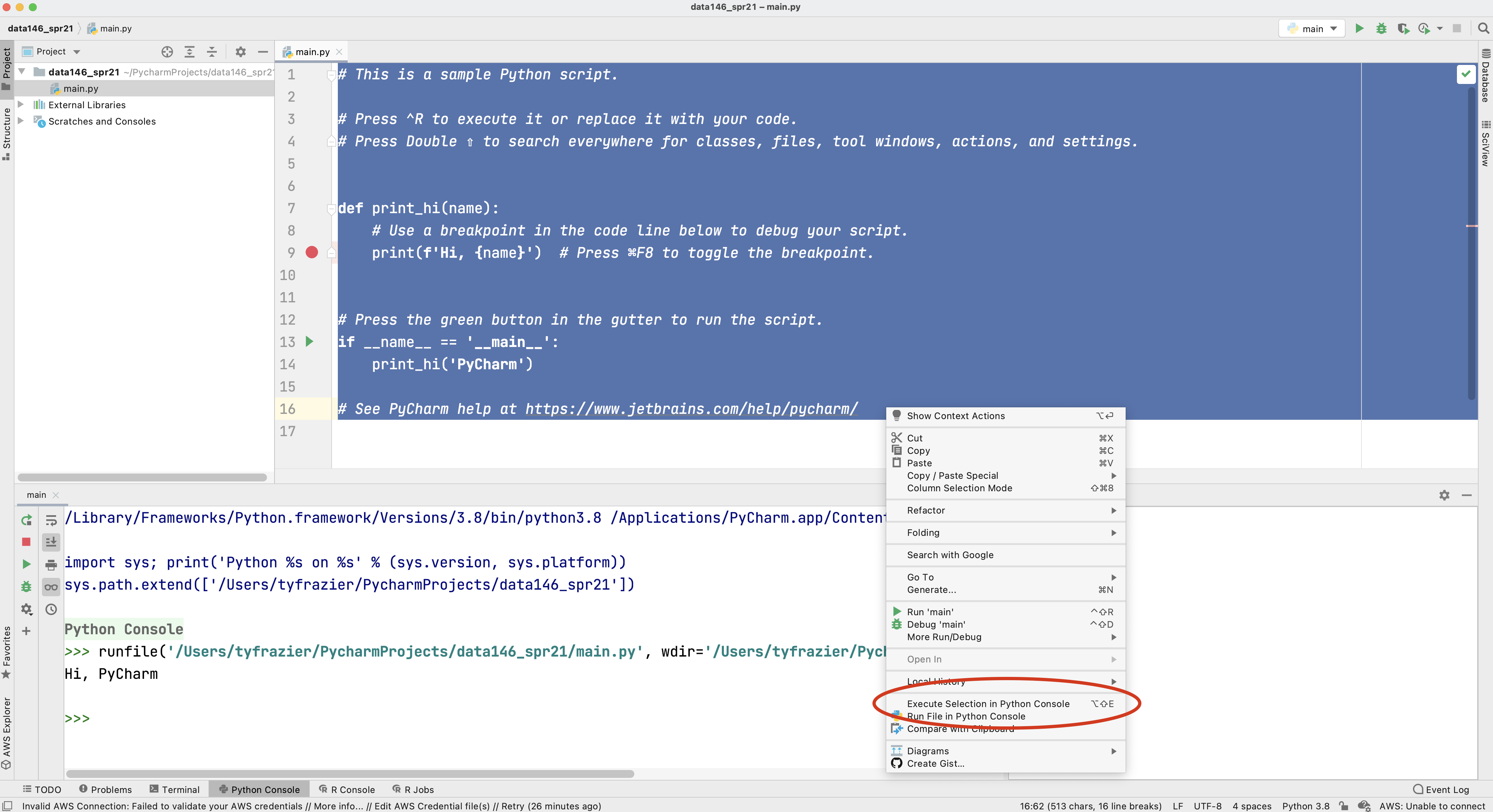

Once you have created your new project and designated the python interpreter, your integrated developer environment should appear. If you left the "create a main.py welcome script" check box selected, then you should also have a main.py script in the right pane of your console. To execute this script, select the entire code either by highlighting it with your cursor or using command-A, and then right click on the selected code select the option "Execute Selection in Python Console".

Alternatively you can also run your selected code by executing the option-shift-E combination of keys. If your console returns a "Hi, PyCharm" then you have successfully installed Python and PyCharm!

Remote access via William & Mary Virtual Desktop

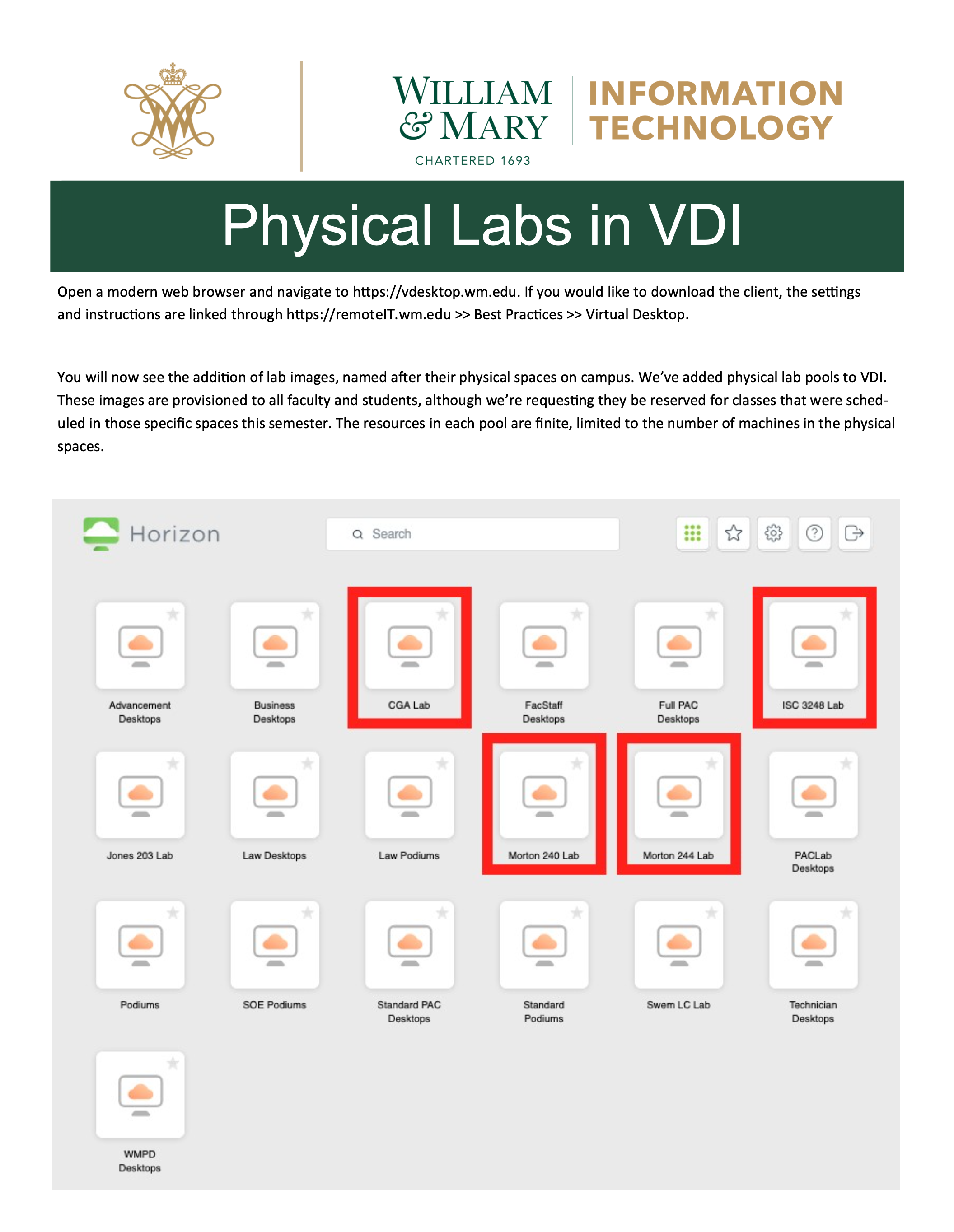

A good back-up option for running Python and PyCharm is to remotely access a laboratory computer. The William & Mary IT department offers virtual access to most of our physical labs using a virtual desktop.

Point your browser to the web address in the image above and follow the instructions in order to gain remote access to a lab computer on campus. The computers in ISC 3248 should have Python and PyCharm available for your use. For additional instructions please see the following link.

Also, if you wish to simply visit the computer lab in ISC3 #3248 (or elsewhere on campus) and open PyCharm on a local machine at that location that is also an option.

Remote access via Jupyter Notebooks or Google Colab

Jupyter notebooks employ a slightly different approach to running python or alternatively R or Julia. An advantage of using a Jupyter Notebook is that the installation process is typically removed from the local workstation and executed on a remote server. Project notebooks employ cells and integrated text that sequentially alternate instruction and execution of code in order to facilitate teaching and learning. Jupyter notebooks are a popular choice for running python, and we have a local server that is available for your use and can be found at the following web address.

Another possible option for remote python execution is Google developers free Jupyter notebook environment called Colaboratory or often just simply Google Colab. Like the jupyter hub installation, google colab also runs in a webbrowser and is accessed via the following address.

Both Jupyter notebooks and Google Colaboratory offer simple, remote interfaces that readily enable access to python from the outset.

One final note regarding jupyter notebooks. With your professional version of PyCharm, you should be able to run and modify .ipynb file types (jupyter notebooks) within your PyCharm IDE.

More advanced option: using a Package Manager

Package managers or package management systems are an effective way to keep track and automate the process of installing, upgrading, configuring and removing computer programs for a computer operating system. While there are several possible options available, there are two recommended options, depending on the operating system you are running on your local machine. The package manager itself, requires a bit more investment on the front-end in order to implement and understand, but ultimately in the long run, this investment can pay off with a more smoothly running operating system and encountering fewer technical complications and conflicts.

Installing Homebrew for Mac Operating Systems

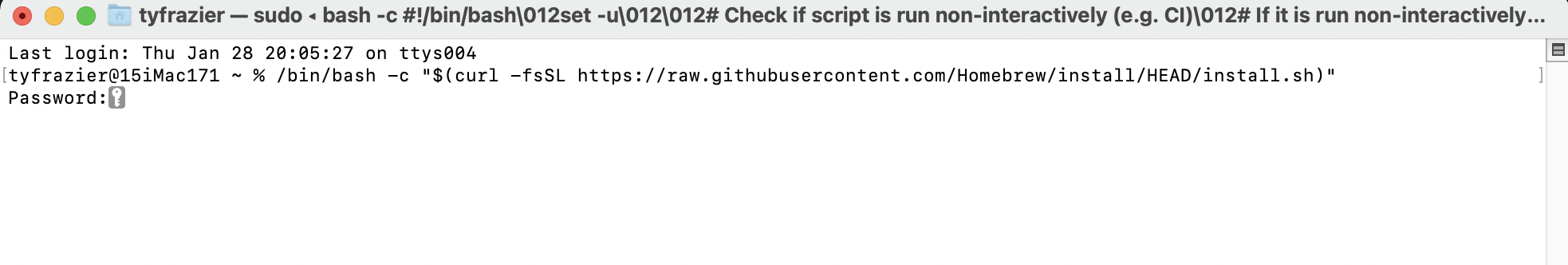

One of the most preferred Mac OSX package managers is homebrew. To install homebrew on your Mac, go to the homebrew webpage, copy the link beneath where it says "Install Homebrew" and then paste it into your terminal. Your operating system may ask you to provide your password in order to install the package manager with administrative privileges.

One of the first things you will want to do with your new homebrew installation is to run a few commands in order to get your system up to date, cleaned up and ready to go.

% brew upgrade

- Upgrades your homebrew installation to the current version

% brew update

- Updates the package installation formulas

% brew cleanup

- checks system links and removes them as needed

% brew doctor

- Checks the installation of your programs for possible configuration conflicts. Ideally this command returns "ready to brew" although some possible warnings can be laborious to investigate and fix, and may require further study for novices.

Once you have homebrew installed you can start by installing python. Go back to the main homebrew page and have a look at the [Homebrew Packages](Homebrew Packages), formulas that are available. Scroll down through the list until you find the formulas for python. The basic syntax for installing a package using homebrew is brew install [package name here].

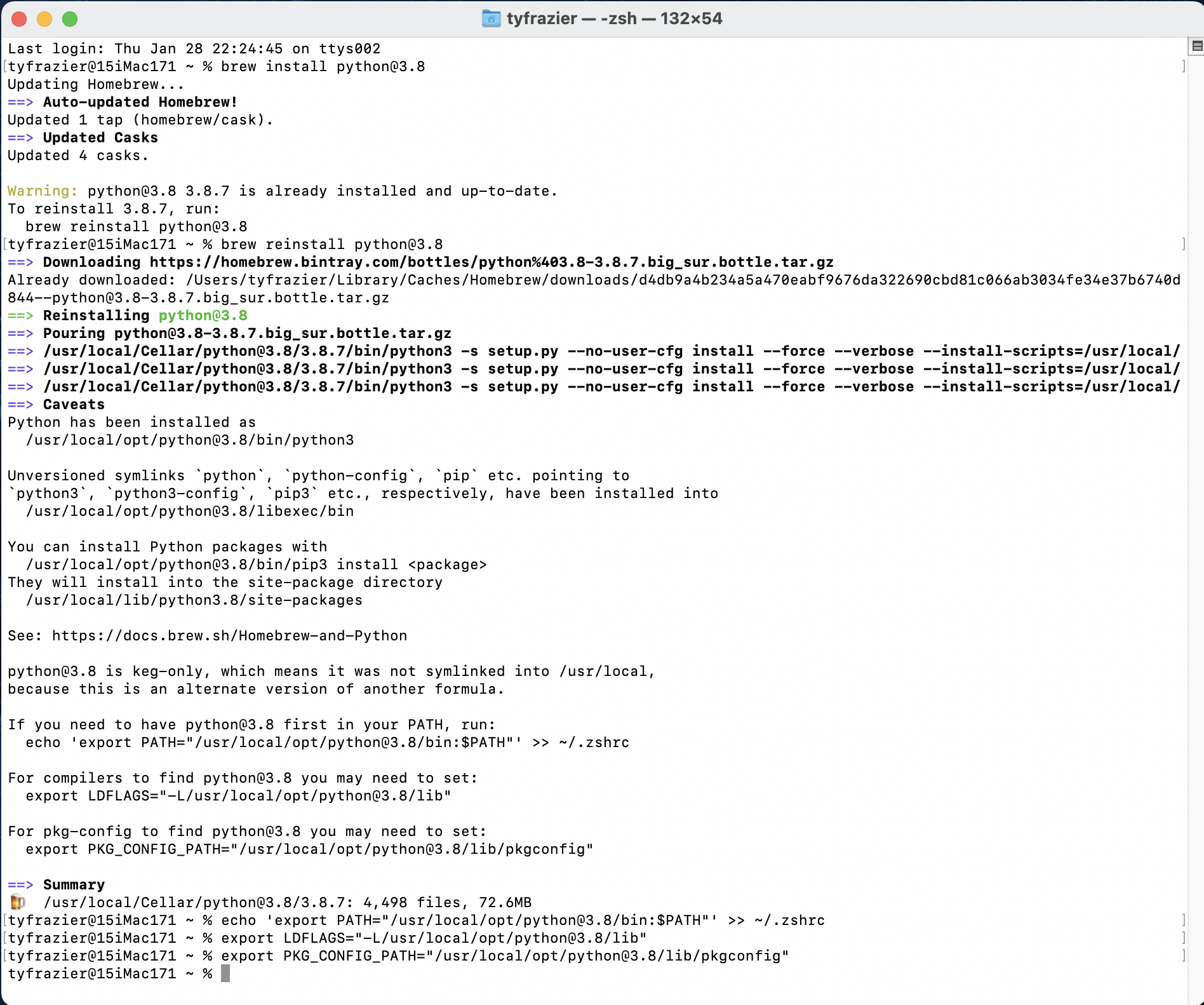

The latest version of python is 3.9.1, although if you are planning to use the tensorflow library of functions (to employ applied machine learning methods for example), at this time, the tensorflow library is only functional up to python version 3.8.7. I will start with the python version 3.8.7, which can be installed using the basic homebrew command. If you find the python@3.8 formula page, you can simply copy the formula to your clipboard and paste into your terminal.

brew install python@3.8

From the following image of my terminal you can see that I already have python 3.8.7 installed, but none the less, I have decided to go ahead and reinstall again. There are a number of symlinks that probably need to be addressed, which also show up when I run brew doctor, but I'm simply going to ignore these for the time being. At the end, I did go ahead and run the command that exported a path to my configuration file (in my case zshell) and likewise did for compilers and also package configuration. Just as you did above, you will want to identify the location of your newly installed python interpreter for use when creating your new python project and initially selecting an interpreter.

Once you have python installed, next you can proceed to install the Jetbrains IDE (integrated developer environment) that has been created for use with python called PyCharm. We are going to select the professional version, and then register with JetBrains as educational users, which should offer us nearly the full functionality of the IDE.

brew install pycharm

Once you have installed PyCharm, you should be able to find it in your applications folder. You are welcome to go ahead and open it and look for the add license tab. Before entering a new license for your PyCharm software you will need to create a JetBrains account. You can do so at the following website.

JetBrains Products for Learning

It might take a few minutes to complete the registration, but once finished you should be able to enter your e-mail and password in order to activate PyCharm. Once you have activated your software, and started up PyCharm, you should see a "Welcome to PyCharm" window where you can open a new project. Go ahead and select "new project." Following this step, you should see a window that has a similar appearance to the following image. Choose "pure python" and give your project a name there it will be located within your PycharmProjects home folder. I will assign the name of my course and select the "virtual environment" and python@3.8 as the base interpreter for this newly created project.

Once you have created your new project designate the python interpreter. Rather than using the full path to the executable you should be able to locate your interpreter in its system folder. On my Mac, there are separate interpreters for both python@3.9 and python@3.8 within the /usr/local/bin/python3 folder. You can create two separate virtual environments within your PyCharm IDE and select per project as needed. After selecting your interpreter PyCharm should appear.

If your PyCharm IDE appears similar to the previous image, you are pretty much ready to get started using python!

Installing Chocolatey for Windows Operating Systems

Chocolatey is a relatively new package manager that is similar to homebrew but is designed for use with the Windows operating system rather than a Mac. To install chocolatey on your Windows computer go to the Chocolatey installation webpage and follow the instruction under "Step 2."

Chocolatey functions in a manner very similar to homebrew (as described in the previous step), although instead of entering your commands at the terminal of a unix shell, on a windows system you will enter the installation command using the powershell. Copy the command using your clip board and paste it into your powershell.

Once you have the Chocolatey package manager in place, you should be able to begin installing software using the chocolatey formulas. Have a look at the python 3.8.7 formula page and copy the formula onto your clip board and then enter it into your powershell.

choco install python3 --version=3.8.7

Which should effectively install python to your system.

Again, in a manner that is quite similar to applying a homebrew installation formula, go ahead and install the PyCharm IDE using that particular formula.

choco install pycharm

Once you have installed the PyCharm IDE to your operating system, follow the instructions above under the homebrew installation in order to register with Jetbrains and to obtain a student educational license. After licensing your product, go ahead and create a new project, select a virtual python interpreter and open your PyCharm IDE!

Getting Started with RStudio & R

Starting RStudio

Once you have finished the installation process, run the RStudio IDE, which will automatically find R on your computer. Find the application RStudio on your computer. The RStudio executable should be located in the applications folder on a Mac. Once running the RStudio application on a Mac it is often helpful to keep the application icon in the dock, which is the bar of applications that exists along the bottom of your computer desktop screen. On Windows, if you select the Window icon in the bottom left corner and begin typing RStudio, you should see the application icon appear. On a Linux system, the application should appear in one of the OS drop down windows. Choose the application icon and open it.

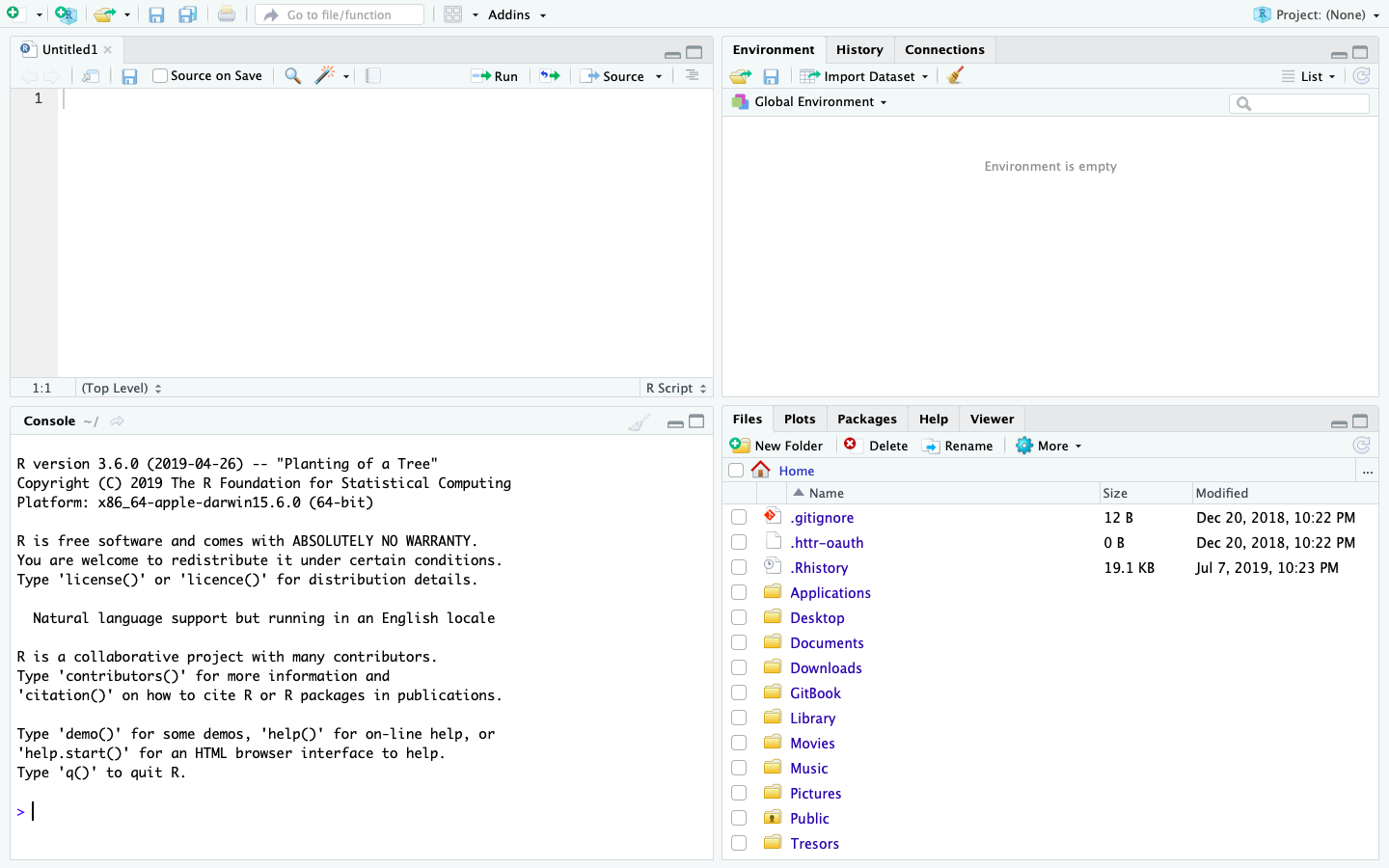

If both R and RStudio were properly installed, then the start up for RStudio should appear something like the following image.

One of the first things to note at start up is the bottom left hand pane, which is essentially a window to the R interpreter. RStudio reports the version of R that has been installed on your computer. Next go to the pull down menu for File > New Script > R Script and select that option. This will create a new R Script that will appear in the top left pane of your R Studio IDE. One can think of the script as the location where all computer code will be written and saved, in a manner somewhat analogous to writing a letter or essay with a work processor. Below the script in RStudio is the console or the location where your commands are sent and responses from R will be returned. You can think of the > symbol in the console as R somewhat figuratively waiting for your command and subsequently also the location where R will respond.

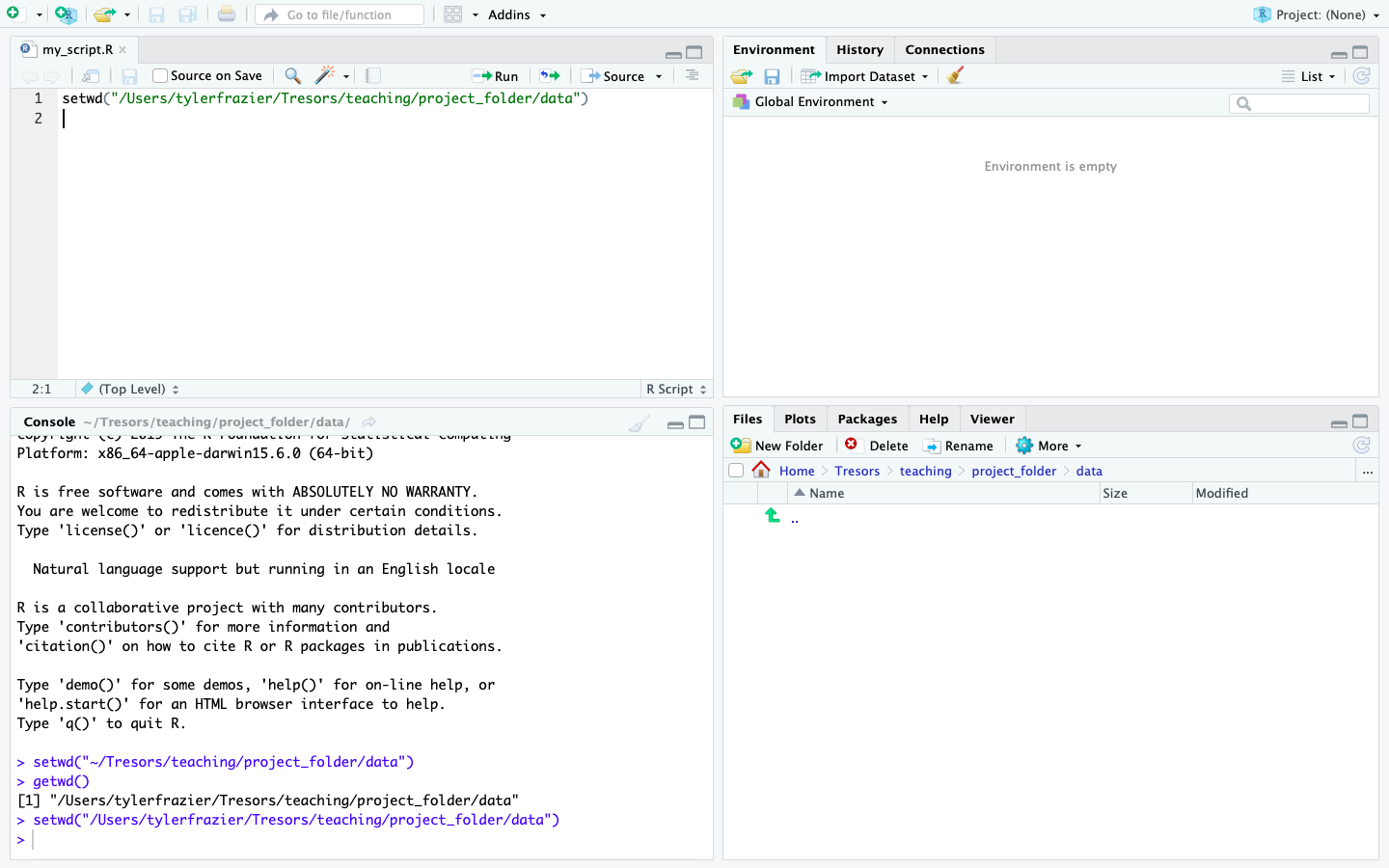

Since you now have a script file where you will save your first R commands, you should also have a working director where you will save your my_first_script.R file. At this point you should minimize RStudio, and return to your file explorer or finder and create a folder that you will use to save your script, output as well as any files you may import to R. Generally, I create a project specific folder and then within that folder I begin with two subdirectories, one that is dedicated for storing data and a second one that is dedicated to saving my scripts. After you have created your project folder, return to RStudio and then select Session > Set Working Directory > Choose Directory from the drop down menu. Choosing this command will result in a file explorer window appearing in order for RStudio to select the working directory for your work session. The working directory is the default location where R will automatically look in order to import or export and data. Go ahead and select the data subdirectory you just created within your project folder. Upon selecting your data folder, you should notice a command appear within the console pane in the bottom left hand corner of RStudio.

setwd("~/my_folder/my_project_folder/my_data_folder")

By using the RStudio IDE GUI you have just executed your first command. You can confirm that the command was properly executed from within the console by typing the following command directly in the console.

getwd()

You should notice that R returns the path from the working directory you just designated. Instead of setting your working directory using the drop down menu, the preferred method is to designate that path by using code in R. Fortunately, RStudio has already specified the command for you, so just go ahead and copy the setwd() command from above and paste it into your script file in the top left hand corner of your RStudio work session. You could also retype it, but in general, copying and pasting is going to be much more efficient. On a Mac copying and pasting is accomplished by using the ⌘C & ⌘V keys or on Windows control-C and control-V. **** After copying and pasting that line of code within your script, go ahead and execute the function again, except this time, send the command directly from your script to the console. To do this use, move the cursor to the line where you pasted the code and then select ⌘return on a Mac or control-return on Windows. Congratulations! You have just written and executed your first line of code.

Now that your script has content, you should save the file. Select the >File>Save command from the drop down window and choose the data subdirectory you created within your project folder. Name your script file and then select save. Your RStudio work space should appear similar to the following image.

One noteworthy observation regarding the command setwd(), notice how the path to the working directory is specified within quotation marks. In general, whenever RStudio is communicating with your operating system (OS) or any entity outside of its workspace, what ever is being sent to that computer will be included within quotation marks. For example setwd("the/path/to_my/working/directory") is contained within quotation marks in order for RStudio to traverse the path as defined by your OS to that location on your computer.

description: >- In this exercise you will learn how to create vector and data frame objects, use the sample function and generate a plot that includes different types of squares, circles and lines.

Creating and Plotting Objects

Creating an object & creating a plot

Since you have already set your working directory in the previous step, now you can create your first object. Do so by writing the following command in your script.

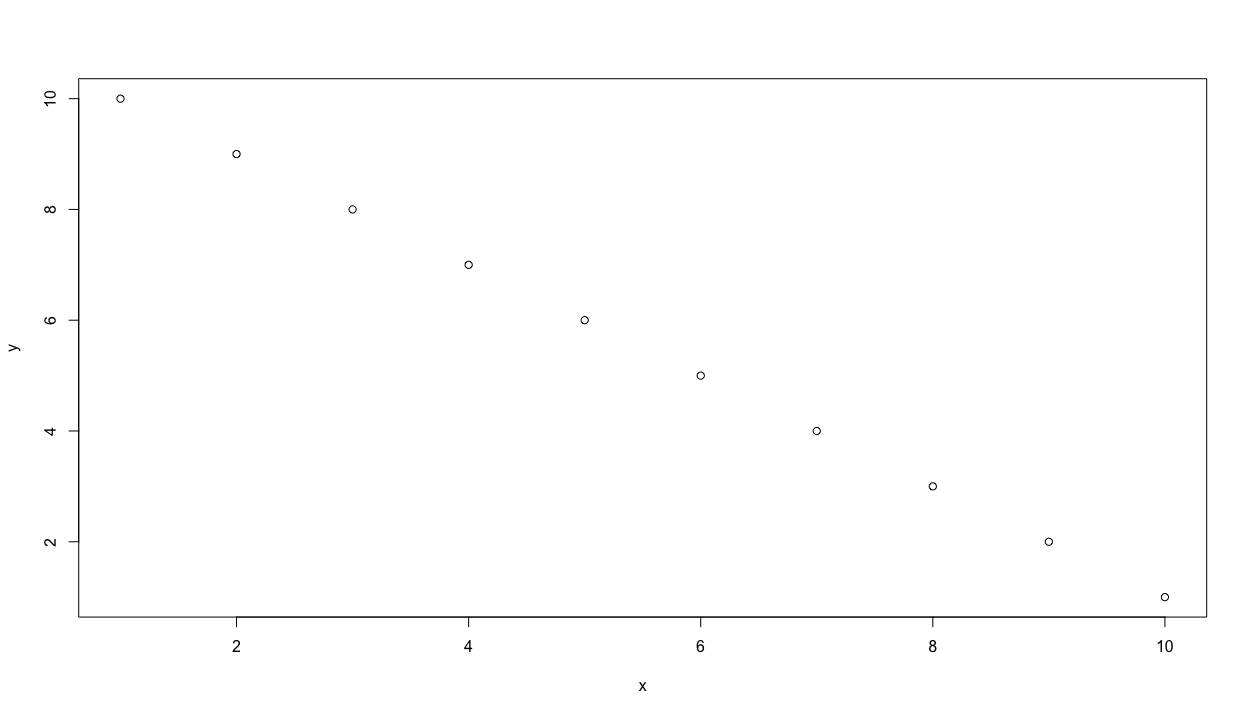

x <- 1:10

There are essentially three parts to this command. First take note of the <- symbol, which is often called the assignment operator. The <- operator will function by assigning everything on its right hand side to the newly named object on the left. For example, if you entered the command t <- 1 and then typed t directly in the console and pressed return, R would inform you of the value of t, which would be 1. After creating and defining x do the same thing for y but this time start with the highest value and decrease sequentially to 1.

y <- 10:1

Now lets move to the console and ask R a few things directly. Sometimes we want to save our script, while at other times we just want to ask R a quick question. First lets ask R to list all the objects that exist in our workspace at this point in time. We use the ls() command to list all objects that exist in our workspace.

[1] "x" "y"

Let's also ask R to tell us more about the two objects we have created and placed in our workspace. Go ahead and type x and then y directly into your console and consider the output.

x

[1] 1 2 3 4 5 6 7 8 9 10

y

[1] 10 9 8 7 6 5 4 3 2 1

We can see that our earlier use of the colon in x <- 1:10 created an object named x that contains each whole number in sequence from 1 to 10, while y likewise did the same except in reverse. Also by simply typing the name of the object, R reveals to us everything it knows. Since we have two objects of equal length, lets plot x & y together.

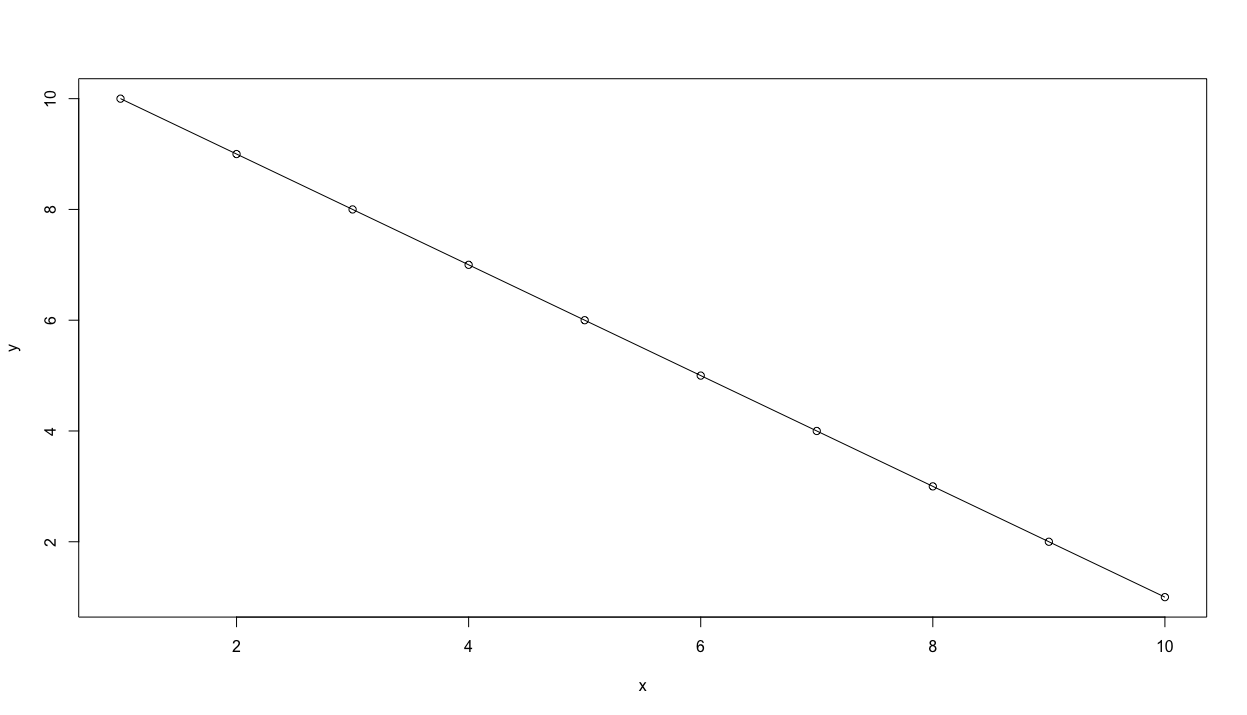

plot(x,y)

We can continue to describe our plot by adding an argument to our command by specifying the plot type as a line and not simply points

plot(x, y, type = "l")

or alternatively a plot with both a line and points over that line.

plot(x, y, type = "o")

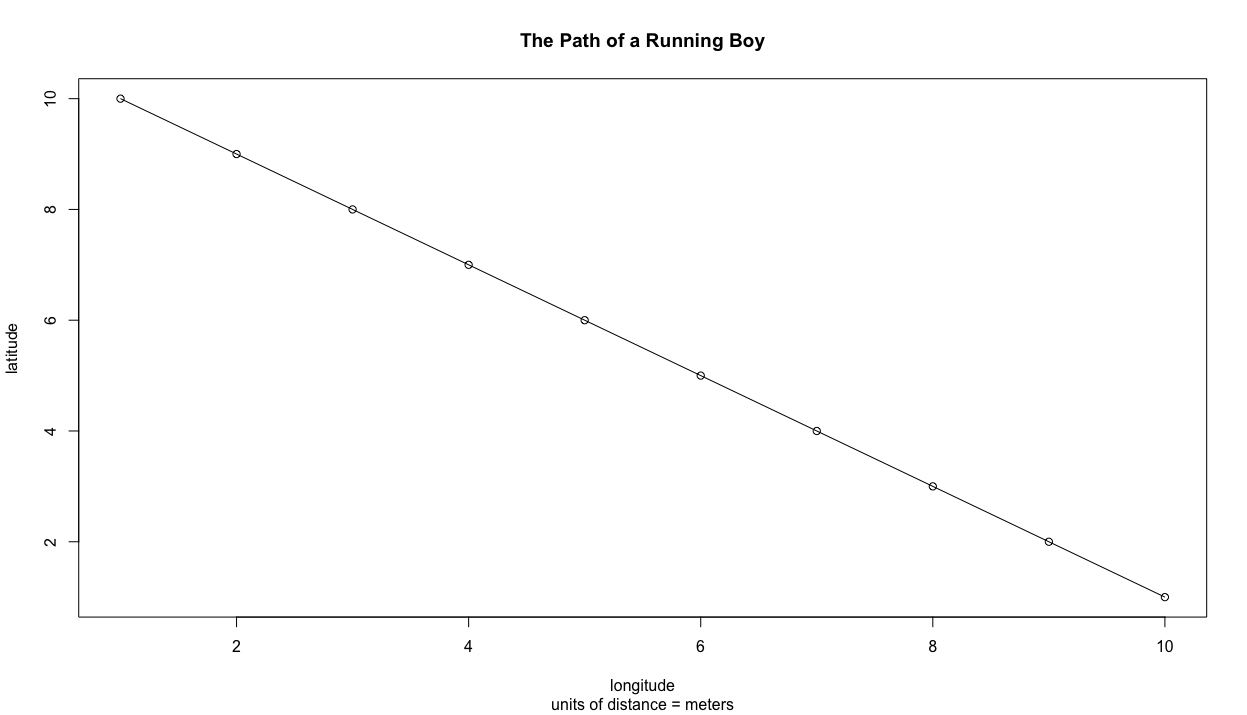

We can also add some description to our plot in order to better communicate our results. We can begin by adding a title, indicating the units of measurement while also adding labels for both the x and y axes.

plot(x, y, type = "o",

main = "The Path of a Running Boy",

sub = "units of distance = meters",

xlab = "longitude",

ylab = "latitude")

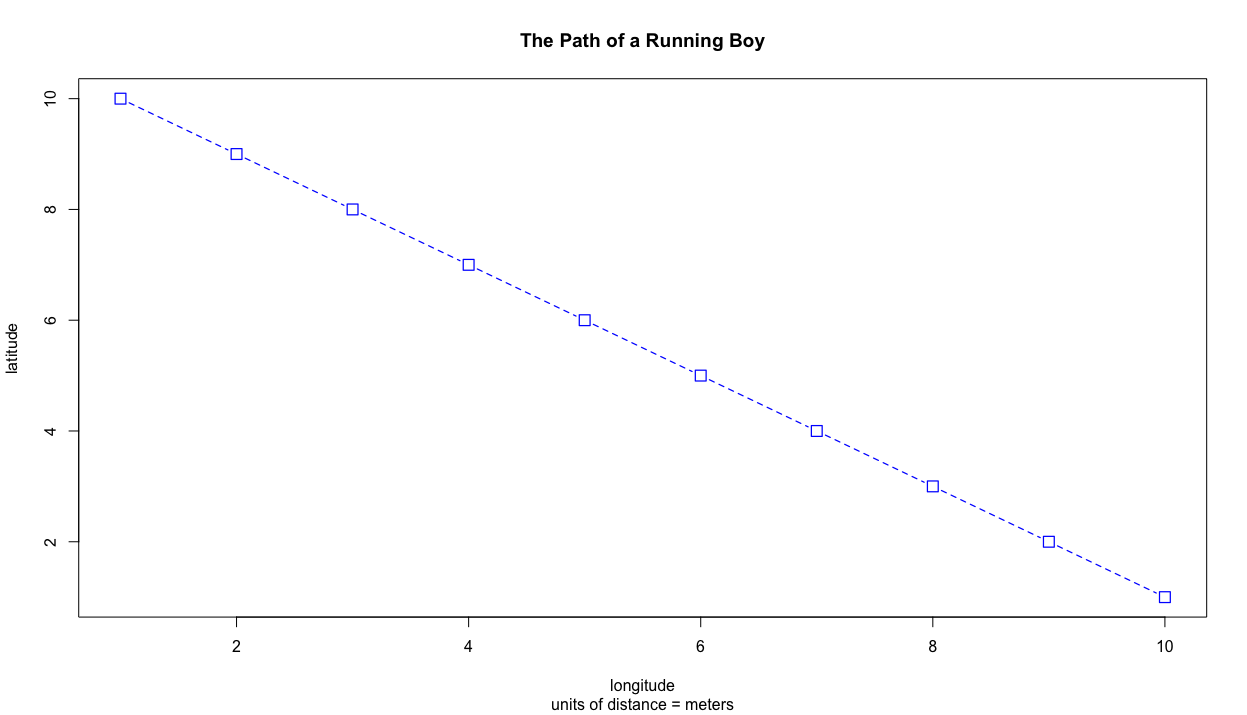

We can also change the linetype by specifying the lty = argument or set the lineweight by using the lwd = argument. The color of our line can be changed using the col = "some_color" argument, while the point symbol itself can be modified by using the pch = argument. Scale of the symbol is increased or descreased using cex =. Have a look at the Quick-R website for a comprehensive list of some available graphical parameters.

plot(x, y, type = "b", main = "The Path of a Running Boy",

sub = "units of distance = meters",

xlab = "longitude",

ylab = "latitude",

lty = 2,

lwd = .75,

col = "blue",

pch = 0,

cex = 1.5)

Creating a More Complicated Plot while also creating and then using a Data Frame

Now lets make your plot a bit more complicated than simply a line with points. First increase the scale of our plot area by increasing the range of values for both the x & y axes.

x <- 1:100

y <- 1:100

Now instead of using those values, let's randomly select from both x & y in order to produce a random series of x & y coordinates.

east <- sample(x, size = 10, replace = TRUE)

north <- sample(y, size = 10, replace = TRUE)

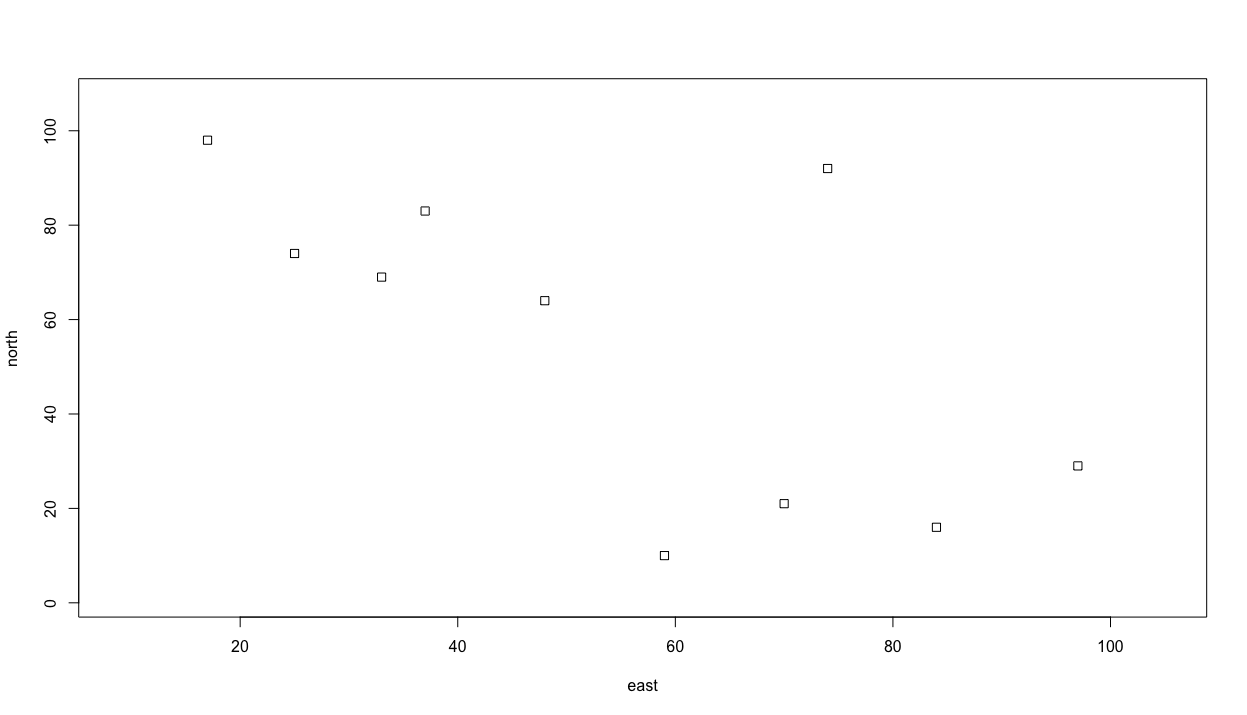

The above command sample() will randomly select in a uniform manner, one number from x and then also y, 10 times, creating the vector objects east & north. I have also included the replace = TRUE argument, such that each time a number is selected, it is returned and potentially can be selected again in the next draw. Now, lets take each value and use it as the coordinates for the center point of a number of squares. We will use the symbols() command in order to add additional specifications to our command.

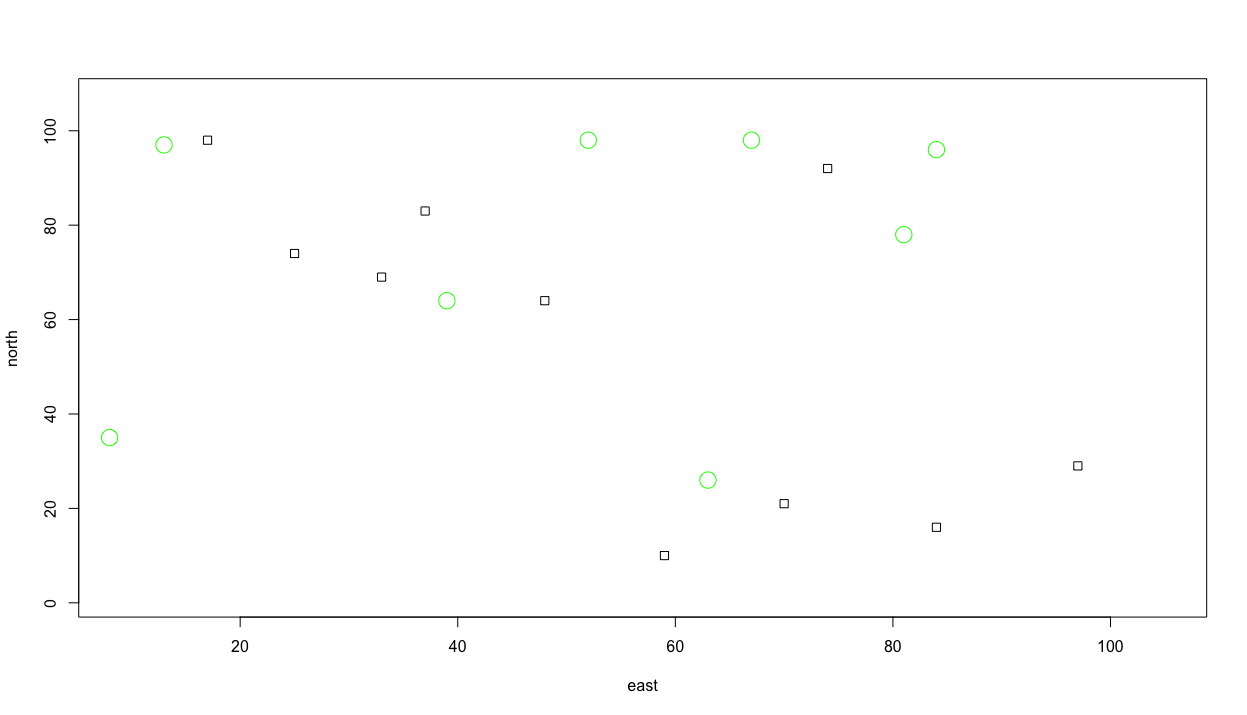

symbols(east, north, squares = rep(.75,10), inches = FALSE)

Following is one possible outcome produced by the randomly produced coordinates. While the squares produced in your plot will be in different locations, the number of squares as well as the size of each, should be very similar. Lets also consider the additional arguments in the symbols() command. In the squares = argument within the command, I have also used the rep() function, which will repeat the length of each square, .75 in this case, 10 times, or 1 time for each square. I have also added the inches = FALSE argument so the units are considered to be similar to the axes.

Now lets add some circles to our plot. This time, instead of assigning an object a permanent value by randomly selecting from a series of numbers, lets randomly select values as part of creating the plot with the symbol() function.

symbols(sample(x, 10, replace = TRUE),

sample(y, 10, replace = TRUE),

circles = rep(.75,10),

inches = FALSE,

fg = "green",

add = TRUE)

Where as before I created two objects and plotted their values as x & y coordinates, this time I have nested the sample() command within the symbols() function, in the place where R is looking for the x & y value coordinates. In this manner, each time I execute the command, 10 circles will be randomly placed throughout the defined area, each with a radius of .75. I have also included the add = TRUE argument within the command, in order to add the circles to our previous plot of square. The fg = argument permits us to select a color for each circle.

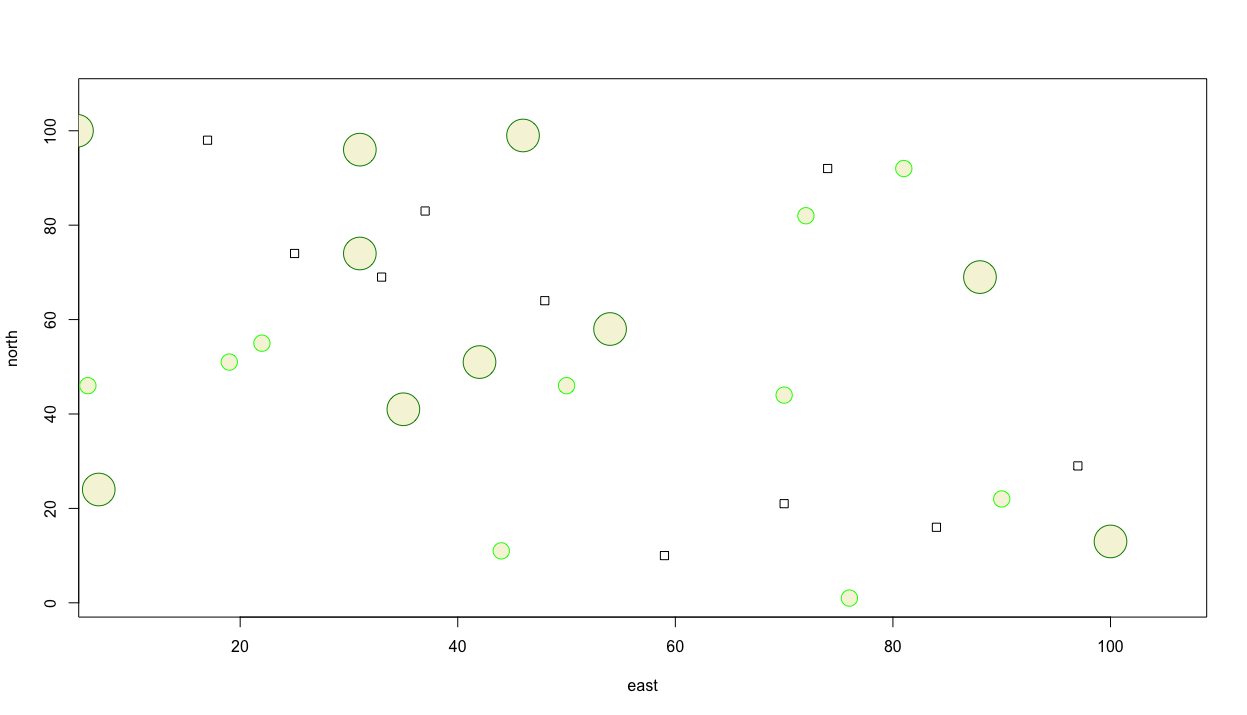

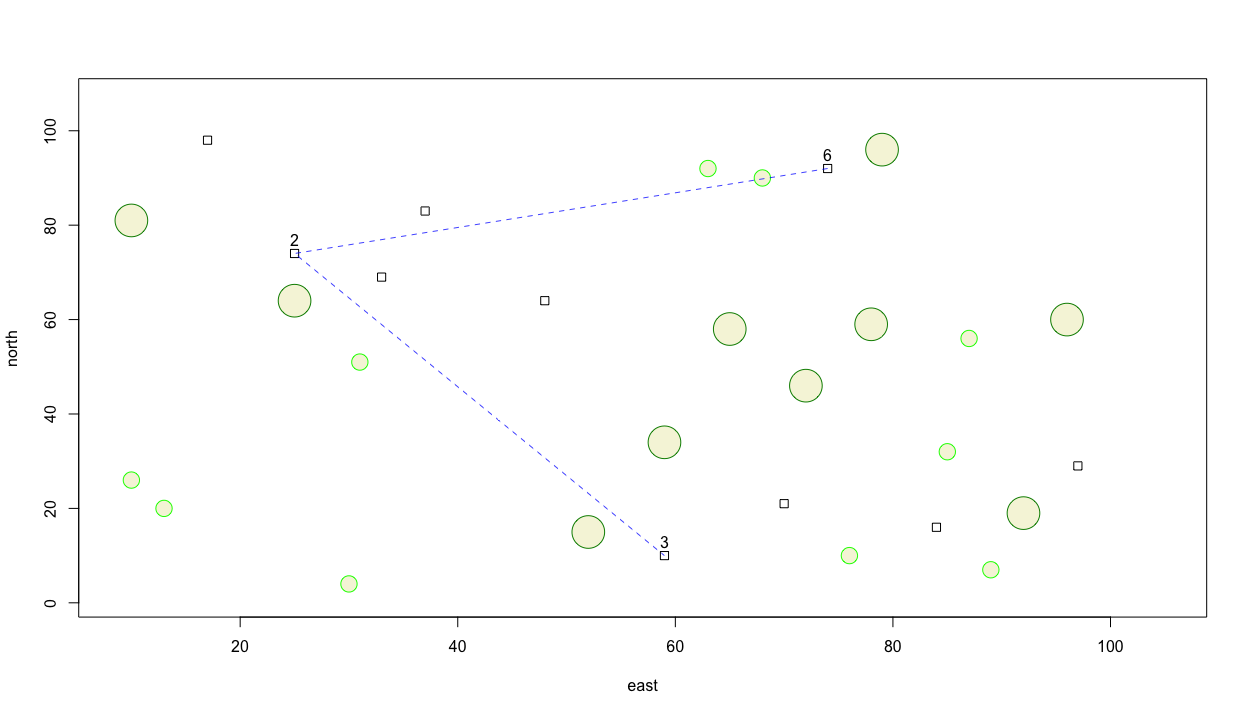

Let's also add some larger trees and specify their color as well. Again we will randomly place them while using the add = TRUE argument so they are added to our previous plot. Also, consider a wider range of colors to use as the outline for each circle, while also filling each circle with a color. In order to determine how to fill the circle with a color, use the ? followed by the command you are interested in learning more about in order to view all of the available options. In this case you can type ?symbols directly in the console in order to see all of the arguments possible. If you scroll down in the help window, you will see that fg = is used to specify the color or your symbol border, while bg = is used to indicate the color for your symbol's fill. You may also be interested to know which colors are available to select. In order to review a list of all available colors, simply type colors() directly into your console. Running the following chunk of commands will then produce a plot similar to the following image.

symbols(east, north, squares = rep(.75,10), inches = FALSE)

symbols(sample(x, 10, replace = TRUE),

sample(y, 10, replace = TRUE),

circles = rep(.75,10),

inches = FALSE,

fg = "green1",

bg = "beige",

add = TRUE)

symbols(sample(x, 10, replace = TRUE),

sample(y, 10, replace = TRUE),

circles = rep(1.5,10),

inches = FALSE,

fg = "green4",

bg = "beige",

add = TRUE)

Thus far we have only created R objects that are of the vector class. We can review the class of one of the objects we have created by typing class(east) directly into the console and observe that R informs us that the object is a vector of integers. Now let's create a new class of an object called a data frame that contains a series of rows and columns where each row represents an observation while each column represents a different variable. We can start with the coordinates that represent the center point of each square.

dwellings <- cbind.data.frame(id = 1:10, east, north)

In this case, we are using the cbind.data.frame() command to column bind together the newly created variable named id with our two integer vectors east & north into a newly formed data frame named dwellings. After executing the above command, you can type the name of your data frame directly into the console to review its content. Within the environment pane in the top right hand window, under the data tab, you can also use your mouse to click on the data frame symbol that is off to the right of the dwellings data object.

| id | east | north | |

|---|---|---|---|

| 1 | 1 | 48 | 64 |

| 2 | 2 | 25 | 74 |

| 3 | 3 | 59 | 10 |

| 4 | 4 | 37 | 83 |

| 5 | 5 | 97 | 29 |

| 6 | 6 | 74 | 92 |

| 7 | 7 | 84 | 16 |

| 8 | 8 | 17 | 98 |

| 9 | 9 | 70 | 21 |

| 10 | 10 | 33 | 69 |

You'll notice that R also provides row numbers that in this case are identical to the identification number we have assigned to each square. Instead of assigning our id variable manually, we could have just as easily used id = row.numbers(dwellings) in order to achieve the same result, if the object dwellings already exists.

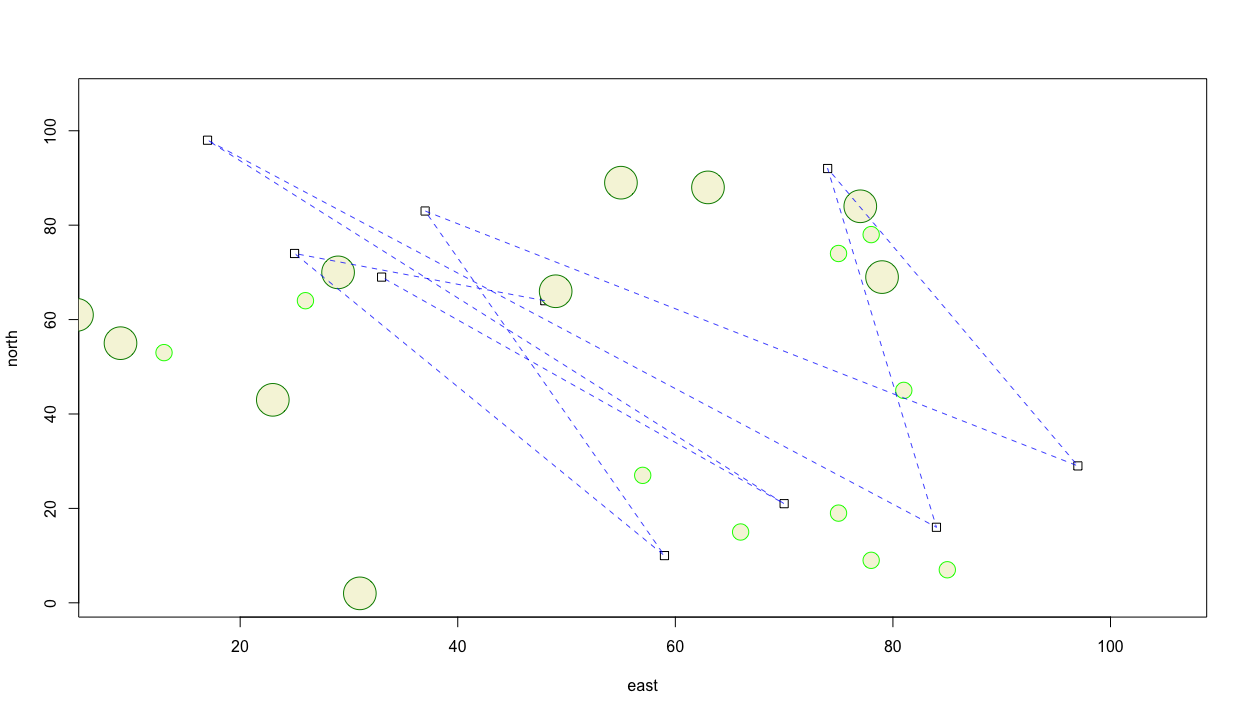

Now let's add a line that represents some type of transportation activity between each of the different dwelling units we have represented within our plot as squares. We can add lines to the plot with the lines() command. In order to identify the beginning and ending point of each line we set the x = argument to the east variable within the dwellings data frame. Likewise we set the the y = argument to the north variable also witin the dwellings data frame. One manner of informing R which variable is needed within a data frame is to use the $ operator. In general terms, the form to call a variable is my_data_frame$my_variable. Following is an example, which should produce something similar to the subsequent plot.

lines(x = dwellings$east,

y = dwellings$north,

lty = 2,

lwd = .75,

col = "blue")

You'll notice that unlike the previous commands, it wasn't necessary to include add = TRUE in order to add the lines to the plot. Perhaps it would also be helpful to add some text to annotate each household. We can accomplish this using the text() command. As with lines(), we also do not need to use the add = TRUE argument. The text() function also requires identifying the location of the text you will use to annotate each dwelling unit. That is accomplished through using the labels = argument. Again, identify the variable where the id is located within the data frame using the my_data_frame$my_variable format.

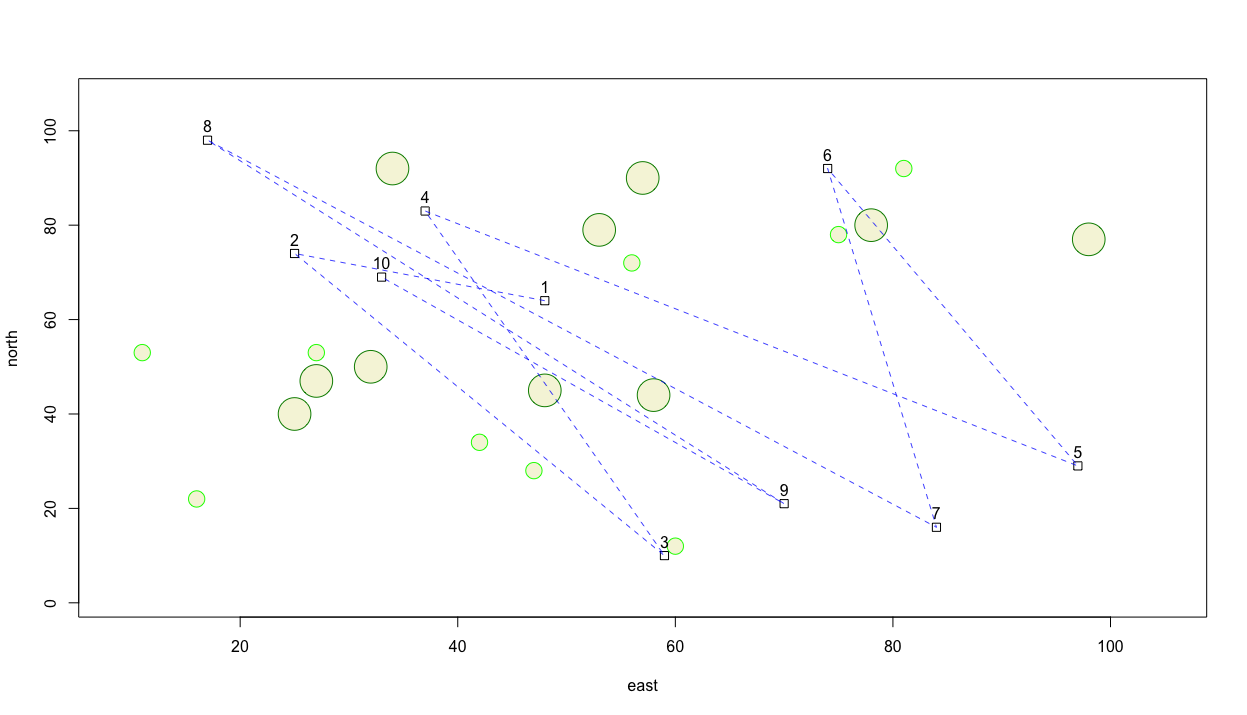

text(x = dwellings$east,

y = dwellings$north,

labels = dwellings$id)

Since label coordinates are the same as the center point for each square, reading the labels is confounded. Instead of placing the label directly on top of the dwelling unit, add a few units north to the y = argument in order to displace each label a bit in the northerly direction.

Now perhaps instead of traversing a path between each house sequentially, our traveling person selected on 3 of the dwellings and moved between each of those buildings. First we will randomly select 3 numbers that will be used to identify the chosen homes. This time, set the replace = argument to FALSE since our traveling person will only visit each dwelling unit one time.

locs <- sample(1:10, 3, replace = FALSE)

Now instead of using the lines() command to identify the x = and y = coordinates of each dwelling unit's center point, we will select only 3 building locations. To do this, we wil lintroduce another method of traversing and selecting rows and/or columns from a data frame for use in an command and its arguments. The [ and ] symbols are extremely powerful operators and can be used to subscript from within a function or argument. Subscripting operators follow the format of first selecting the rows followed by a comma and then columns in this [row_numbers, column_numbers] format. If either the rows space or columns space is left blank, then R assumes ALL rows and/or columns should be selected. In the following command, I am using these subscripting operators to first select the 3 rows from the data frame that were randomly identified and then also include either column 2 for the easterly coordinate, or column 3 for the northerly coordinate.

lines(x = dwellings[locs, 2],

y = dwellings[locs, 3],

lty = 2,

lwd = .75,

col = "blue")

Alternatively I could have also specified x = dwellings[locs, ]$east and y = dwellings[locs, ]$north in order to achieve the same result. The following snippet demonstrates how that is accomplished while adding text in order to annotate each house with its id.

# text(x = dwellings$east,

# y = dwellings$north + 3,

# labels = dwellings$id)

text(x = dwellings[locs, ]$east,

y = dwellings[locs, ]$north + 3,

labels = dwellings[locs, ]$id)

You'll notice in the previous snippet of code that the # sign has been added to the first character space on lines 1, 2, & 3. Adding the # sign enables you to comment out that line of code so R will ignore it. In the above example, I have commented out the lines of code we produced earlier where we labeled all 10 houses, and followed it with out code that serves to label only the 3 units that were randomly selected. At this point our plot should appear similar to the following image.

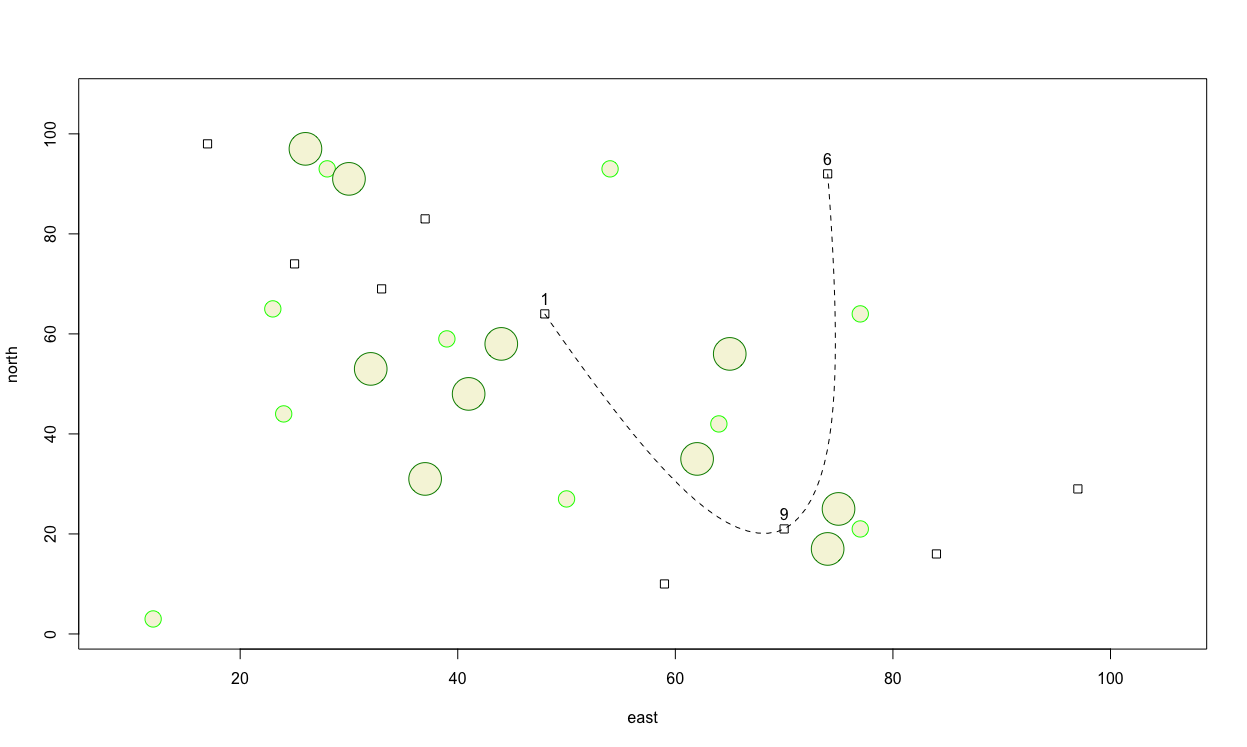

Now instead of using a straight line, let's use a spline to represent a more continuous path betweem each of the selected locations along the persons travel path. Comment out the previous lines() command and instead use the xspline() command to identify the path. I will set the shape = -1 in order to interpolate all points while crossing each dwelling unit.

xspline(x = dwellings[locs, 2],

y = dwellings[locs, 3],

shape = -1,

lty = 2)

Finally, add a title to your plot using title(main="A Person's path between Homes").

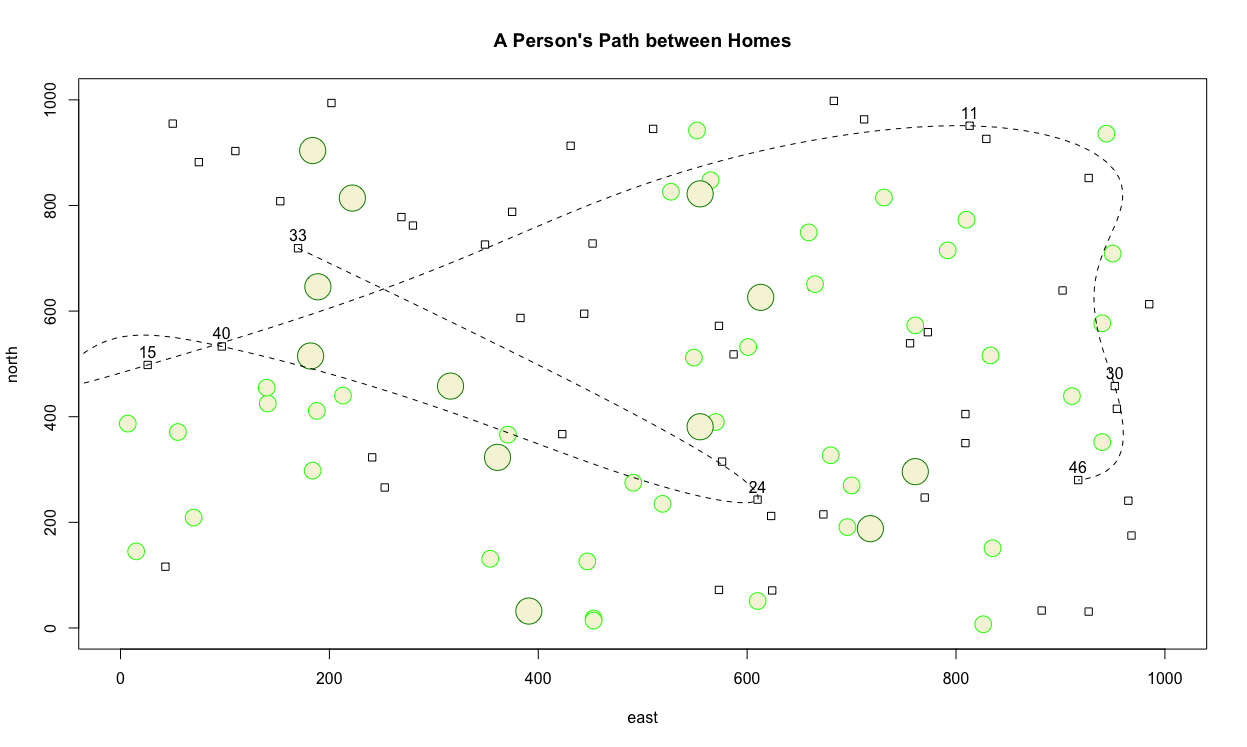

Challenge Question

Create a similar plot as the one produced above, but instead meet the following specifications.

- Increase the minimum and maximum limits of your area from 1 to 1000 in both the

x&ydimension. - Randomly place 50 dwelling units throughout the 1000 x 1000 dimensioned area. Size each square appropriately.

- Randomly place 40 small circles (trees) throughout the 1000 x 1000 dimensioned area. Set the radius of each circle to the same or approximately the same as the width of each home.

- Randomly place 12 large trees throughout the defined area, such that each tree has almost twice the radius as each home's width.

- Randomly select 7 homes from the 50 total, and use a dashed spline to describe the path between each labeled dwelling unit.

- Title your plot.

Python Review

Some basic operations

First lets do some basic operations in Python. See if you can execute the following tasks.

- add 1 + 1

- print 'Hello World!'

- create a variable x that is equal to 1

- create a variable x that is equal to x + 1

Logical operators can be important when working with data that requires us to assess logical comparisons, such as equality, greater than or less than. Logical operators can be used to find specific data we are looking for, or perhaps to filter out rows of data that we may need.

Practicing with logical operators

Now let's practice comparing values using some of the logical operators available in Python.

- write a logical operation that compares the sum of 1 + 1 with the numerical value 2

- compare the character values of the words 'cat' and 'dog'

- write a logical expression that determines whether 4^6 is greater than 6^4

- write an inclusive "or" statement (use the pipe which is the | character as the "or" operator) evaluating both the character values of the words 'cat' and 'dog' AND whether 2 is greater than or less than 1 -- in this case either A or B, or both is true

- write an exclusive "or" statement evaluating both the character values of the words 'cat' and 'dog' AND whether 2 is greater than or less than 1 -- in this case either A or B, but not both is true

Next up is something you should definitely be aware of! NaN ("not a number") is not equal to itself! It is also the ONLY value for which this is the case! This is important, because missing records in a data set are often treated as NaN, and you will sometimes need to be careful about how you handle them. It may not happen very often, but it will happen eventually and can cause confusion if you're not aware of it.

Try assigning float('nan') to the variable x and then evaluating that same variable with itself in an equality statement (using the == logical operator). Also try using the not equal logical operator (in this case !=) to evaluate x with itself.

From the results of the logical comparison, you could conclude that x must be NaN. This may show up at some time in the future, don't forget it! There's also usually special operations for checking if something is NaN. Use the import() to load the math library of functions into your PyCharm work session. Now evaluate x with the command math.isnan(). Also try running the same command from the numpy library by again first using the import() library command and then using the isnan() command. To distinguish the two similarly named functions from the two different libraries, specify the library in the command itself numpy.isnan().

Evaluating Lists and Subscripting with an Index

If we attempt a similar approach where we evaluate each term in a list, we might not obtain the expected results. For example try the following.

y = [1,2,float('nan'),4,5,float('nan')]

y

First we could extract one of the values in the list by using a subscripting operator such as y[2]. Notice that Python indexes values in a list starting with 0, thus the 2 will in essence retrieve the third value in the list. Also, consider the evaluation of y == y, which is evaluating the equality of two lists rather than two float objects as in our previous example. To isolate and evaluate individual values within the list we could evaluate each term as part of a loop, one by one.

for yi in y:

print(yi==yi)

To simplify the command in a single line of code, use "list comprehension" syntax.

[yi==yi for yi in y]

An even simpler approach is to evaluate the list y using the np.isnan() command.

Last but not least, you might want to identify which values in a list match some criteria, and then perhaps remove or keep those elements from the list. Let's suppose that we wanted to remove the NaN values from y. To do this, first, identify the elements in the list that match your criteria by creating an object idx and then assigning the output from y using the np.isnan() command. You can negate boolean values using the ~ preceding the object (in this case idx).

To isolate and drop our Nan values we start by assigning the output from np.array(y) command back to the y object and essentially writing over and replacing that object. Now use the inverted values to retain those values that have true records in the list by subscripting the index like this y[~idx]. Go ahead and assign those values to a new object named y_filtered.

Using the pandas library and DataFrames

The first thing you'll usually do when writing Python code is to import any libraries that you'll need. When working with data files, the pandas library offers all sorts of convenient functionality. You can also give the library a shorter name (alias) in the process using "as" like this import pandas as pd.

Next, let's get our file imported. First, open up the file in the text editor and take a look at it. You'll notice that there are tabs between each value in the file. In other words, it's a tab-separated file. We'll need to let pandas know this when we import it because, by default, it's going to assume a comma-separated file.

First, specify where the file is located by creating an object named path_to_data and then assign the path of your tab separated values file to that object. If your gapminder.tsv file is in your project folder, then you only need to specify the name of the file itself. Don't forget to nest the file name in '' quotes.

To import the data use the read_csv() command from the pandas library of functions with the path_to_data object you already created. In order to specify that command first use the library alias followed by the command itself, pd.read_csv(). Assign the output from reading your gapminder.tsv file to a new object that is simply named data. Finally, in order to inform Python that our data is in a tab separated format, we need to add an additional argument following the path_to_data object. Use the \t specification in your argument to execute the command as follows.

new_object = library_name.function_name(path_to_file_object, sep = '\t')

To interrogate the new data frame object we just created, you can start by simply typing data in the console. You can also look at just the top, or just the bottom, using data.head() and data.tail(). To further specify the number of lines from the data object you would like printed in the console add that number as a value within the .head() or .tail() command.

You can check the number of rows and columns in your data frame with the .shape command or if you would like to have the number of total cells returned you can use .size. If you would like to manually confirm the number of cells, you can subscript the first and second values from the shape of the data. Keep in mind that the first value is in the 0 place while the second value is in the 1 place.

data.shape[0]*data.shape[1]

To get a quick summary of the names of the columns, as well as what type of data use data.info() or if you just want a quick list of the column names use data.columns. If you check the your data.columns output with the type() command, you will find that the columns themselves within your pandas data frame are an Index. To return a list of just the column names, instead use list(data.columns). Using the .describe() command is a good way for Python to return descriptive statistics about your data frame.

Subsetting our data frame

Now let's extract all the data for Asia and store it in a new DataFrame. To do this create an index of all the rows where data['continent']=='Asia' is true. Then once you have your index in place, use it to subset from your data object with all of the records where data['continent'] == 'Asia'. You could use the subscripting operator to subset on the fly like this.

data_asia = data[idx_asia]

Now consider if we only wanted the data for the most recent year, from Asia? We could again select by using our data frame data_asia with the column (or variable) identified using the ['year'] syntax and then append a .max() command to the end. This value for the maximum year from the data frame can be assigned to a new object, and used to create an index for subsetting all rows (or observations) where the year variable is equal to the maximum year

new_obj_w_max_yr = all_countries_in_asia_df['year_variable'].max()

idx_year = (countries_in_asia_df['yr_var'] == max_year)

data_asia = data_asia[idx_year]

data_asia

Alternatively, you could have setup the subscript from your data frame as one command.

data_asia = data[(data['variable']=='select_continent') & (data['yr_var'] == data['yr_var'].max())]

Even though the results above are what we wanted, the logic we used is not equivalent to what we were originally trying to do. For example, if there were no records for Asia for 2007, then we would have gotten back an empty DataFrame. So, here's a better way. First, I would write down what I want to do:

Goal: Specify a continent, and extract the most recent data from that continent

Next, do one operation at a time to get to the desired result:

cont = 'select_continent'

idxCont = data['variable']==cont

temp = data[idxCont]

year = temp['variable'].max()

idxYear = temp['variable']==year

data_final = temp[idxYear]

data_final

And if this was something I thought I would be using a lot, I might even write a function. Start out with the def command and follow it by the name of the function you wish to define and specifications within a parenthesis.

def GetRecentDataByContinent(data,continent):

idxCont = data['continent']==continent

temp = data[idxCont]

year = temp['year'].max()

idxYear = temp['year']==year

return temp[idxYear]

Now execute the function you just created by running GetRecentDataByContinent() with your data object and the 'Asia' outcome for the continent variable. Assign it to a new object and review the output.

Back to the original dataset - can we get a list of all the countries that exist in the data? As you did before, append a function to the end of your data frame object where your variable specified. In this case the unique() function will help us to isolate all of the individual country names.

data['variable'].function()

How many countries are in that list? It's a little long to count manually. Nest the entire previous command in a the len() function in order to return the number of unique observations in the list.

function2(data['variable'].function1())

What if we wanted to know how many records there are for each individual country? Append the value_counts() command to your data frame and variable specification in order to return these results. If you would like to return the entire list, assign the results to a new object and print() this data frame with the .to_string() command appended.

new_data = data['variable'].value_counts()

print(your_new_data.to_string())

Can we sort the list by the country name? Yes - but we need to understand what type of object we are working with first. Use the type() command to return the class of our object. It appears this is a series object. A Series is basically a column of a DataFrame, and just like a DataFrame, it is a special type of object that has all sorts of built-in functionality. The column with the country names is the index of the Series. Just like with DataFrames, the index is not an actual column. If you want to sort by it, then you would need to use the sort_index() function.

print(your_df.sort_index().to_string())

Indexing DataFrames

There are several ways to extract individual rows from a DataFrame, usually you will be using .loc or .iloc. Let's identify the record with the largest GDP per capita:

max_gdp = data['variable'].max()

max_gdp

max_gdp_idx = data['variable'].idxmax()

max_gdp_idx

If this were an array, you might try data[max_gdp_idx] but instead use the location command .loc to extract the row with the label (index) 853 data.loc[max_gdp_idx].

Using .iloc is a little different. To see why, compare the output from data_asia.loc[11] and data_asia.iloc[0], and notice how .iloc is fetching the row by it's integer position, whereas .loc is fetching the row by its label. In this case, the first row of the data (starting counting at 0) has the label 11.

Sometimes, you might want to reset the index so that the rows are labeled sequentially again. Append the reset_index() command to your data frame to do this. Adding the drop=True argument drops the previous used index as it is no longer needed.

data_frame = data_frame.reset_index(drop=True)

data_asia

Exercises

-

Get a list of all the years in this data, without any duplicates. How many unique values are there, and what are they?

-

What is the largest value for population (pop) in this data? When and where did this occur?

-

Extract all the records for Europe. In 1952, which country had the smallest population, and what was the population in 2007?

References

Python Review by Ron Smith, Data Science Program, William & Mary. Converted from an .ipynb. 2021.

Chapter 2

Describe

Locating and retrieving administrative subdivisions for your selected LMIC, as well as plotting boundaries and labelling each local government unit.

Projecting, Plotting and Labelling Administrative Subdivisions

We have had a bit of practice creating a theoretical environment, but now we will move to a more practical application. In this exercise you will learn how to install a package and load a library of functions into R, install spatial data as a simple feature and then use the grammar of graphics (aka ggplot::) to plot your geospatial data. To begin, install a package that will be used in order to describe and analyze our simple features.

install.packages("tidyverse", dependencies = TRUE)

In the above command we are installing a collection of packages designed for data science, where all packages share a common design. Once RStudio has informed you that the package has been installed, you may then execute the command that makes the library function available for use during your current work session.

library(tidyverse)

After executing the library command, R may inform you about the current version of attached packages while also identifying any conflicts that may exist. Conflicts between functions often exist when one package installs a function that has the same name as another function in another package. Generally, what happens, is the latest package to be installed will mask a same named function from a previously loaded library.

The tidyverse is not one library of functions, but is in fact a suite of packages where each one conforms to an underlying design philosphy, grammar and data structure. In the previous exercises we used commands from the base R package, but in this exercise we will begin to consider the more recent development of the tidyverse syntax nomenclature that emerged from the gramar of graphics (ggplot2). The tidyverse is arguably a more coherent, effective and powerful approach to data science programming in R.

After installing and loading the tidyverse suite of packages, let's install yet another important package that is used when working with spatial data.

install.packages("sf", dependencies = TRUE)

This will install the sf package, or simple features, which like the tidyverse is a recent, arguably more effective implementation of a design philosphopy for using spatial data in R. The sf:: package also has been designed to integrate with the tidyverse syntax. After installing sf::, then as before run the library() function to load the library of functions contained within the package for use in your current R worksession.

After running the install.packages() command successfully, you should add a # at the beginning of that line in order to comment it out. Running the install.package() command is generally necessary only once, in order to retrieve the package from a remote location and install it on your local machine, but it is necessary to run the library() command each time you open R and wish to access a function from within that library.

Another helpful command to add at the beginning of your script is rm(list=ls(all=TRUE)) , which will delete everything from your R workspace from the outset. By running this line of code first in your script, you will be working with what John Locke called a tabula rasa or a clean slate from the outset. After adding the remove all function as your first line of code but after installing your packages and loading those libraries, be sure to set your working directory. While it's fine to use the drop down menu to find the location of your working directory, the first time, it is a good idea to copy that line of code into your script, so the setwd() command can be executed programmatically instead of through the GUI (which will save you time). At this point your script should look like the following snippet of code.

rm(list=ls(all=TRUE))

# install.packages("tidyverse", dependencies = TRUE)

# install.packages("sf", dependencies = TRUE)

library(tidyverse)

library(sf)

setwd("the/path/to_my/working/directory")

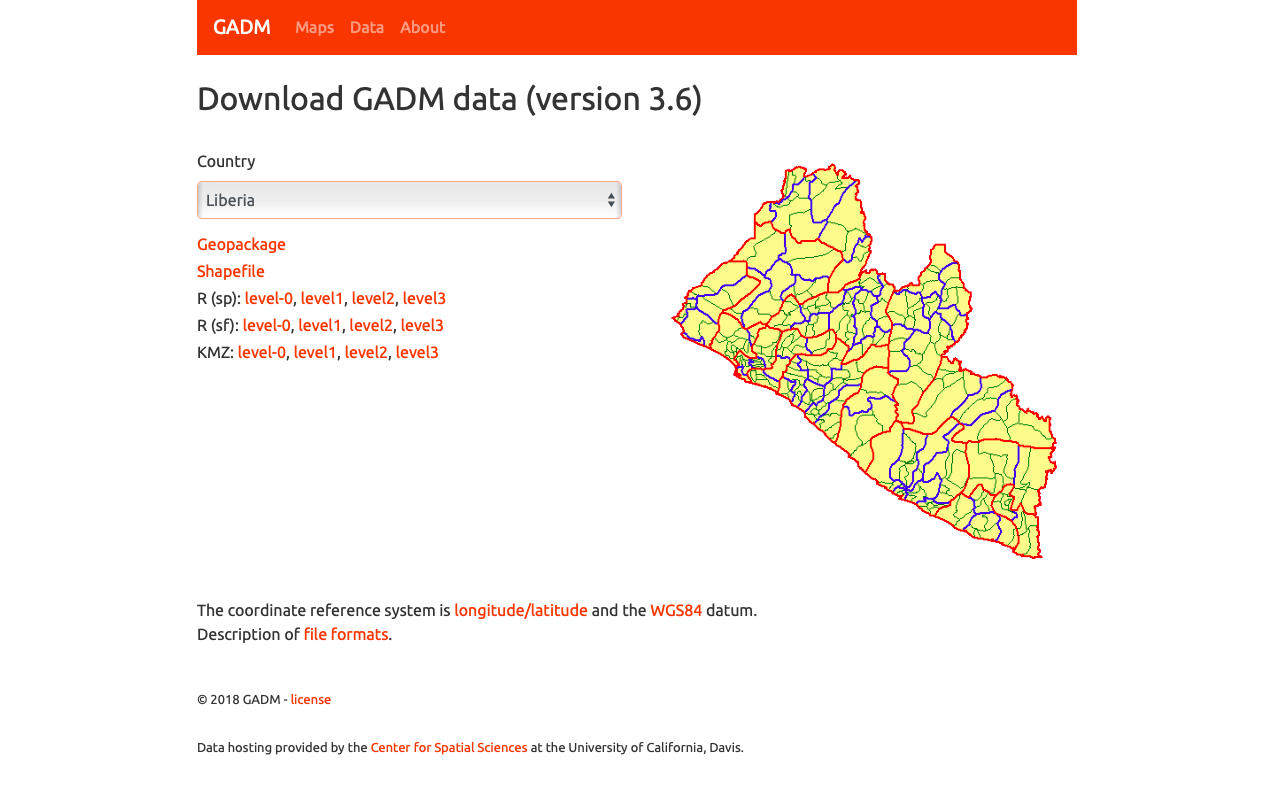

The next step is to visit the GADM website, which is a repository of spatial data that is describing global administrative subdivisions or every country on earth. Select the data tab and become familiar with how the portal presents the administrative subdivision of each country. Find the country link towards the top of the page that forwards you to another page with a drop down tab for downloading GADM data.

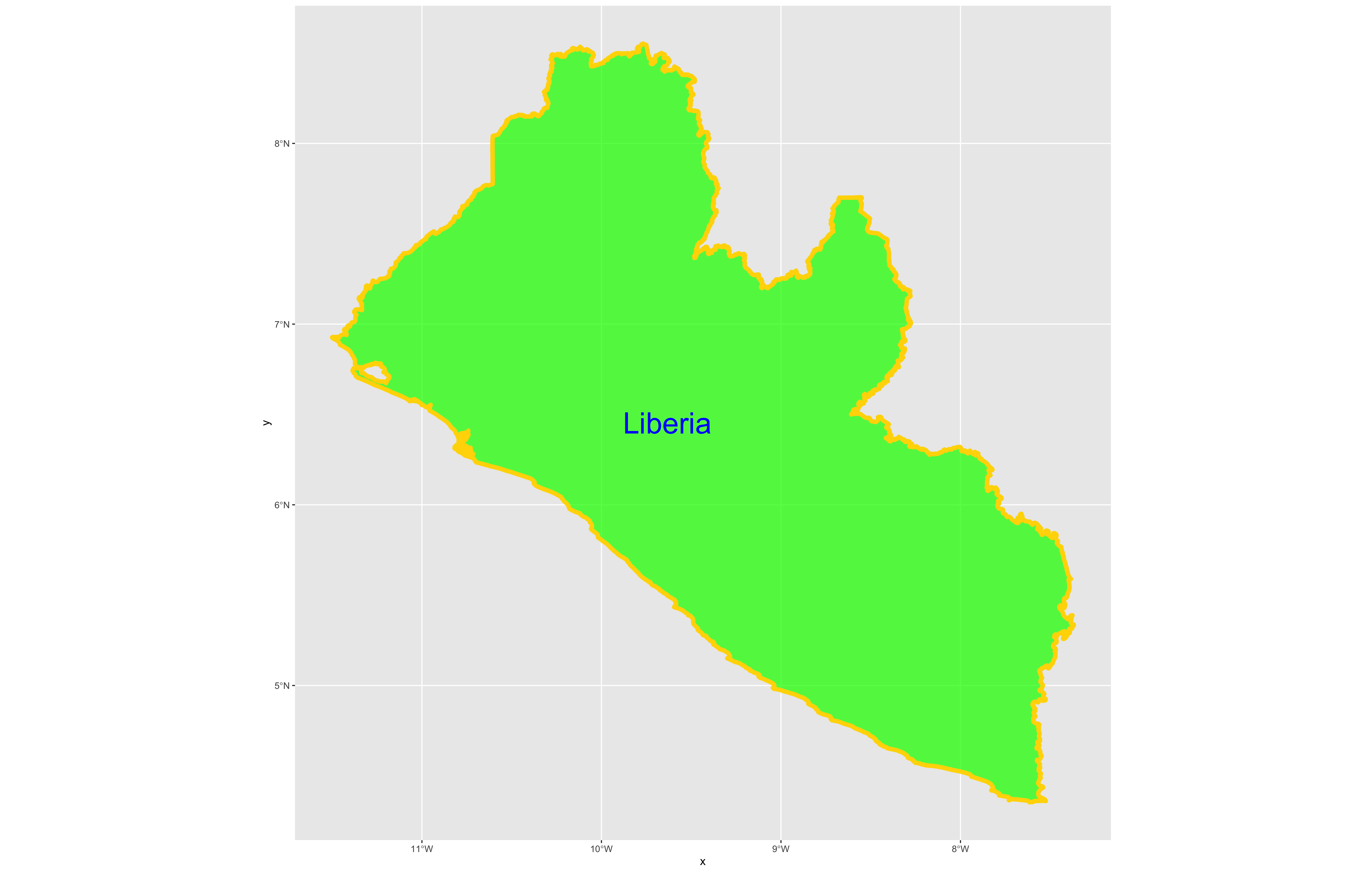

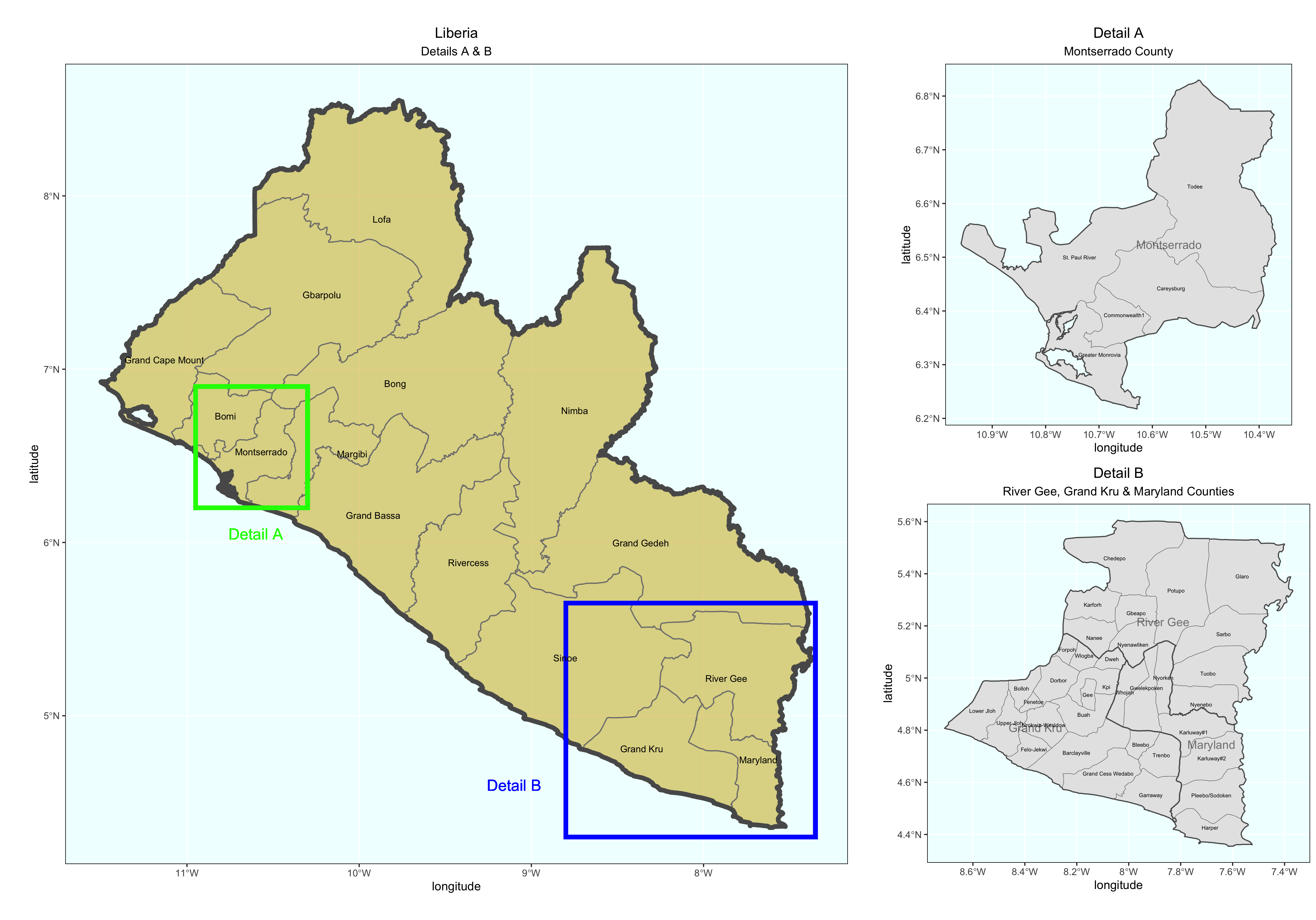

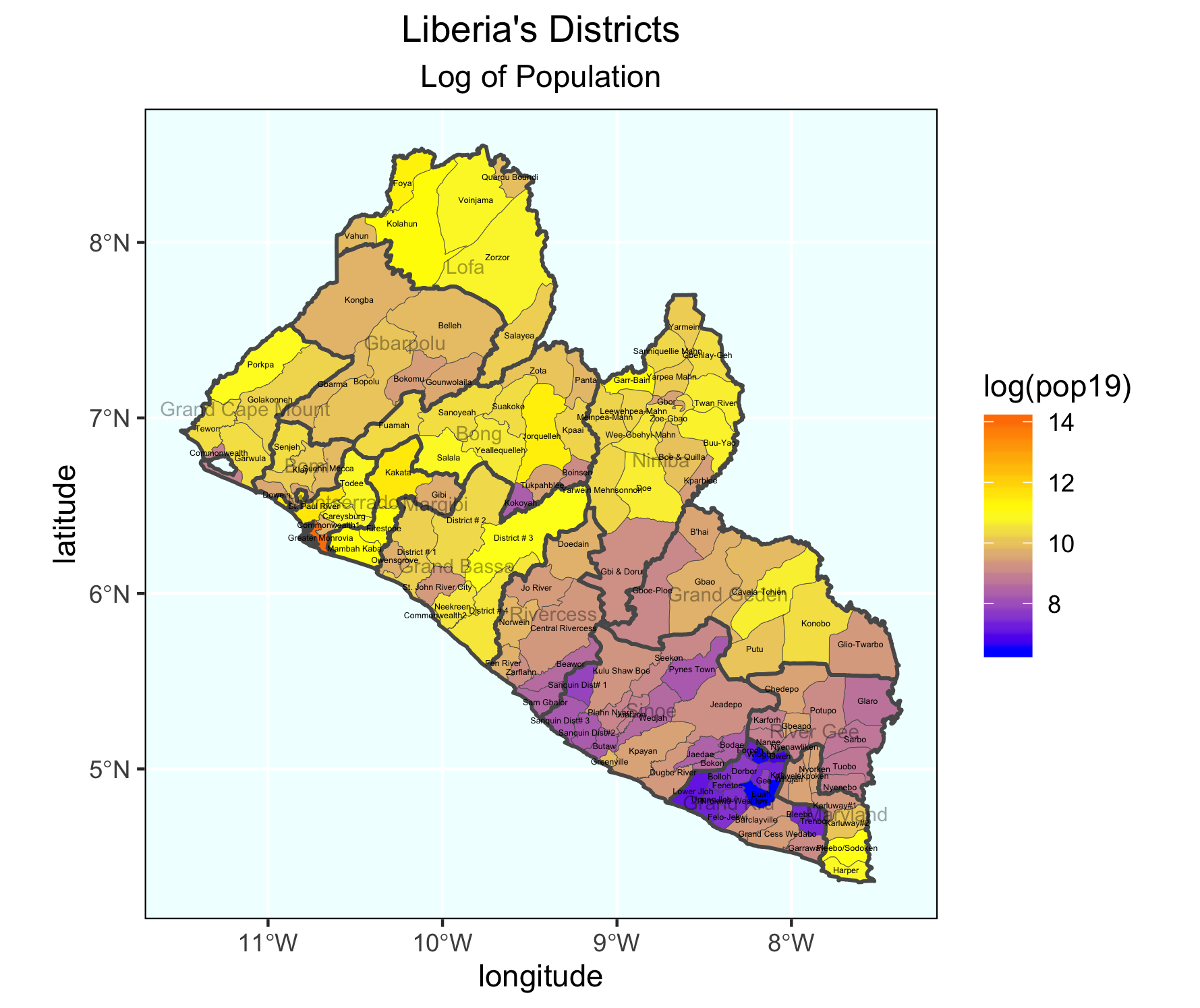

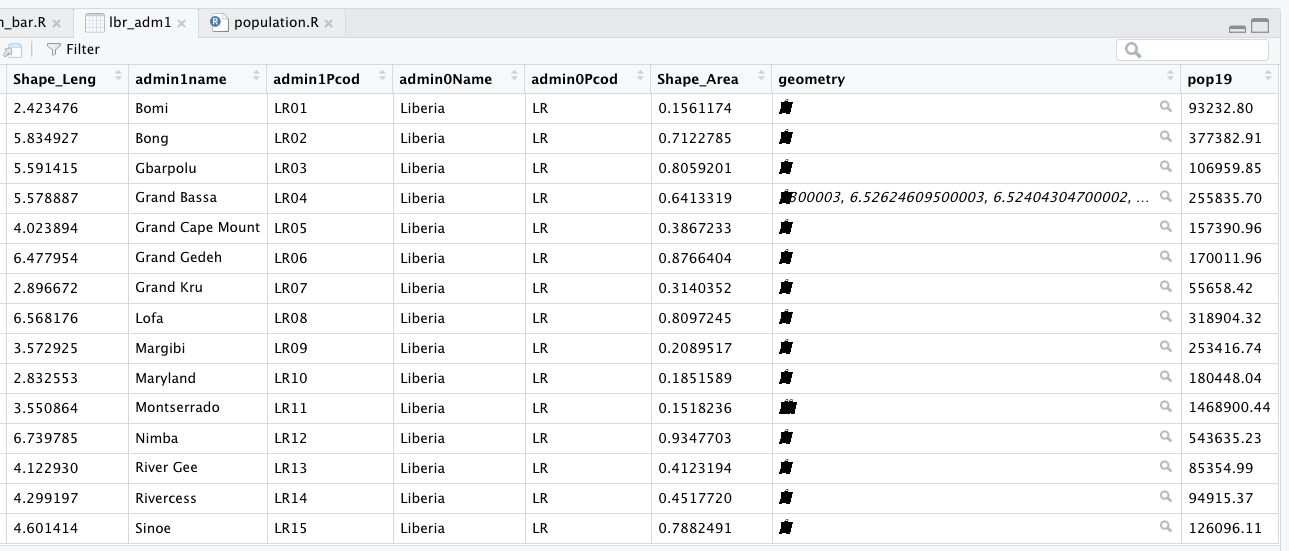

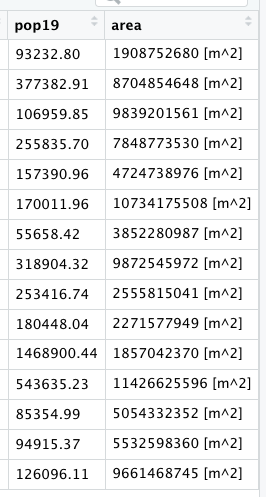

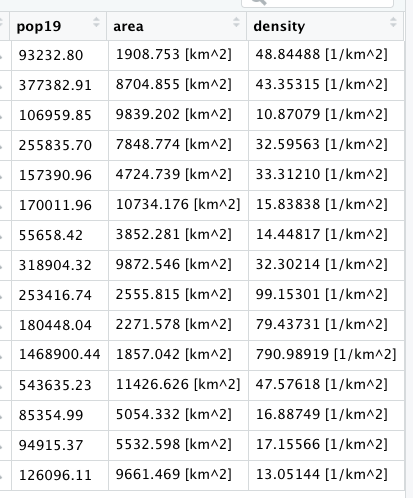

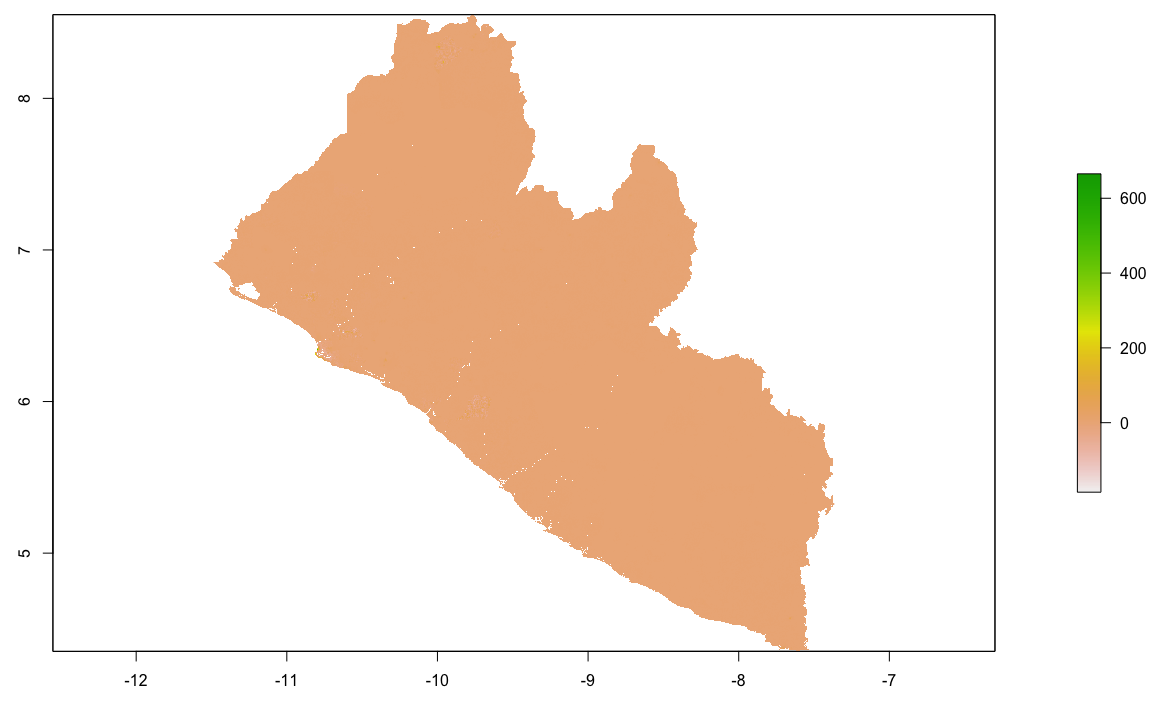

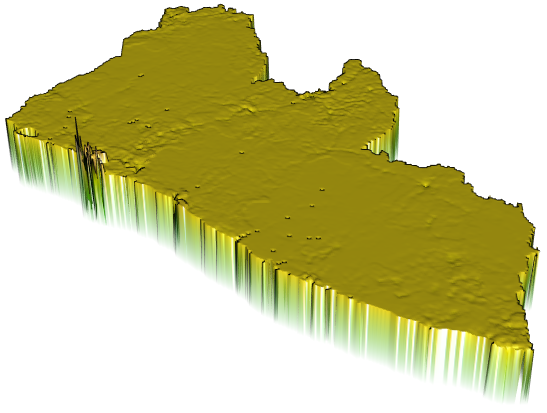

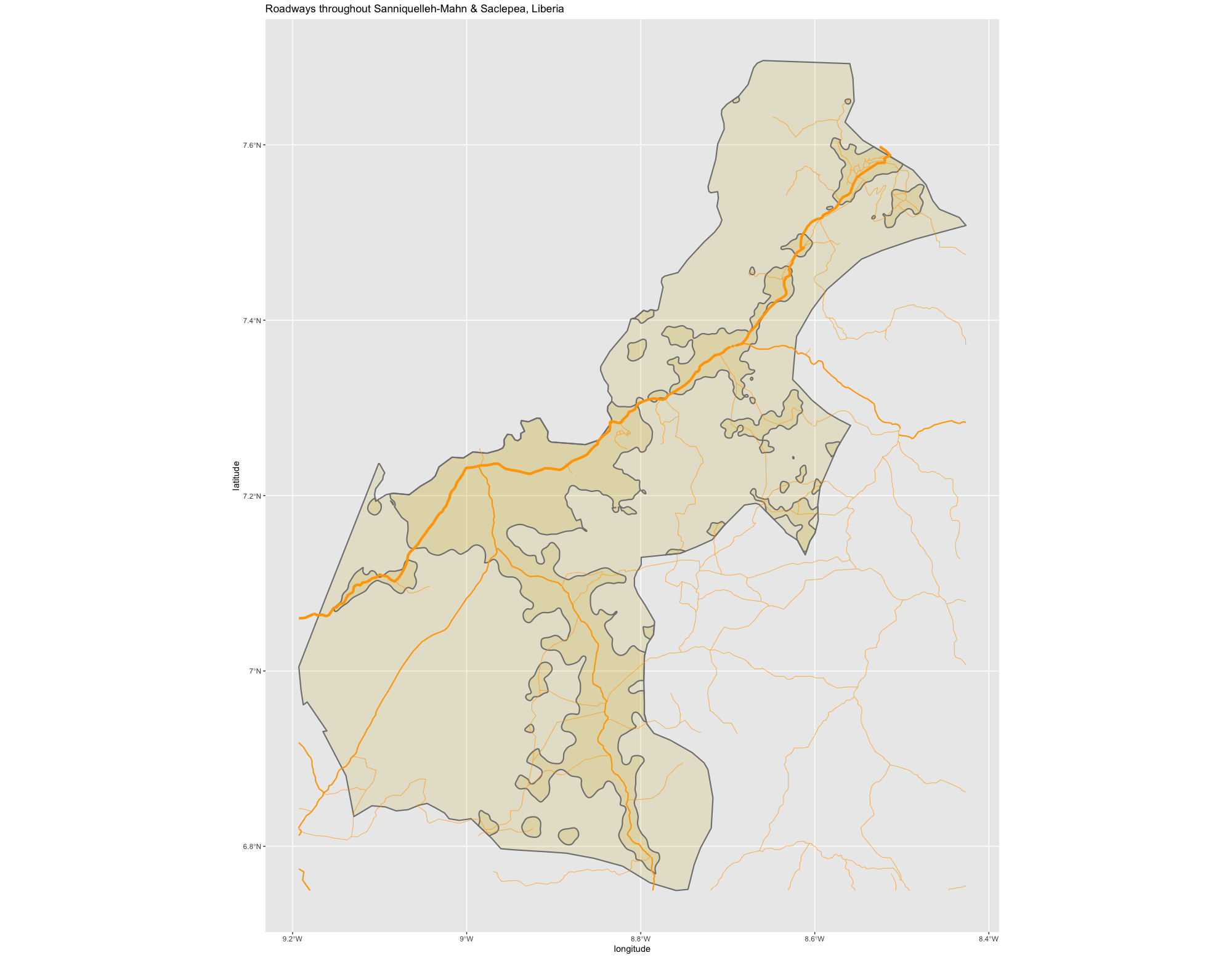

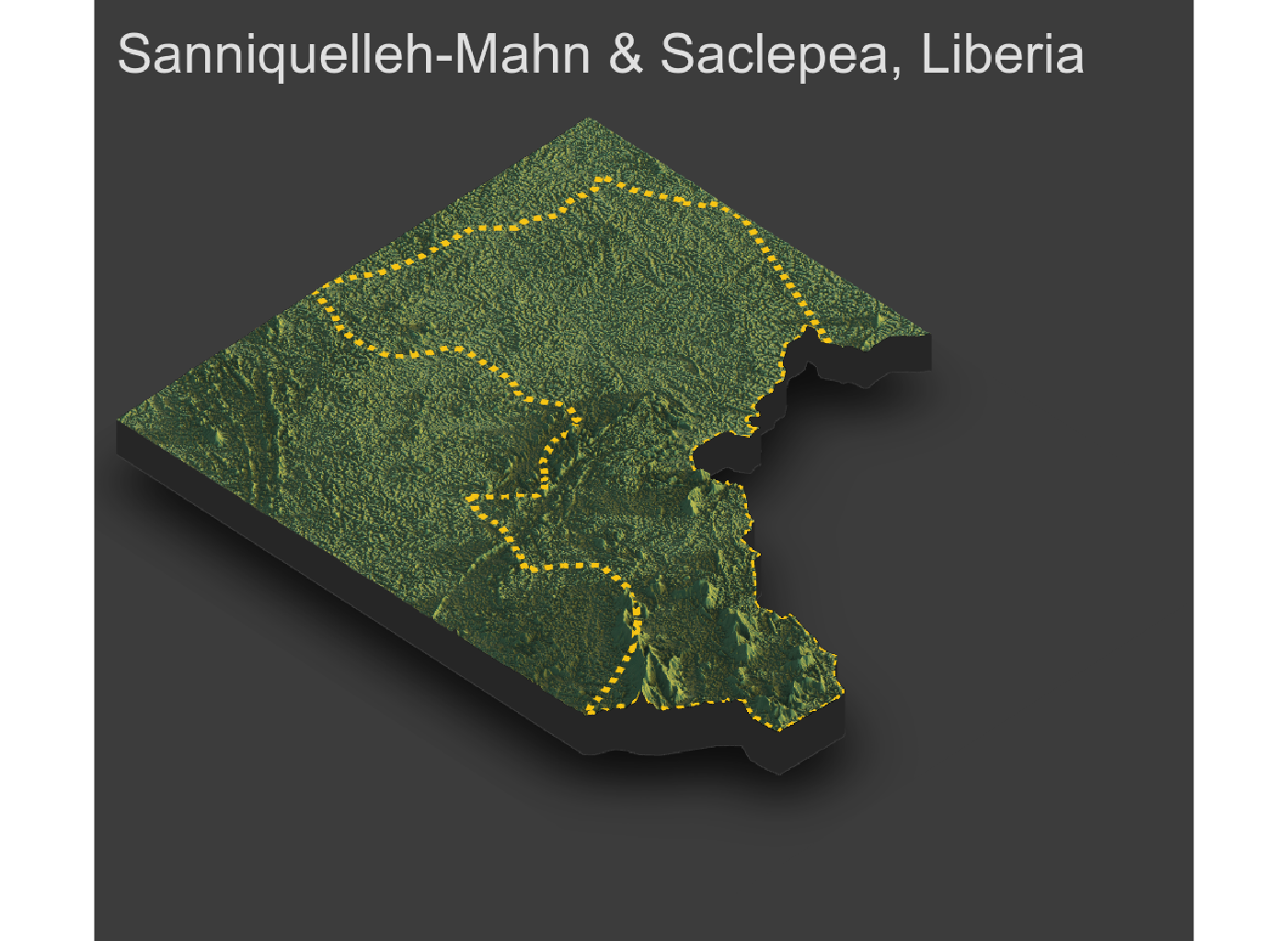

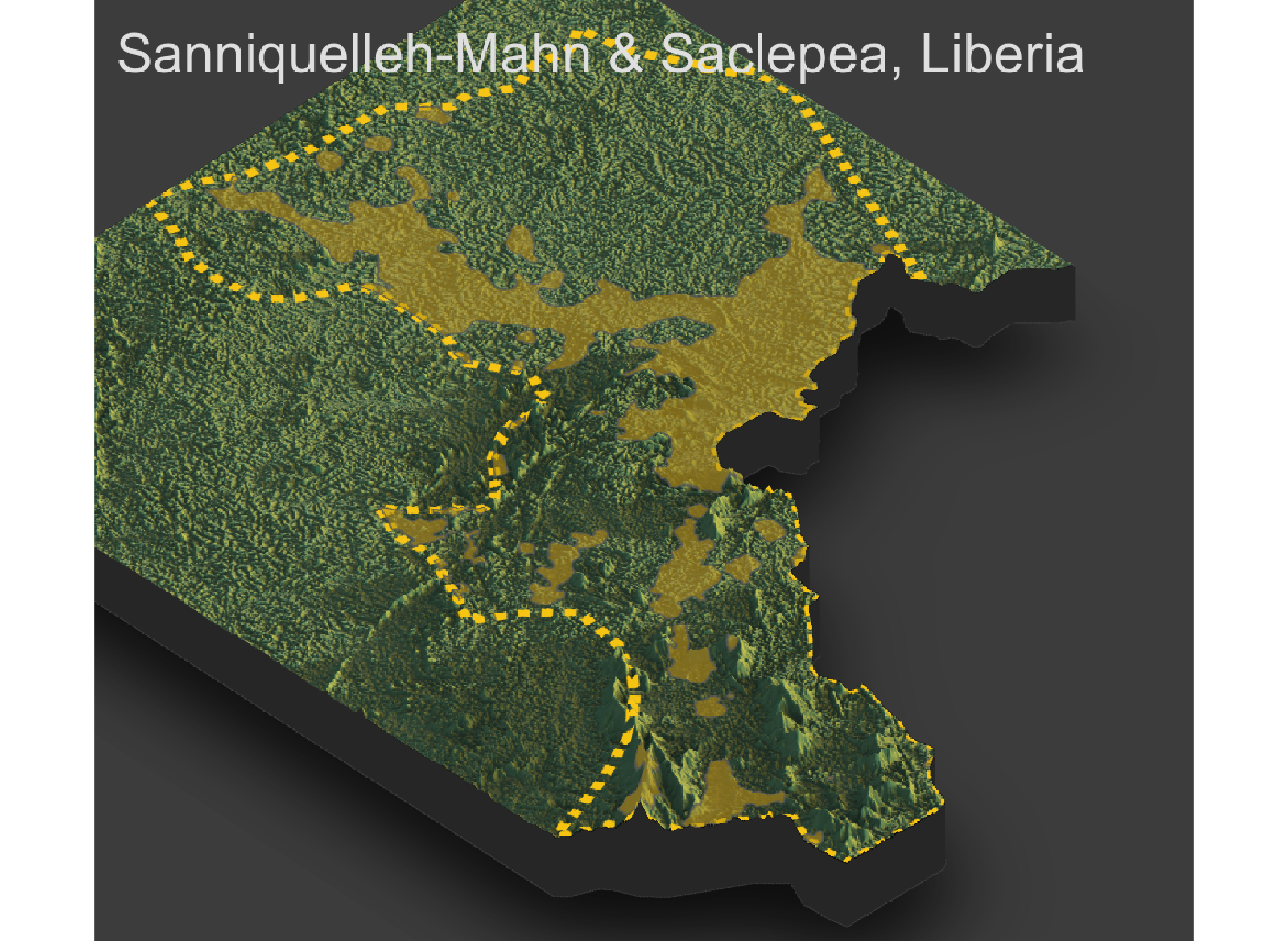

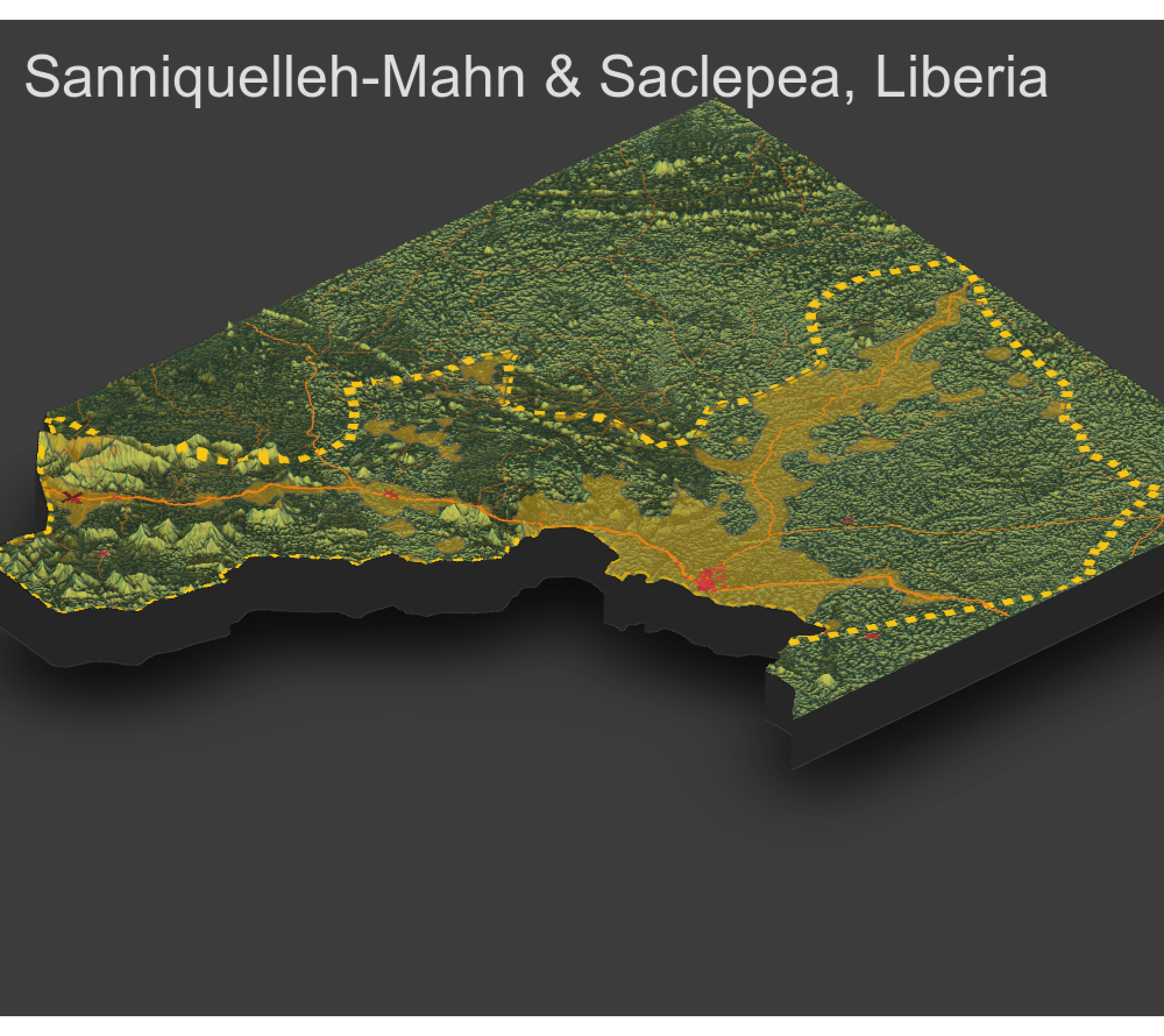

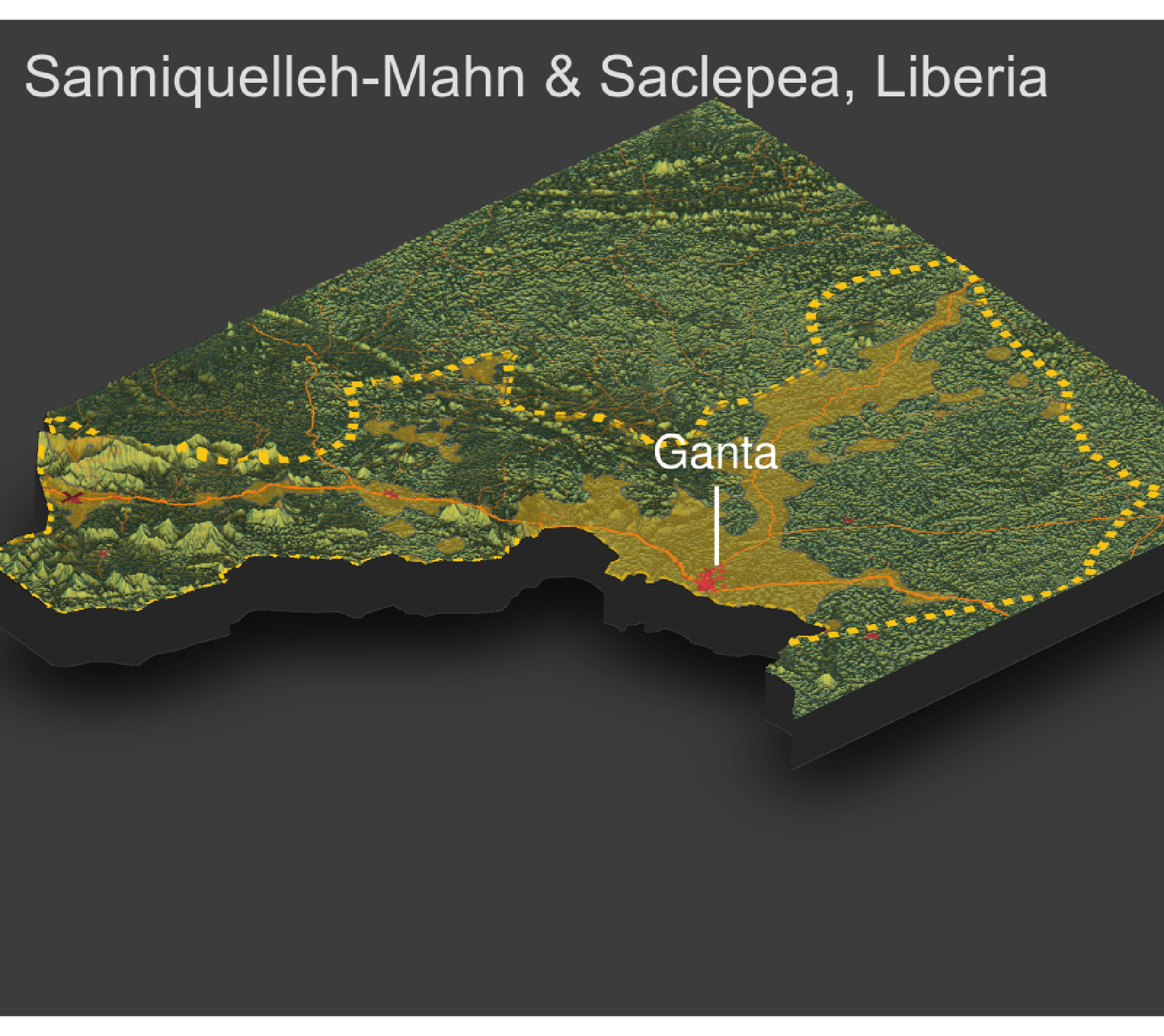

As an example, I will select the West African country of Liberia. The result should present a number of different options for obtaining a spatial data that describes of Liberia's administrative boundaries. Administrative boundaries refer to the national border as well as all of the regional, district and local government subdivisions of that country.

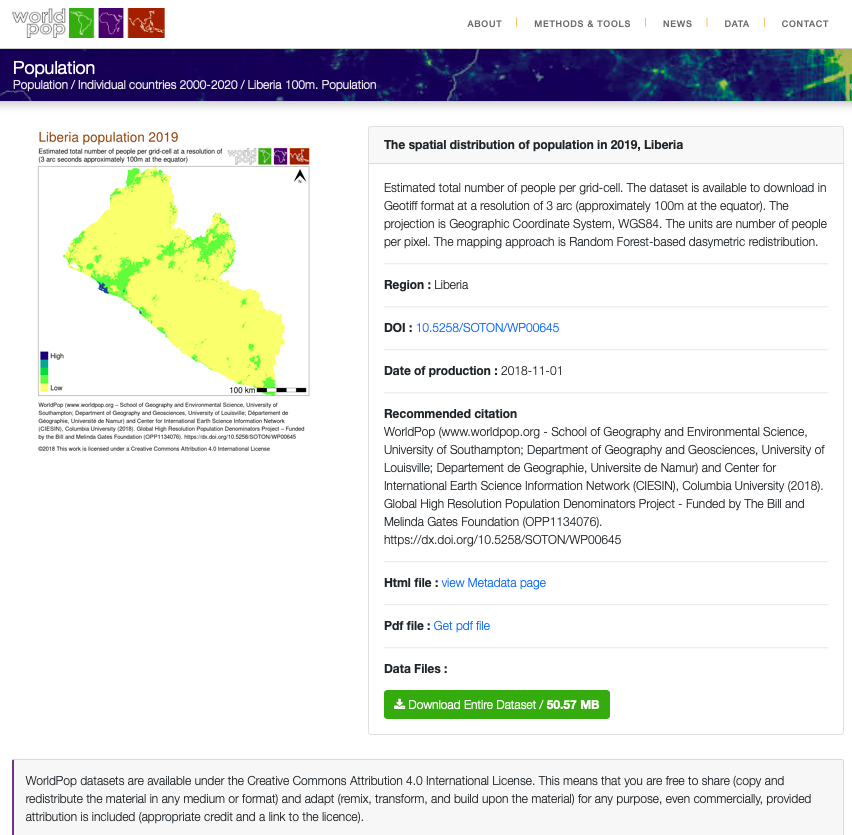

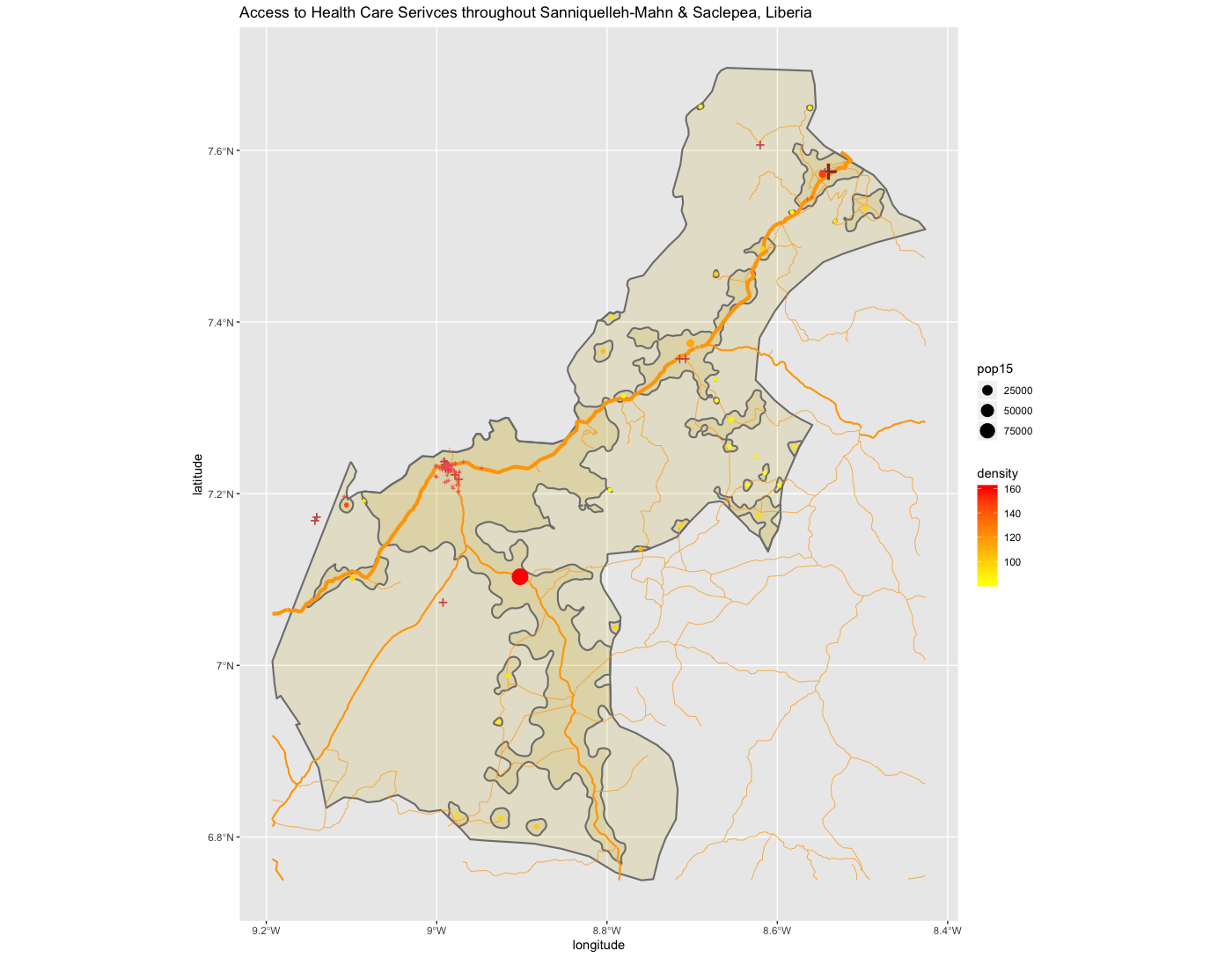

Throughout the course of the semester we will use a number of different data sets that describe healthsites, settlements, roads, population, pregnancies, births, and a number of other local dimensions of human development. Some of the data made available through WorldPop or the Humanitarian Data Exchange will have been remotely sensed, usually from a satellite orbitting the earth. This remotely sensed data is then classified according to different discrete types or perhaps by assigning values or intervals of possible values. Other times the data available will have been obtained from a source in the field, and most typically from some institution or group located or working within that particular country. Surveys and census data are examples of secondary sources that were most often obtained from local institutions. Like remotely sensed data, secondary sources also serve to provide a description of existing conditions, while serving as the basis for further analysis, modeling, inference and potential simulations.

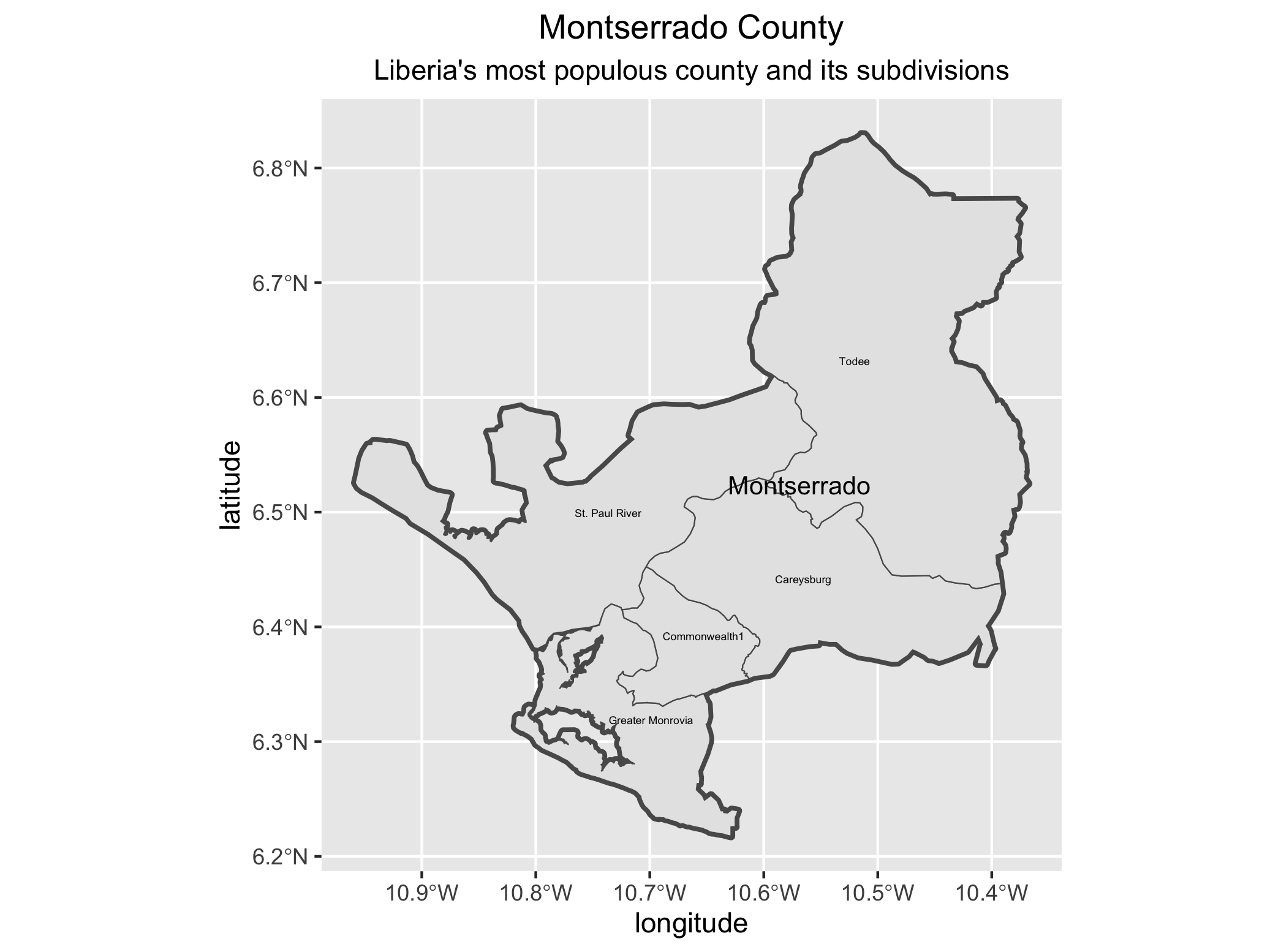

Typically, administrative boundaries and subdivisions have been obtained and provided by one of the regional offices within the United Nations Office for the Coordination of Humanitarian Affaris (OCHA). For example, the secondary sources of data that describe Liberia's political geography were likely provided by the Regional Office of West and Central Africa (ROWCA), presumably as they have obtained these sources from a ministry of government from within Liberia. Every country employs a unique nomenclature in order to describe its administrative subdivisions. Liberia is first subdivided into counties with each county further subdivided into districts. Each of Liberia's districts is then further subdivided into what are called clan areas.

Once you have found the the page for downloading Liberia's administrative subdivisions, note the different available spatial data types, as well as the different levels.

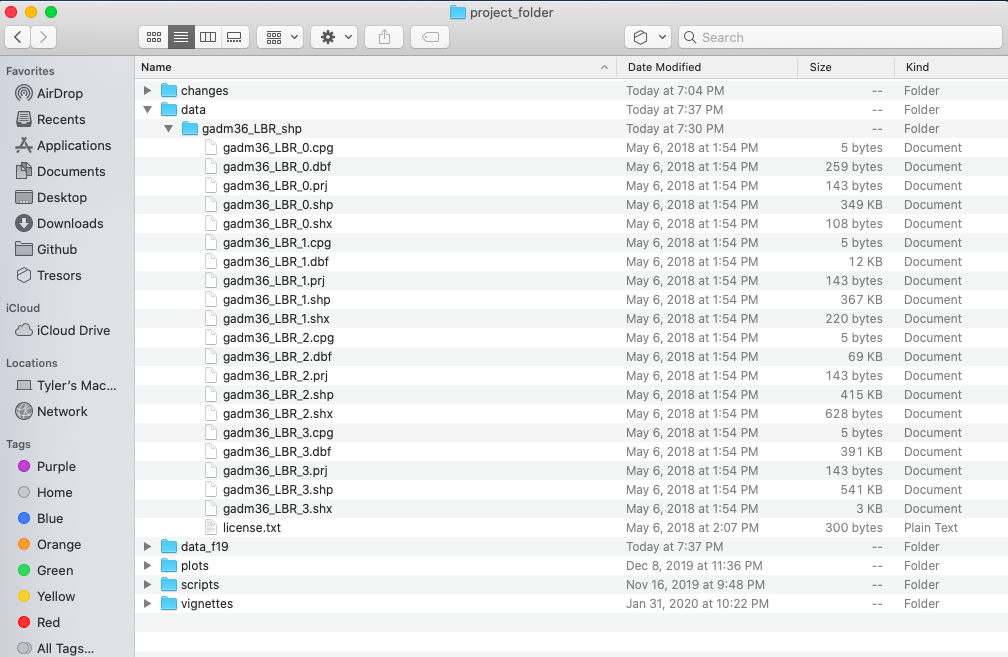

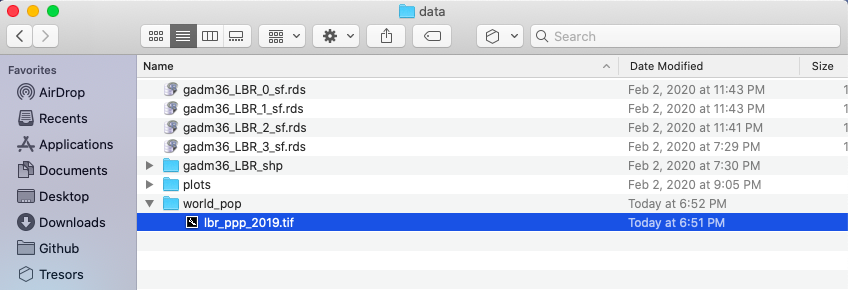

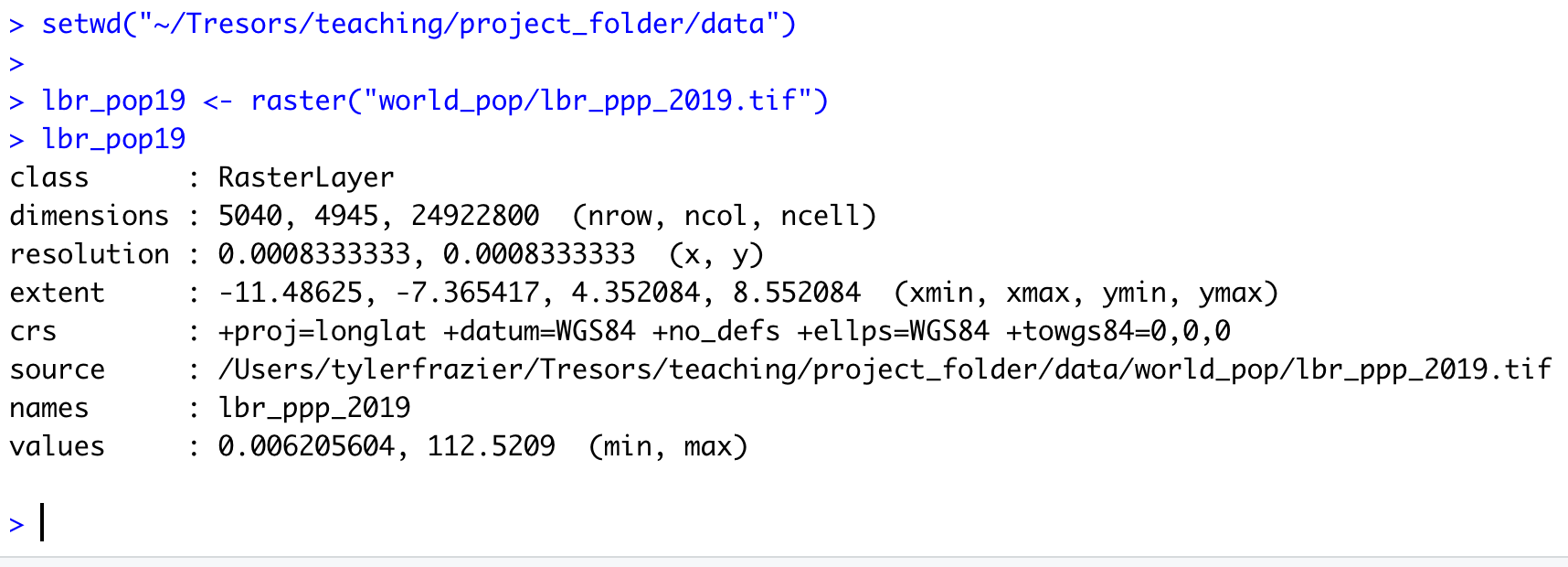

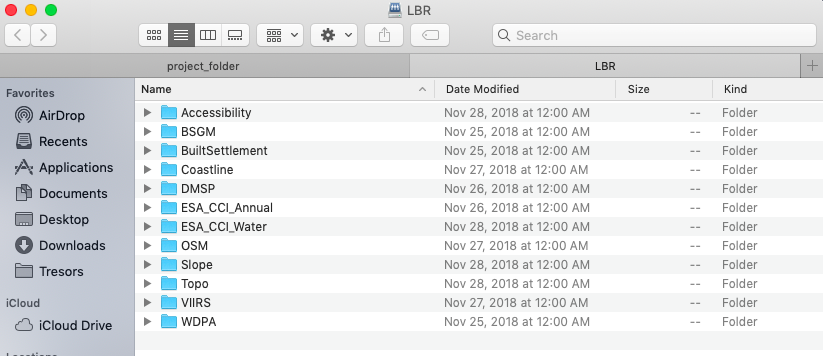

For our purposes, we want to obtain the national boundary (LBR_0), first level administrative subdivisions (LBR_1) and second level administrative subdivisions (LBR_2). Click on the Shapefile link in order to download a folder that contains a shapefile as well as a number of different corresponding files. After the folders have been downloaded, go to your working directory and create a new folder named data and then move the folders describing Liberia's administrative subdivisions to within that folder. The structure of your working directory should look something like the following (minus the additional folders).

You will also notice that there are a number of different files, each one with the same file name yet also having a unique file extension. The file extension is the three letter part of the file name that is to the right of the period, and acts somewhat as an acronym for the file type. For example, files that have the .shp file extension are called shapefiles. A shapefile contains the geometry of the points, lines and polygons used to spatially describe, in this example, the political geography of Liberia. A shapefile also requires most of the other files found in the folder in order for it to function properly. For example, the .prj file provides the projection that is used when plotting the geometry. The .dbf file provides the attributes associated with each spatial unit (for example the name associated with each county or district). Other files also provide information that enables RStudio to further interpret the spatial information in order to better serve our purposes.

In order to import a shapefile into RStudio we are going to use a command from the sf:: package (simple features). RStudio will need to find each of the .shp files in order to import the international border, the first level administrative subdivisions and the second level administrative subdivisions. If I have set my working directory to the data folder, then RStudio will need to traverse through the subfolder in order to locate the correct .shp files. You also will need to use the read_sf() command to import the .shp file into RStudio and create a simple feature class object.

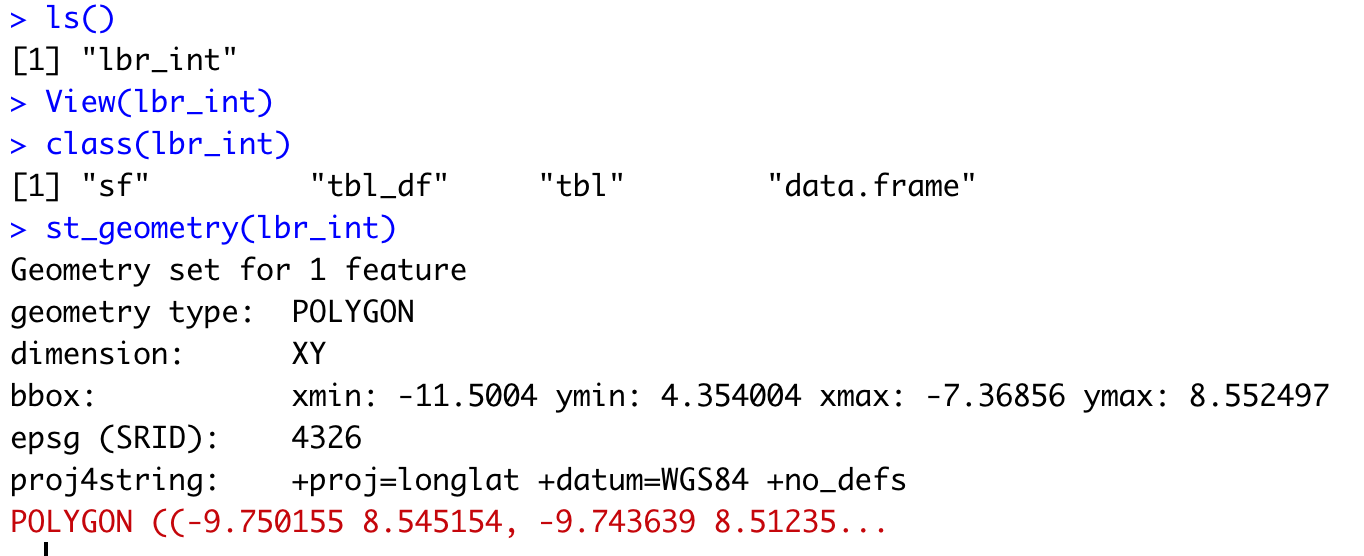

lbr_int <- add_command_here("add_folder_here/add_file_name_here.shp")

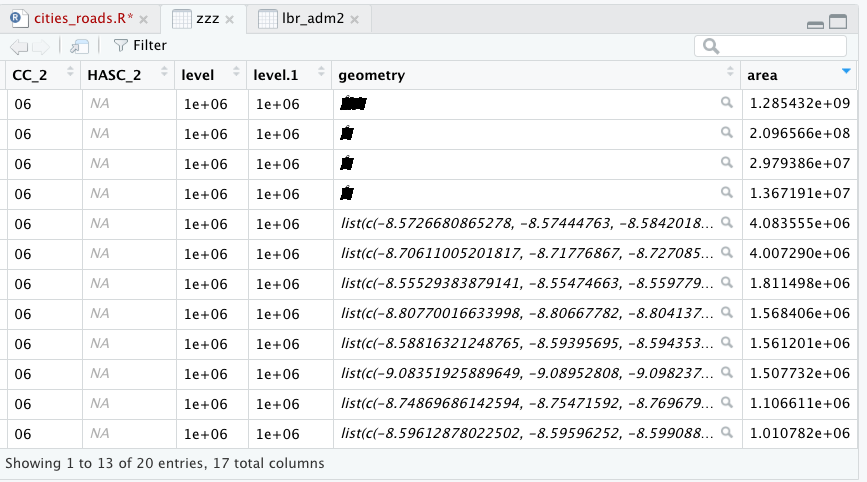

Once you have successfully executed the above function using the sf::read_sf() command, you should observe a new object named lbr_intappearing in the top right data pane within your RStudio environment. To the right of your newly created object there is a small gridded box that you will be able to click on in order to view individual attributes associated with this simple feature class spatial object. You will also notice that within the data pane, RStudio also provides you with some basic information about the object, in this case 1 observation that has 7 variables.

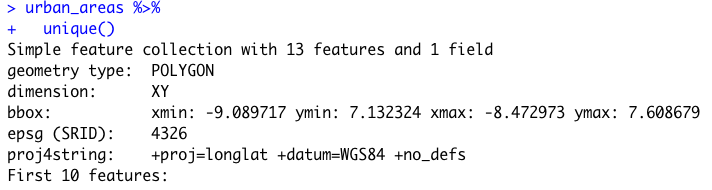

The sf:: package also includes a function called st_geometry() that will enable you to view some of the basic geometry associated with the object you have named lbr_int. Type the name of your object within the st_geometry() command so that RStudio will return some basic geometric information about our spatial object that describes Liberia's international border. You don't necessarily need to write this command in your script, you can just enter it directly into the console

After using the st_geometry() command with our lbr_int object, RStudio provides us with a basic description that includes the geometry type (polygons in this case, but it could also return points or lines), the x & y minimum and maximum values or also known as the bounding box (bbox), the epsg spatial reference identifier (a number used to identify the projection) and finally the projection string , which provides additional information about the projection used.

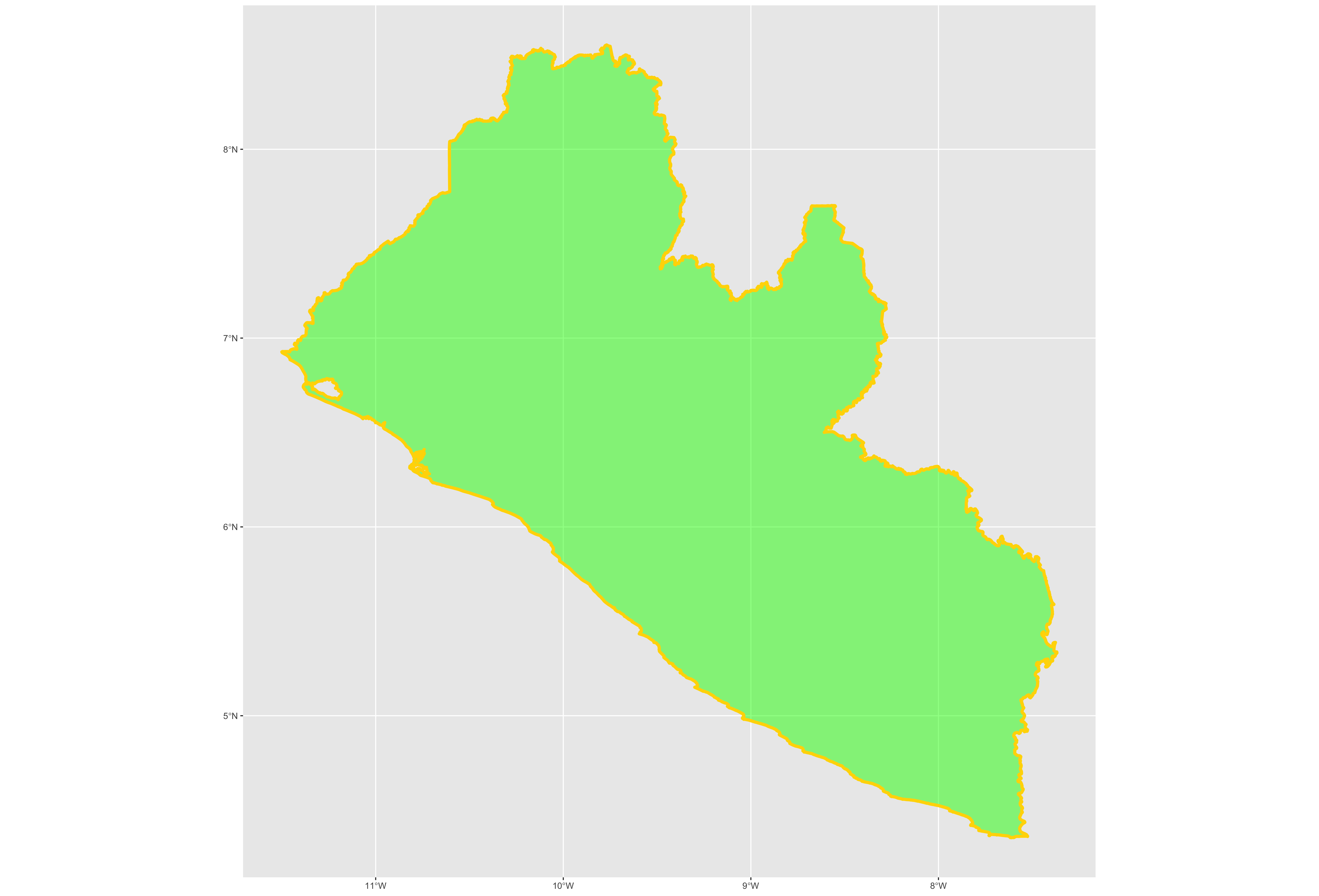

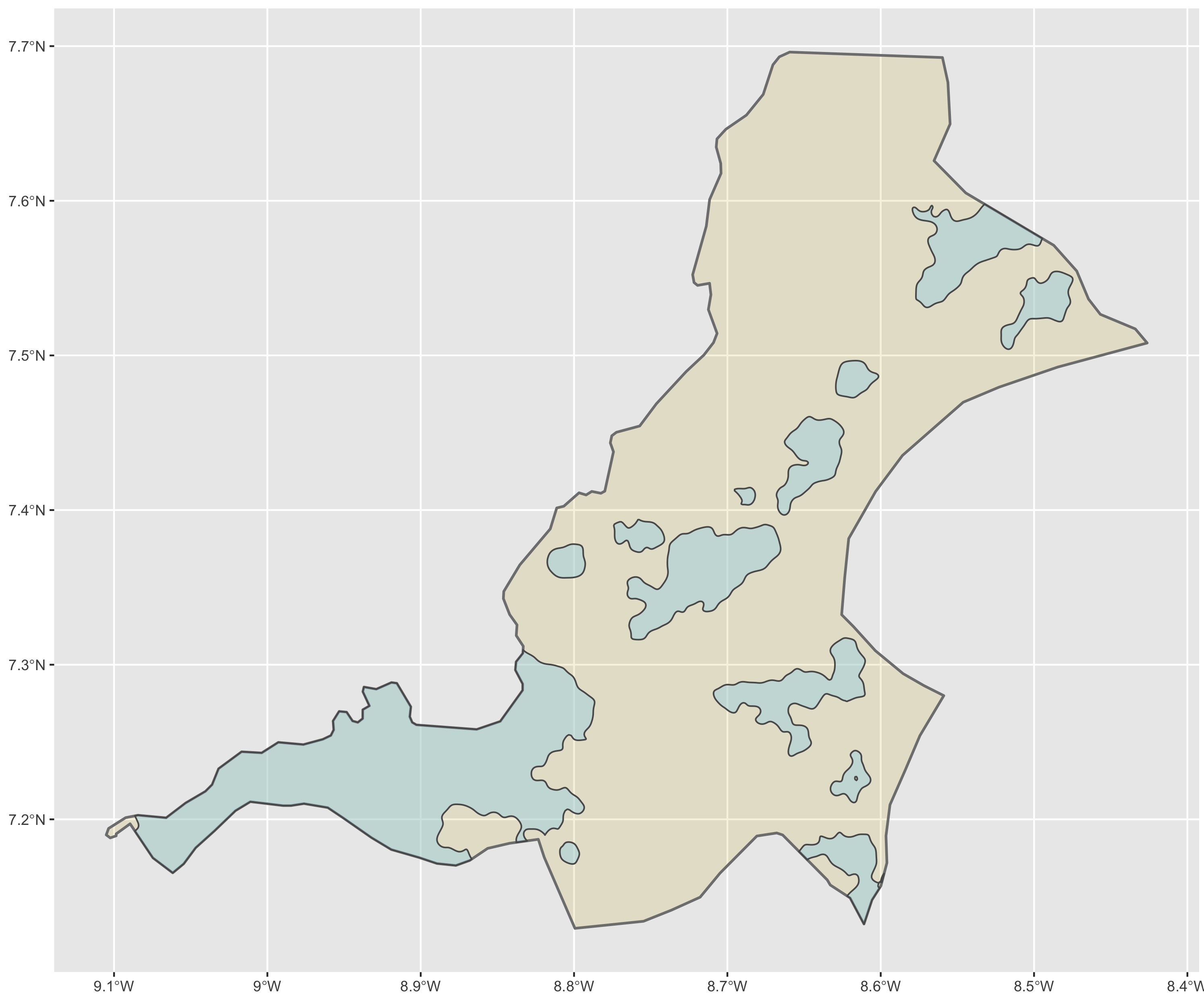

Now that we have conducted a cursory investigation of our simple feature object geometry, let's plot our simple features class object that describes the international border of Liberia. To plot, we will use a series of functions from a package called ggplot(). The gg in the package name ggplot:: stands for the grammar of graphics, and is a very useful package for plotting all sorts of data, including spatial data classes created with the sf:: package. To start add ggplot() + to your script and then on the following line add the geom_sf(data = your_sf_obj) in order to specify the data that ggplot() should use in producing its output.

ggplot() +

geom_sf(data = your_sf_obj)

Following the data = argument, you can also specify the line weight for the border using the size = argument. It is also possible to specify the color = as well as the opacity / transparency of your polygon using the alpha = argument. With the following script I have set the international border line weight width to 1.5 , the color of the border to "gold" , the fill color for the internal portion of the polygon to "green" and the alpha = value to .5 or 50% transparent.

It would also be helpful to have a label describing our plot. In order to do this we can use either the geom_sf_text() command or the geom_sf_label() command. In the following snippet of code you will notice that I have added the aesthetics argument within my geom_sf_text() command. The aes = argument enables us to specify which variable contains the label we will place on our object. If you click on the blue arrow to the left of the lbr_int object in the top right data pane, the object will expand below to reveal the names of all variables. The second variable is named NAME_0 and provides us with the name we will use as our label, Liberia. Following the aes() argument, you can also specify the size = of your label as well as its color =.

ggplot() +

geom_sf(data = your_sf_obj,

size = value,

color = "color",

fill = "color",

alpha = value_between_0_&_1) +

geom_sf_text(data = your_sf_obj,

aes(label = variable_name),

size = value,

color = "color")

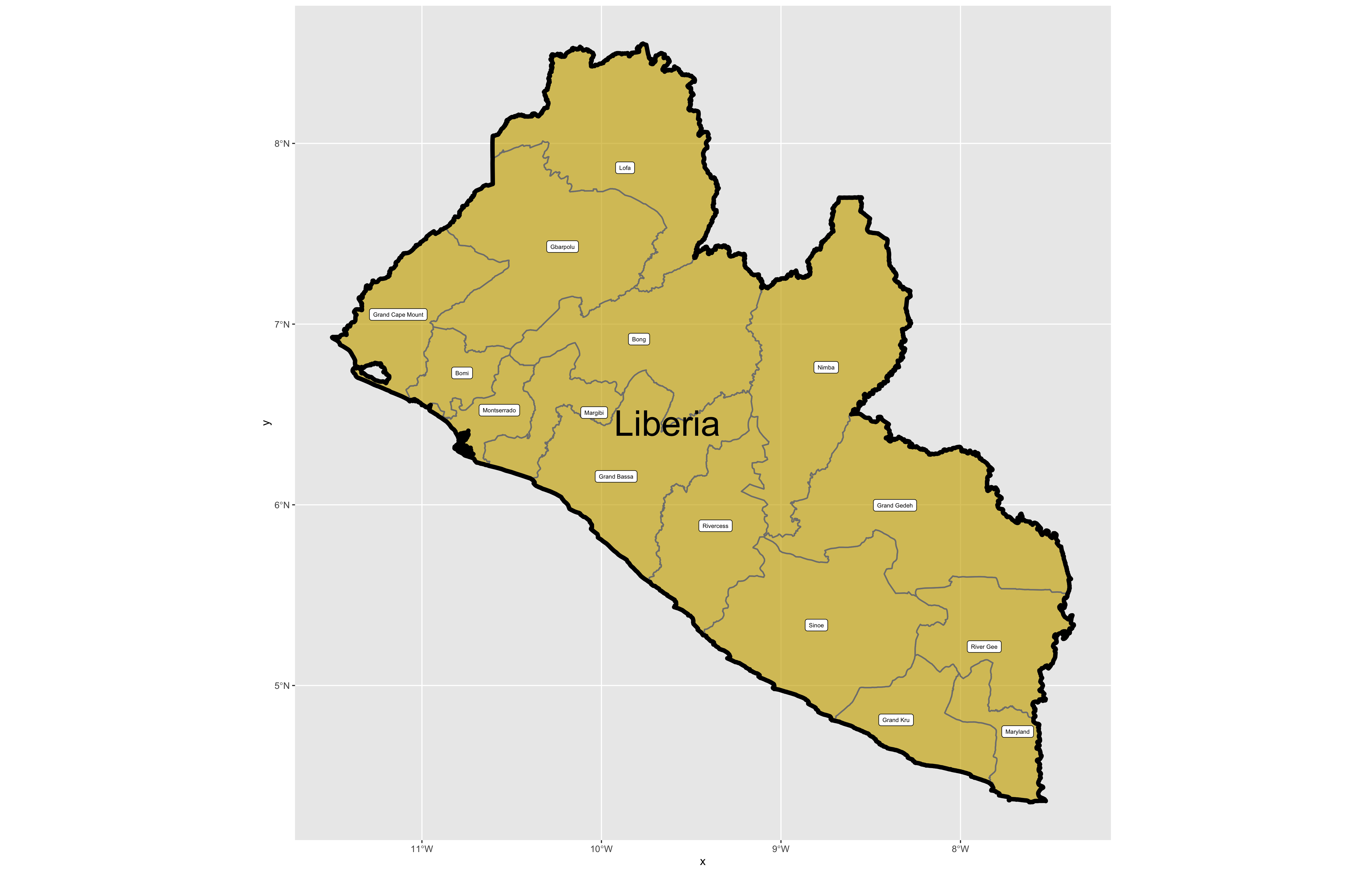

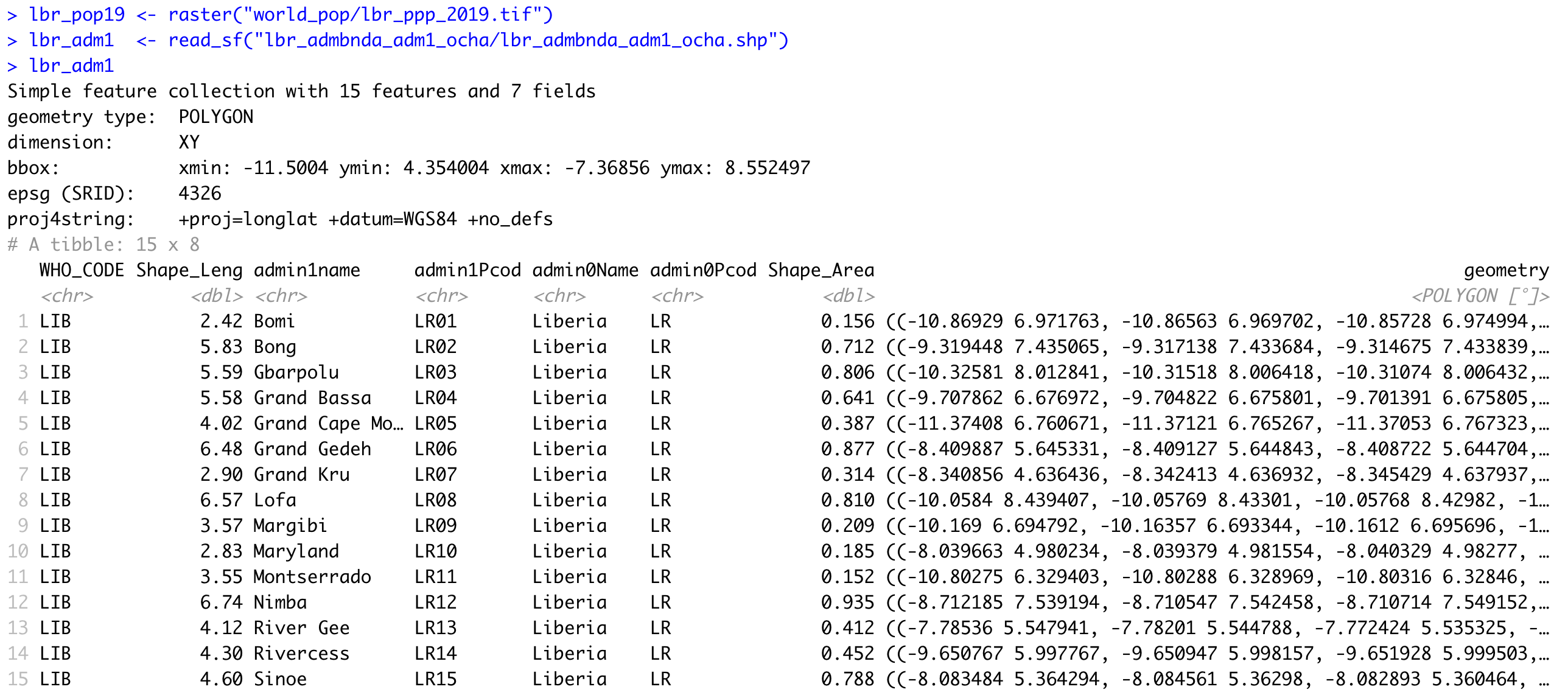

Good job! You have successfully used ggplot from the tidyverse with the simple features package in order to properly project and plot Liberia's international border, as well as to include a label. Now continue with the first level of administrative subdivisions, Liberia's fifteen counties. In order to do this, return to your use of the read_sf() command in order to import and create an object named lbr_adm1.

lbr_adm1 <- add_command_here("add_folder_here/add_file_name_here.shp")

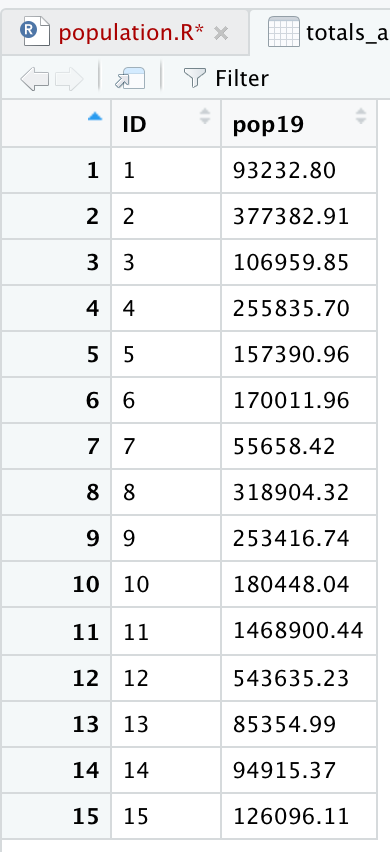

As before you could use the data pane in the top right corner to expand your view of the lbr_adm1 file you created. You can also click on the small grid symbol to the right of your data object (within the data pane) in order to view your data in a new tab in the same pane where your script is located. Whereas before we had a simple feature class object with 1 observation with 7 variables, your lbr_adm1 simple feature object has 15 observations, with each observation having 8 different variables describing some attribute associated with each individual polygon. Let's plot both Liberia's international border as well as its 15 counties.

To do this, follow the same approach you used with the lbr_int object but replace it with the name of your adm1 spatial object, lbr_adm1. Also follow the same approach you used for adding the labels in your previous snippet of code, but this time specify the variable with the county names from lbr_adm1.

ggplot() +

geom_sf(data = your_adm1_sf_obj,

size = value,

color = "color",

fill = "color",

alpha = value) +

geom_sf(data = your_int_sf_obj,

size = value,

color = "color",

fill = "color",

alpha = value) +

geom_sf_text(data = your_adm1_sf_obj,

aes(label = variable_name),

size = value,

color = "color") +

geom_sf_label(data = your_int_sf_obj,

aes(label = variable_name),

size = value,

color = "color")

The code above will produce the plot below when thegeom_sf() function using the lbr_adm1 arguments is specified with a line weight size = 0.65, line weight color = "gray50", a polygon fill = "gold3" and a 65% opacity value of alpha = 0.65. Additionally, I have set the size = 2.0 , and the alpha = 0 (100% transparent) for the data = lbr_int object. The geom_sf() command will default to a color = "black" if the line color is not specified. Additionally, since the alpha = 0 no fill = "color" is needed (since it will not appear). The county labels have a size = 2 (and also defaults to a color = "black", while the geom_sf_text() command to label Liberia has a size = 12 argument. In order to nudge the label to the east and south I have also added the nudge_x = 0.3 and nudge_y = -.1 arguments to the geom_sf_label() command.

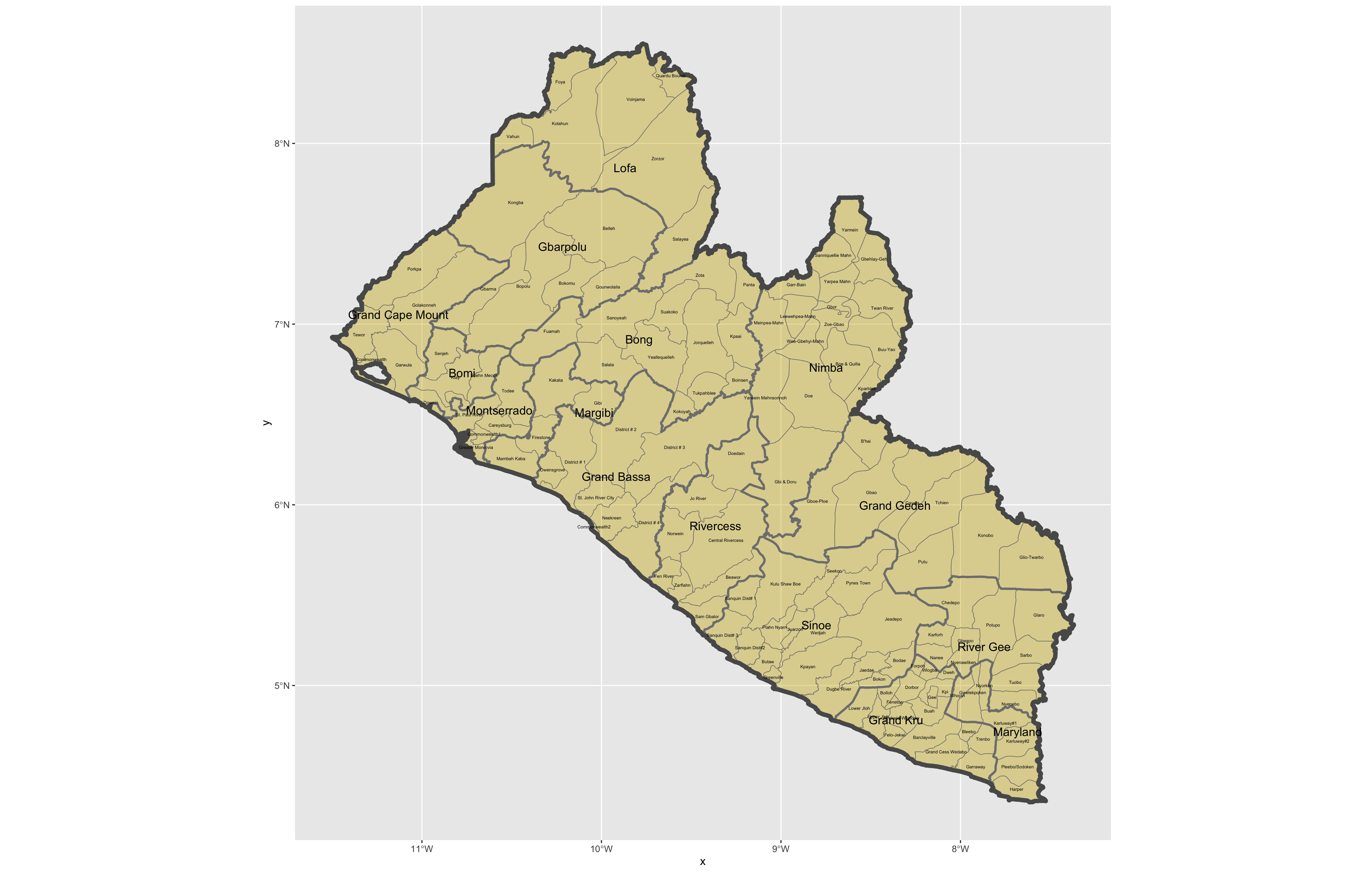

After adding the counties go back and add the second level of administrative subdivisions, or districts. Again use read_sf() to import that shapefile as a simple feature object into your RStudio workspace. Use the geom_sf_text() command to add the labels, while also making sure to specify the correct variable name in the aes(label = variable_name) argument. Size the district borders and labels so they are smaller than the internation border as well as the county delineations.

rm(list=ls(all=TRUE))

# install.packages("tidyverse", dependencies = TRUE)

# install.packages("sf", dependencies = TRUE)

library(tidyverse)

library(sf)

setwd("~/Tresors/teaching/project_folder/data")

lbr_int <- add_command_here("add_folder_here/add_file_name_here.shp")

lbr_adm1 <- add_command_here("add_folder_here/add_file_name_here.shp")

lbr_adm2 <- add_command_here("add_folder_here/add_file_name_here.shp")

ggplot() +

geom_sf(data = adm2_object,

size = value,

color = "color",

fill = "color",

alpha = value) +

geom_sf(data = adm1_object,

size = value,

color = "gray50",

alpha = value) +

geom_sf(data = int_object,

size = value,

alpha = value) +

geom_sf_text(data = adm2_object,

aes(label = variable_name),

size = value) +

geom_sf_text(data = adm1_object,

aes(label = variable_name),

size = value)

ggsave("liberia.png")

Use ggsave(file_name.png) to save your plot as a .png file, to your working directory.

Challenge Question

Follow the steps from above that you used to produce your plot of Liberia, but instead each team member should select their own LMIC country and produce the output for it. Refer this World Bank guide for a list of low, middle and high income economies. Go back to the GADM website and find the administrative boundaries for the LMIC country you have selected. Plot and label the international border, the first level of administrative subdivisions and the second level of administrative subdivisions. Make sure you designate heavier line widths for the higher level administrative subdivisions and thinner line widths for the more local governments. You may also use darker and lighter colors to discern hierarchy. Please be sure to use different label sizes and/or colors to further differentiate administrative hierarchies. Modifying annotation transparency also as needed.

Meet with your group and prepare to present the best two plots for the Friday informal group presentation. Then as a group, upload all 5 team members plots to #data100_project1 (informal group presentations) by Sunday night.

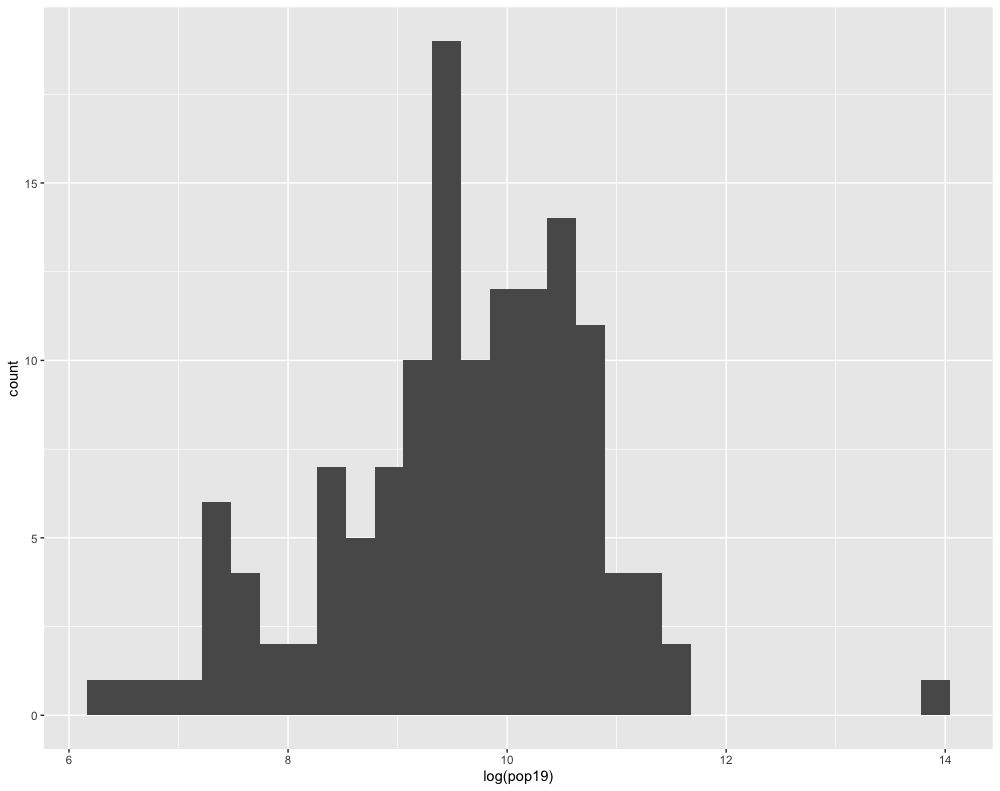

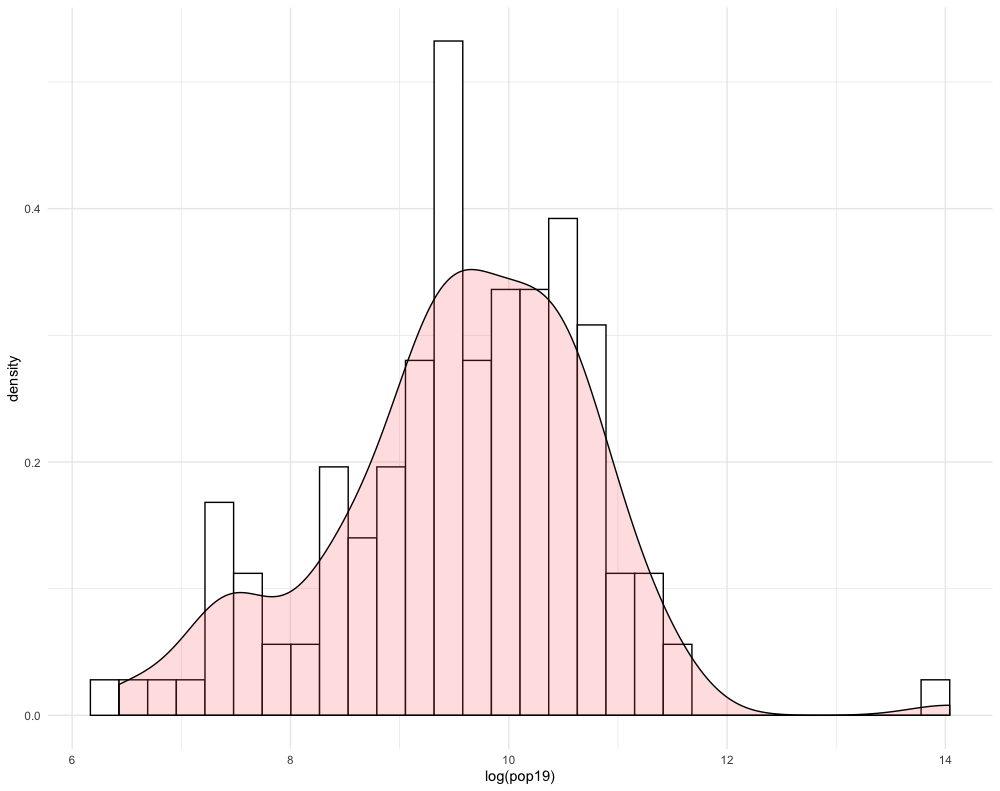

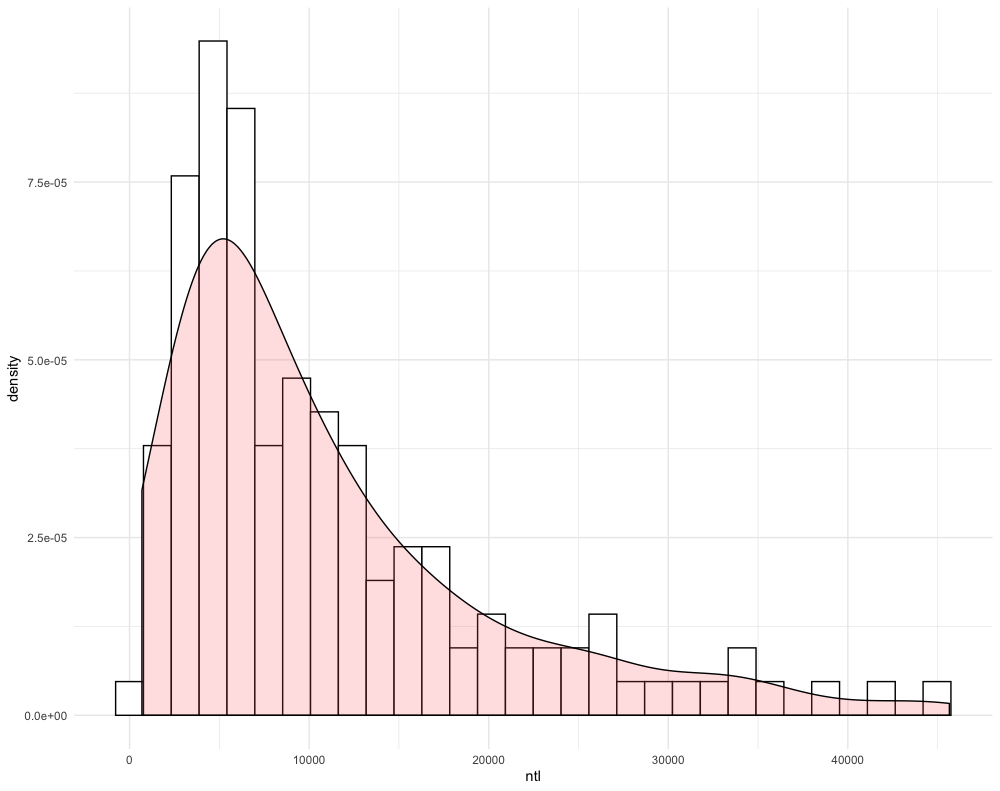

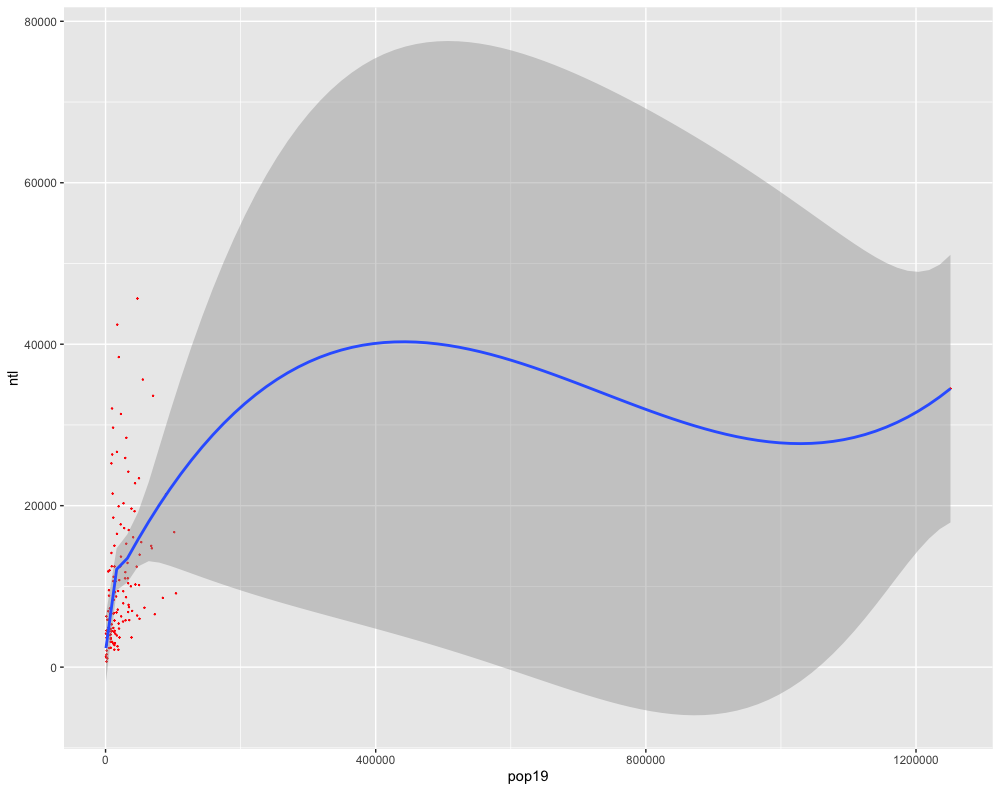

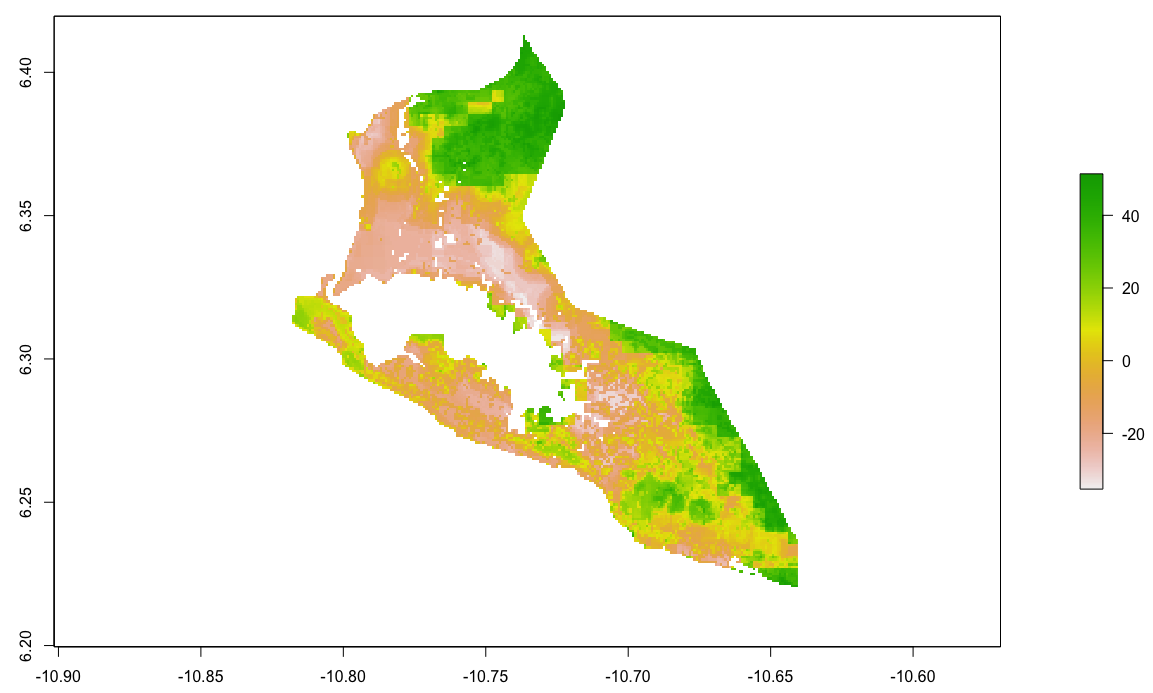

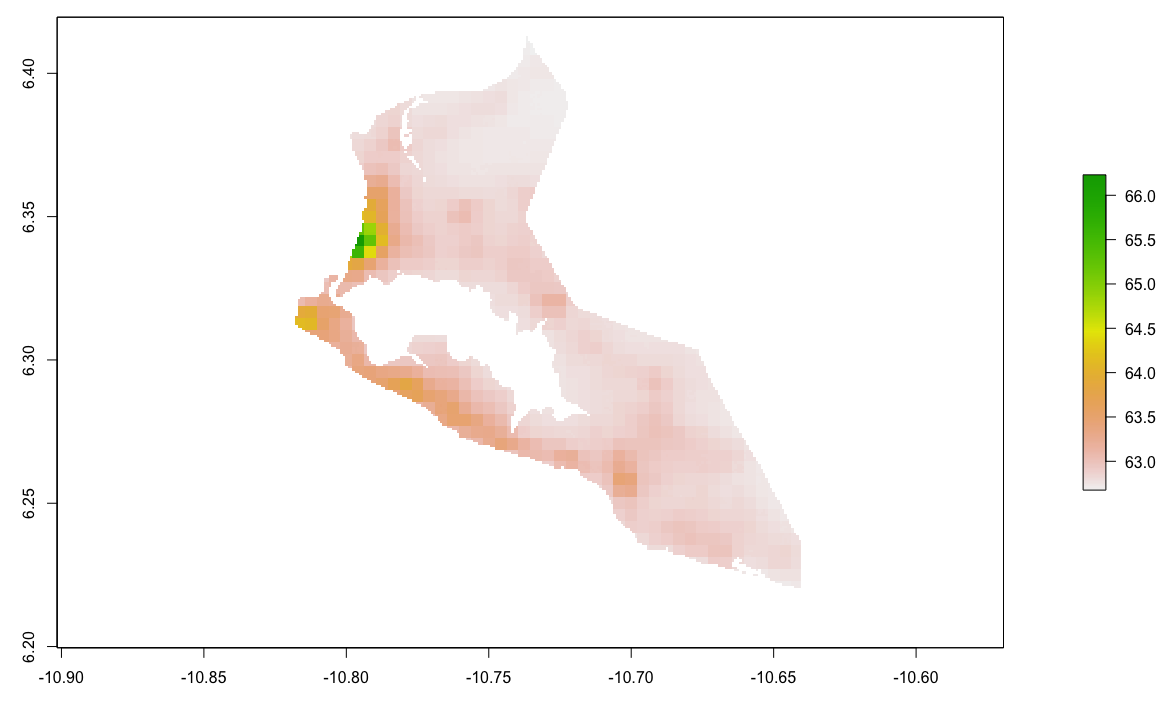

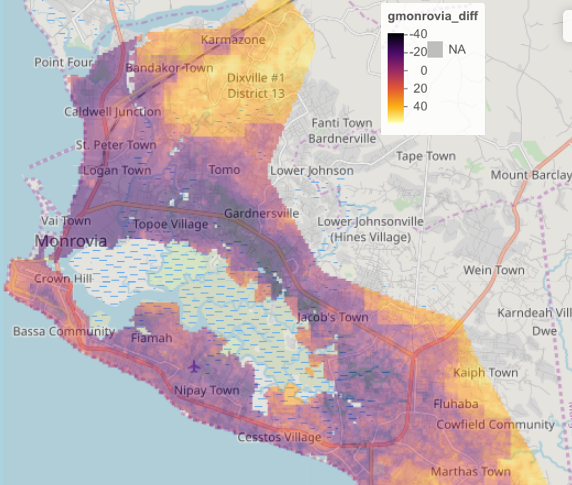

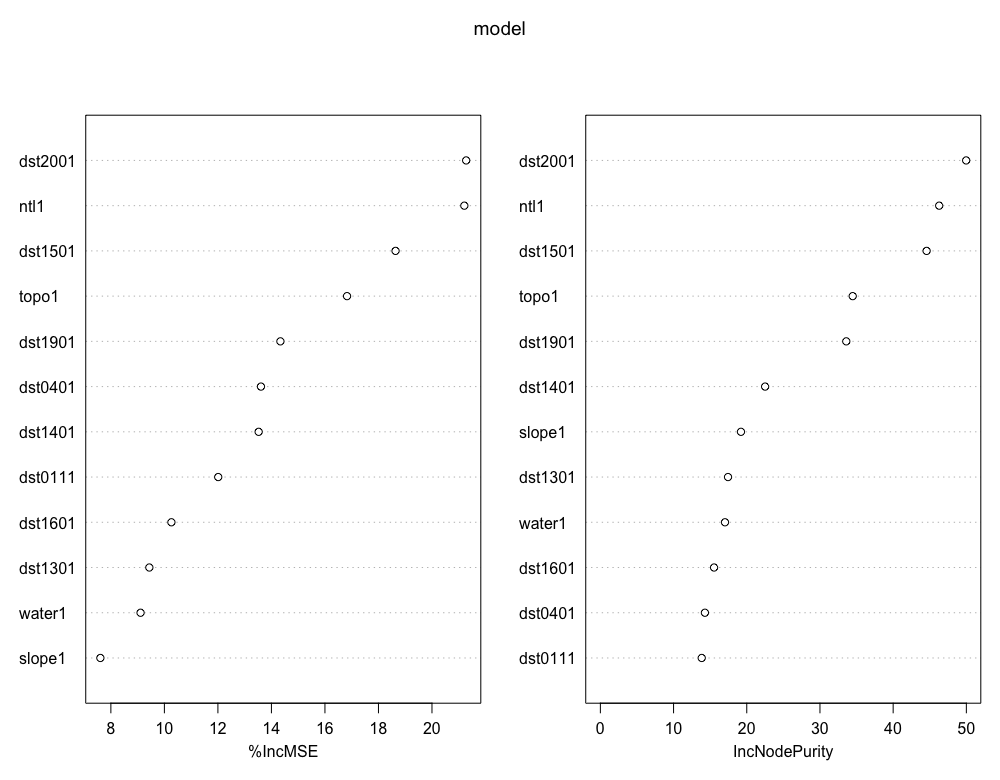

Stretch Goal 1